The recent sale of American TikTok to a consortium of US investors, spearheaded by Oracle, has ignited a firestorm of user complaints and regulatory scrutiny. While many decry immediate issues like video muting and view suppression as censorship, a closer look suggests these are more likely symptoms of systemic technical failure and the predictable downstream consequences of a rushed, politically charged acquisition. This conversation uncovers the hidden costs of such rapid transitions and the subtle ways a change in platform ownership can shift incentives, affecting everything from user experience to online discourse itself. Leaders in tech, policy, and content creation should read this to understand how seemingly minor technical glitches can snowball into major trust deficits, and how the architecture of information dissemination is open to political manipulation. Those who grasp these dynamics early gain a distinct advantage.

The Invisible Hand of Technical Debt and Misplaced Blame

American TikTok's ownership change was met almost immediately with a chorus of accusations: censorship, suppression, and a deliberate silencing of voices. Users reported videos failing to upload, videos on sensitive topics receiving zero views, and even the word "Epstein" being flagged in direct messages. The temptation is to attribute these issues to a new, politically motivated regime eager to exert control. However, the analysis presented here suggests a more mundane, yet equally impactful, culprit: massive technical failure.

The episode highlights that these widespread issues line up with the timing of a significant outage at Oracle, TikTok's new data center provider. This wasn't a case of a new leadership team flipping a "censorship" switch, which would be technically complex and immediately obvious. Instead, it was a cascading failure within the infrastructure itself. The distinction is crucial: the perception of censorship is a real problem, but the cause in this instance appears to be ordinary corporate ineptitude rather than deliberate malice.

"All of this is more easily and just as successfully explained by normal corporate ineptitude, which is that TikTok's new data center provider, Oracle, had a huge outage."

This situation offers a potent lesson in consequence mapping: the immediate, visible problem (apparent censorship) obscures the underlying, systemic cause (technical failure). The downstream effect of this misattribution is a loss of trust, amplified by the contentious nature of the sale itself. For those who grasp this, the advantage lies in separating genuine threats from transient technical problems, allowing for a more rational assessment of platform risk. The reflex to "blame the new owners" fails here because it ignores the infrastructure on which the platform actually runs.

The Shifting Sands of Algorithmic Control and Political Incentives

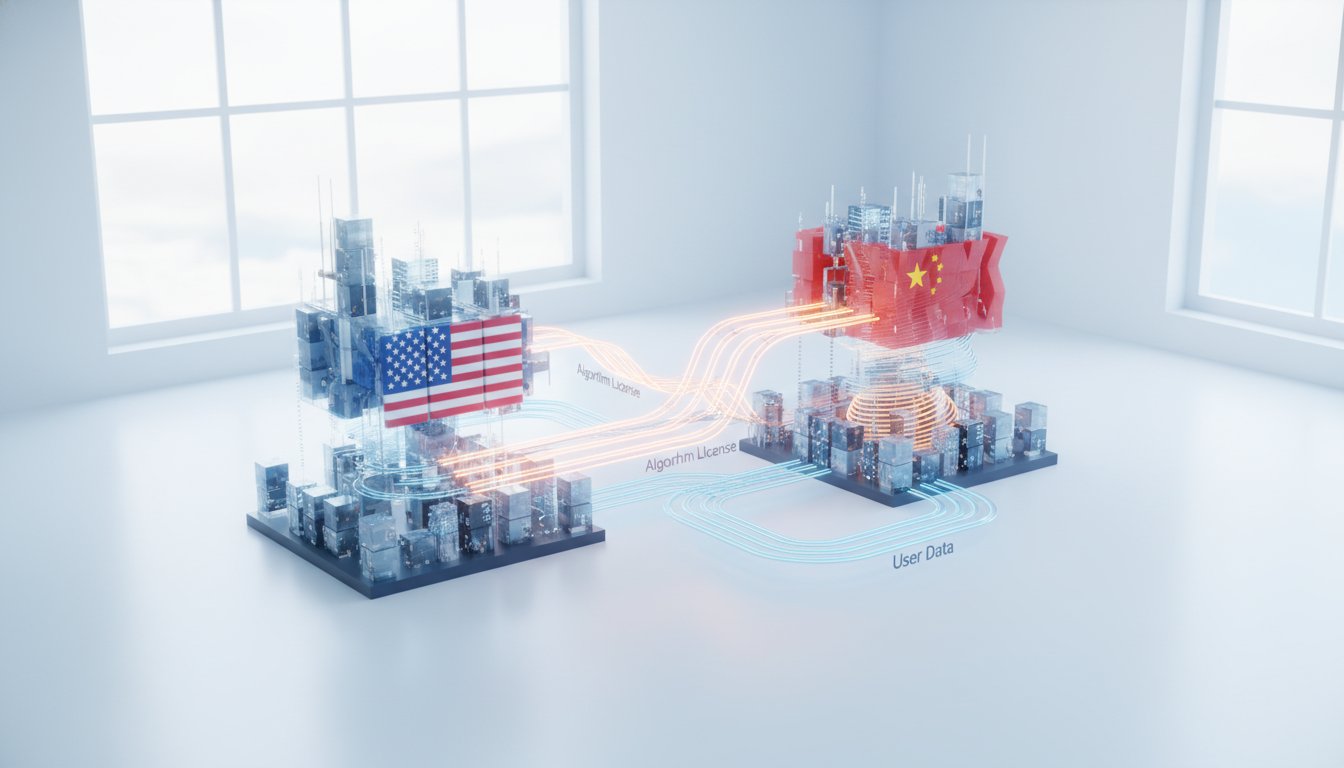

Beyond immediate technical glitches, the sale of TikTok to a US-based entity, with significant investment from Oracle and its founder Larry Ellison (a Trump ally), raises profound questions about the future of its algorithm. The core justification for the sale was to sever ties with Chinese ownership and mitigate national security concerns related to data privacy and algorithmic manipulation. However, the new ownership structure introduces a different, arguably more transparent, set of political incentives.

The episode points out that Oracle is now directly in charge of the algorithm, privacy, and data security. This means that any potential skewing of content is now under the purview of individuals with demonstrably right-leaning political affiliations. The implication is that instead of abstract fears of Chinese government influence, users now face the concrete possibility of a politically geared, right-wing coded platform.

"And so if you're Gavin Newsom, to say, 'I am going to be your champion to keep TikTok as the thing that you want and not turned into the kind of cesspool that X has become,' it's a smart move."

This shift highlights a critical system dynamic: when the ownership and operational control of a powerful information dissemination tool change hands, the incentives driving its content delivery change with it. The advantage here for observers is recognizing that the "national security" argument, while valid in its concern about foreign influence, can also serve as a pretext for domestic political control. It is uncomfortable to acknowledge that a US-based entity might wield similar, or even more overt, manipulative power, and that discomfort is precisely where lasting advantage can be found: in anticipating the political motivations that will now shape the user experience.

The Unseen Architecture of Addiction: Platform Design and Legal Reckoning

The conversation pivots to a broader, systemic issue plaguing social media: the deliberate design of platforms to foster addiction, leading to significant mental health consequences, particularly for young people. The emergence of numerous lawsuits against tech giants like Meta, YouTube, TikTok, and Snapchat underscores a fundamental critique of their product design.

Plaintiffs argue that features like endless scrolling, autoplay, and constant notifications are not accidental byproducts but intentional mechanisms engineered to maximize user engagement at the expense of well-being. This framing draws a parallel to the legal battles faced by the tobacco industry, which was accused of knowingly designing addictive products. The key difference here is the focus on platform design as the direct cause of harm, rather than solely on user behavior.

"It's really about the platform design. And so they focus on things like, you know, an endless stream of content, right? They focus on features like autoplay on YouTube, right? Notifications, product features like that that they say are really designed to just keep people returning to their sites over and over and over again, leading to really harmful consequences, whether that's anxiety, whether that's depression, untimely deaths."

The analysis argues that the "addiction" is not merely a correlation but a consequence of deliberate architectural choices. While academic research on social media addiction is nuanced, the legal cases draw on internal company documents and the direct testimony of users experiencing anxiety, depression, and insecurity. The advantage for those who understand this is recognizing that the "free" services we use are not without cost; the price is often paid in attention, mental health, and time. Admitting that these platforms are designed to be addictive, and that this design has tangible, harmful downstream effects, is uncomfortable but is a necessary precursor to reclaiming agency over our digital lives and potentially influencing future platform regulation. The long-term payoff for understanding these design choices is the ability to navigate these platforms more consciously and to advocate for more ethical design principles.

Key Action Items:

Immediate Action (Next 1-2 Weeks):

- Verify Technical vs. Political Issues: When experiencing platform disruptions (e.g., view counts, upload errors), actively seek information on underlying technical causes (e.g., provider outages) before assuming deliberate censorship. This avoids misdirected outrage and allows for clearer problem assessment.

- Review Privacy Settings: Proactively audit and adjust privacy settings on all social media platforms, particularly noting any new or expanded data collection permissions introduced post-acquisition or via updated terms of service.

- Engage with Skepticism: Approach reports of censorship or algorithmic bias with a critical eye, considering both technical explanations and potential political motivations.
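For readers who want to make the first item above concrete, here is a minimal sketch in Python of one way to check how many disruption reports fall inside a known provider outage window. Everything in it is an assumption for illustration: the function names, the two-hour grace period for lingering effects, and the placeholder timestamps are hypothetical and do not come from the episode or from any real status page.

```python
from datetime import datetime, timedelta


def within_outage(event, start, end, grace=timedelta(hours=2)):
    """Return True if an event falls between the outage start and end plus a grace period."""
    return start <= event <= end + grace


def share_explained(events, start, end, grace=timedelta(hours=2)):
    """Return the fraction of reported disruptions that fall inside the outage window."""
    if not events:
        return 0.0
    hits = sum(within_outage(e, start, end, grace) for e in events)
    return hits / len(events)


# Placeholder values only; substitute times taken from a provider's status page
# and from your own notes on when each disruption was observed.
outage_start = datetime(2000, 1, 1, 9, 0)
outage_end = datetime(2000, 1, 1, 15, 0)
reports = [
    datetime(2000, 1, 1, 9, 30),   # upload failed
    datetime(2000, 1, 1, 14, 0),   # zero views
    datetime(2000, 1, 1, 16, 30),  # still inside the grace period
    datetime(2000, 1, 1, 23, 0),   # well after the outage
]

print(f"{share_explained(reports, outage_start, outage_end):.0%} of reports overlap the outage")
```

A high overlap does not prove a technical cause, and a low one does not prove censorship; the point is only to check timing before drawing conclusions.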

Short-Term Investment (Next 1-3 Months):

- Diversify Content Sources: Reduce reliance on any single platform for news and information. Actively seek out diverse sources and perspectives to mitigate the impact of potential algorithmic skewing on any one platform.

- Educate on Platform Design: Research and understand the common design patterns used by social media platforms that encourage prolonged engagement (e.g., infinite scroll, notifications). This awareness is the first step to countering their effects.

- Advocate for Transparency: Support initiatives and discussions calling for greater transparency in platform algorithms and data usage policies, particularly regarding post-acquisition changes.

Long-Term Investment (6-18 Months):

- Develop Digital Well-being Habits: Consciously implement strategies to manage screen time and social media consumption, recognizing that these platforms are designed for addiction. This may involve scheduled usage, notification batching, or designated "digital detox" periods. Accepting this discomfort now creates a significant advantage in maintaining mental health and focus.

- Monitor Regulatory Landscape: Stay informed about ongoing legal challenges and regulatory efforts concerning social media addiction and platform accountability. This awareness can inform personal choices and potential advocacy.