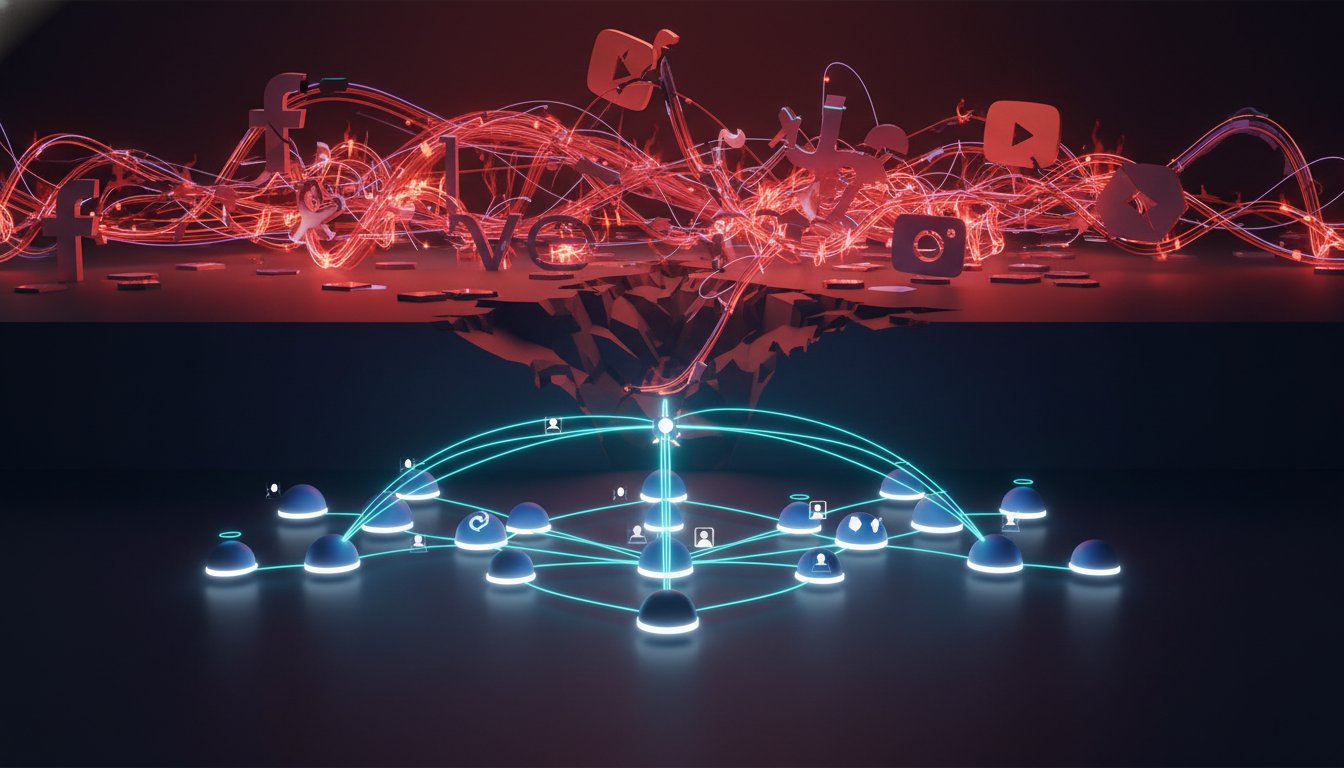

This conversation reveals a critical inflection point for social media giants: the fight is shifting from a defense of free speech to a battle over product design and its addictive nature. The non-obvious implication is that the very features designed to maximize engagement (infinite scrolling, autoplay, and algorithmic content curation) are now the smoking gun in a wave of personal injury lawsuits. These legal challenges represent a potential "big tobacco moment" for social media, one that could threaten business models predicated on constant user attention. Individuals and state attorneys general should pay close attention. These lawsuits could redefine accountability for digital platforms, offer a path to redress for widespread mental health harms, and force fundamental changes in how these technologies are built and deployed. Anyone tracking how digital platforms will be regulated and litigated in the coming years needs to understand these dynamics.

The Algorithmic Hook: Why Engagement Becomes the Core Liability

The traditional defense for social media platforms has rested on the First Amendment, framing their services as conduits of speech, not manufacturers of harm. However, the current wave of litigation, spearheaded by individuals, school districts, and state attorneys general, bypasses this shield by focusing on the technologies themselves. The argument is no longer about what content is hosted, but how the platforms are engineered to be addictive, leading to a cascade of personal injuries including anxiety, depression, and suicidal thoughts. This is a fundamental shift, moving from content moderation debates to product liability, and it’s precisely where the danger lies for the companies.

The core of these claims centers on features like infinite scrolling and autoplay videos. Plaintiffs argue these aren't mere design choices but deliberate mechanisms to maximize user engagement, which directly fuels the advertising-based business model. As Cecilia Kang explains, "The business model is advertising. And what really fuels advertising revenue is engagement. Engagement is at the heart of this. And these tools are meant to keep people more engaged." This alleged link between addictive design and revenue generation is the linchpin of the plaintiffs' case.

"The crux of this is that these are personal injury claims, right? And that effectively allows the plaintiffs to sidestep what has traditionally shielded these companies from liability, which is their free speech defense."

-- Cecilia Kang

The implication is that the very features prized for keeping users "hooked" are now evidence of a product designed to be harmful. By focusing on these technological hooks, plaintiffs aim to prove three things: social media companies engineered addictive products, knew their potential for harm, and withheld this knowledge from the public. This strategy also challenges the companies' ability to rely on Section 230 of the Communications Decency Act, which shields platforms from liability for user-generated content. A claim about how a product is designed is harder to characterize as a claim about the content it hosts. That puts the platforms on far more perilous territory.

The "Big Tobacco Moment": When Design Becomes a Weapon

The comparison to Big Tobacco is not hyperbole; it frames the existential threat these lawsuits pose. For decades, tobacco companies successfully deflected blame, but sustained litigation eventually exposed internal knowledge of addiction and harm. The current lawsuits aim to replicate this by leveraging internal company documents. Plaintiffs' lawyers are sifting through "hundreds of thousands of documents" that allegedly reveal the companies' awareness of the problematic effects of their products on young people.

One striking example involves Meta's internal study of beauty filters on Instagram. The study found the filters were toxic, particularly for young girls. Employees pleaded for change, including one executive whose own daughter suffered from body dysmorphia. Even so, CEO Mark Zuckerberg reportedly reinstated the filters. In this internal conflict, engagement metrics seemingly trumped documented harm. This is precisely the kind of evidence plaintiffs will use to argue that the companies knew their products were harmful and chose to prioritize profit.

"The companies' lawyers are expected to argue that there are many factors that go into mental health issues. They're going to say that it's multifactorial. It could be school problems, stress with friends, or it can be all kinds of factors that lead to anxiety, depression, and other mental health disorders, and not social media alone."

-- Cecilia Kang

This highlights a critical point of contention: causation. The companies will argue that mental health issues are multifactorial. The plaintiffs' strategy is to demonstrate a direct causal link between engineered addictive features and specific harms. Persuading juries will depend on the internal documents, combined with expert testimony on the addictive nature of features like infinite scrolling and algorithmic recommendations. Plaintiffs are betting that jurors, many of whom have personal experience with social media's effects on themselves or their families, will be more receptive to these claims than the companies' legal defenses would suggest.

The Unseen Cost of Engagement: Delayed Payoffs and Competitive Moats

The business model of social media is fundamentally built on engagement, and the features that drive it--infinite scroll, autoplay, Snap Streaks--are designed for maximum user retention. The immediate payoff for the companies is increased ad revenue. However, the downstream effect, as highlighted by these lawsuits, is the potential for significant personal injury to users, particularly minors. This creates a stark dichotomy: immediate corporate profit versus long-term user well-being and potential legal liability.

The plaintiffs are not just asking for monetary damages; they are demanding changes to the platforms' core design. Their demands include stronger age verification, enhanced parental controls, and, crucially, the removal of addictive features like infinite scroll and autoplay. For companies whose business models depend on maximizing engagement through these very features, such changes represent an existential threat. Yet the short-term pain of altering or dismantling core revenue-generating mechanisms could turn into a lasting competitive advantage. That advantage would go to companies that adapt early, or to new entrants that prioritize user well-being from the outset.

"The other thing to keep in mind is that the companies, especially Meta and YouTube, really feel strongly that they have a good case on their side. And they will bring up speech protections, like you mentioned, Rachel. They are going to say that there is a law known as Section 230 of the Communications Decency Act that shields internet companies from the content they host because Section 230 has been so broad and so strongly used in their favor in so many different instances."

-- Cecilia Kang

The conventional wisdom that platforms can simply rely on Section 230 is being challenged head-on. By framing the issue as product design rather than content, plaintiffs aim to sidestep this shield. The companies' defense will likely focus on two points: the complexity of mental health causation and the broad protections of Section 230. However, the sheer volume of internal documents could sway juries, as could the relatable nature of the alleged harms: anxiety, depression, and body image issues. The companies' decision to fight these cases rather than settle broadly suggests confidence in their legal defenses. It is also a high-stakes gamble that could fundamentally alter their business. The best case for these companies is avoiding massive payouts and forced design changes. The worst case is a profound erosion of public trust and a precedent that reshapes the legal landscape.

Key Action Items

For Social Media Companies:

- Immediate Action: Conduct a thorough internal audit of engagement-driving features (infinite scroll, autoplay, notification systems) and assess their documented impact on user mental health, particularly minors.

- Immediate Action: Proactively develop and implement enhanced age verification and parental control tools, going beyond current superficial offerings.

- Short-Term Investment (6-12 months): Begin exploring alternative engagement models that do not rely solely on addictive design patterns. This may involve diversifying revenue streams or focusing on value-added content over sheer time-on-platform.

- Long-Term Investment (12-18 months): Fund independent, transparent research into the long-term mental health effects of platform use, and be prepared to act on findings even if they conflict with current engagement strategies.

- Strategic Shift: Prepare legal and PR strategies that acknowledge the potential for harm and focus on responsible product development, rather than solely relying on existing legal shields.

For Parents and Educators:

- Immediate Action: Educate young users about the intentionally addictive design features of social media platforms and encourage critical thinking about their usage.

- Immediate Action: Actively utilize and advocate for stronger parental controls and time-limiting features on digital devices and platforms.

For Policymakers and Regulators:

- Immediate Action: Review existing legal frameworks (like Section 230) to assess their adequacy in addressing harms stemming from product design, not just content.

- Long-Term Investment (18-24 months): Consider establishing clear guidelines or standards for platform design features that are demonstrably linked to user harm, particularly for minors.