AI Hype Obscures Non-Determinism Risks and Undermines Open Knowledge

TL;DR

- AI's non-deterministic nature poses significant risks when applied to scenarios requiring predictable, deterministic code, potentially leading to unreliability and debugging challenges that traditional software development has long solved.

- The anthropomorphic interface of LLMs can mislead non-experts into attributing personhood to them, which lets those who understand the technology's limitations exploit that belief by misrepresenting its capabilities.

- Over-reliance on LLMs for tasks better suited to deterministic code, driven by hype and investment, can lead to increased costs, reduced predictability, and the introduction of unnecessary complexity.

- Treating AI as a "normal technology" fosters honest evaluation and preparation for harms, preventing the exaggeration of capabilities that obscures real-world risks and hinders effective criticism.

- Democratizing access to technology, a core ethos of communities like Stack Overflow, is undermined by AI hype that creates myths and obscures the underlying principles, betraying the spirit of open knowledge sharing.

- The success of AI coding tools is directly enabled by the open sharing of knowledge within communities like Stack Overflow, creating a reciprocal obligation to maintain generosity and prevent exploitation of community contributions.

- The evolution of coding abstractions, from assembly to prompt engineering, will always create new entry points, but the inherent social nature of coding and the eventual leakage of abstractions will continue to draw curious individuals deeper into the stack.

Deep Dive

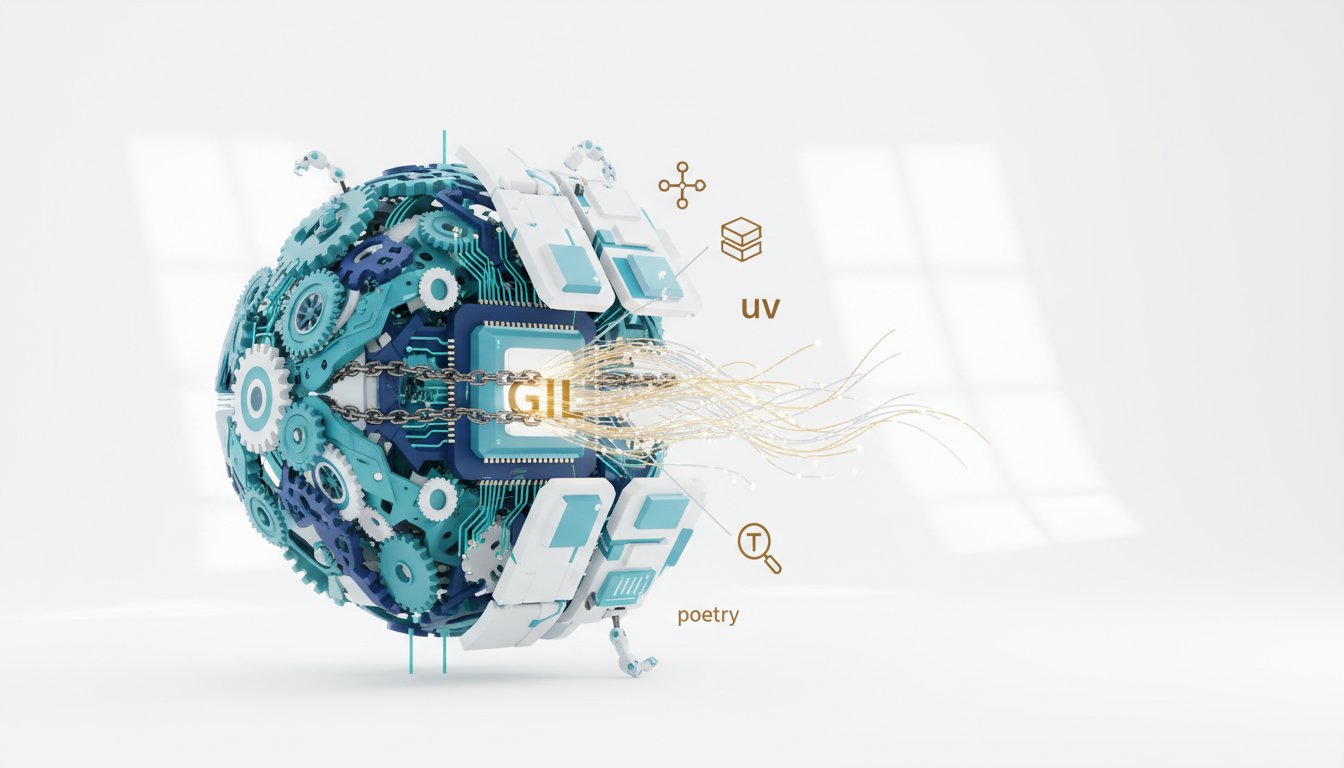

AI represents the next logical step in computational evolution, not a magical new paradigm, and should be evaluated as such. While current AI, particularly large language models (LLMs), offers significant advancements in accessibility and automation, the hype surrounding it often obscures its limitations and exploits a public misunderstanding of its non-deterministic nature. This misrepresentation risks undermining honest technical evaluation, fostering unrealistic expectations, and creating dangerous vulnerabilities by misapplying non-deterministic tools to problems requiring predictable solutions.

The core of the issue lies in AI's anthropomorphic interface, which leads users to attribute human-like thought processes and intentions to LLMs. This is particularly problematic when AI "hallucinates" or makes errors. Instead of recognizing these as predictable outputs of a complex statistical model, non-expert users may read them as human-like lapses and file the bugs in the wrong category. That gap is exploitable by those who deliberately misrepresent AI capabilities. Furthermore, the inherent non-determinism of LLMs contrasts sharply with a foundational property of software engineering: determinism. Applying LLMs to tasks that demand precise, predictable outcomes, such as critical scripting systems, introduces unnecessary complexity, cost, and fragility. This often happens not because LLMs are the best tool for the job, but because of corporate pressure to adopt "AI" for its own sake, which forces engineers to integrate unreliable technologies into stable systems.
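The contrast between deterministic code and sampled output can be made concrete with a toy sketch. It is not how any particular LLM is implemented: `slugify`, `sample_token`, and the `LOGITS` scores are hypothetical names and numbers invented for illustration, and real systems have further sources of variation that this omits.

```python
import math
import random


def slugify(title: str) -> str:
    """Deterministic: the same input always yields the same output."""
    return "-".join(title.lower().split())


def sample_token(logits: dict[str, float], temperature: float,
                 rng: random.Random) -> str:
    """Pick one token from a softmax over (hypothetical) scores."""
    if temperature <= 0:
        # Greedy choice: always the highest-scoring token.
        return max(logits, key=logits.get)
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled.values()]
    return rng.choices(list(scaled), weights=weights, k=1)[0]


# Made-up next-token scores for a shell command suggestion.
LOGITS = {"rm": 2.0, "mv": 1.6, "cp": 1.5}

if __name__ == "__main__":
    print(slugify("My Code Runs"))
    rng = random.Random()
    # Repeated calls with identical input can return different tokens.
    print({sample_token(LOGITS, 1.0, rng) for _ in range(200)})
```

A test can assert the exact result of `slugify`, whereas a test of `sample_token` at a positive temperature can only assert properties of a distribution. That difference is what makes sampled output awkward in places where a single wrong command matters.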

The democratization of technology, a principle championed by communities like Stack Overflow, is threatened by this trend. The open sharing of knowledge on platforms like Stack Overflow, which fueled AI's development, is being overshadowed by a narrative that positions AI as an esoteric, exclusive domain controlled by a few. This not only betrays the ethos of open access but also prevents a clear-eyed assessment of AI's risks and benefits. While AI can empower individuals to create without deep technical expertise, this power must be balanced with an understanding of its limitations. The "slop art" or insecure code generated by inexperienced users is a predictable outcome, but it can be mitigated by combining AI tools with established software engineering practices, such as security scanners and deterministic code, rather than embracing an "all-LLM" approach. The future of software development will likely involve a spectrum of expertise, with prompt-based engineers at one end and those working with lower-level languages at the other. All of them are driven by human curiosity and the need to solve problems, so deeper technical skills will remain relevant as abstractions inevitably "leak" and break.
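One way to pair generated code with a deterministic check is to run it through a static gate before accepting it. The sketch below is a minimal illustration using Python's standard `ast` module, not a real security scanner: `review_generated_code` and the `RISKY_CALLS` denylist are hypothetical names, and the denylist is far too small for production use.

```python
import ast

# Hypothetical denylist for illustration; a real scanner covers far more.
RISKY_CALLS = {"eval", "exec", "system"}


def review_generated_code(source: str) -> list[str]:
    """Return a list of findings; an empty list means the checks passed."""
    try:
        tree = ast.parse(source)
    except SyntaxError as err:
        return [f"syntax error on line {err.lineno}: {err.msg}"]

    findings = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Call):
            func = node.func
            # Handle bare names (eval) and attribute calls (os.system).
            if isinstance(func, ast.Name):
                name = func.id
            else:
                name = getattr(func, "attr", None)
            if name in RISKY_CALLS:
                findings.append(f"line {node.lineno}: call to {name}()")
    return findings


if __name__ == "__main__":
    generated = "import os\nos.system('ls')"  # stand-in for model output
    for finding in review_generated_code(generated):
        print(finding)
```

Because the gate itself is ordinary deterministic code, the same input always produces the same findings, so it can be unit-tested and run in CI regardless of how the code under review was produced.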

The enduring value of communities like Stack Overflow lies in their commitment to open knowledge sharing and collaborative problem-solving. As AI platforms are trained on this community-generated data, there is a reciprocal obligation to uphold the spirit of generosity and accessibility. The principle of democratizing access to technology, allowing more people to create and innovate, should guide the integration of AI. However, this must be pursued with a clear understanding of AI as a tool within a broader technological continuum, not as a mystical force. The success of AI hinges on its rational application, using it for tasks it excels at while relying on robust, deterministic tools for areas requiring reliability and security. This balanced approach ensures that technological progress empowers rather than exploits, fostering a healthier ecosystem where innovation is grounded in clear understanding and responsible practices.

Action Items

- Audit LLM usage: For 3-5 current projects, evaluate if deterministic code is a more suitable tool than LLMs for core functionality.

- Create LLM integration guidelines: Define 5 criteria for assessing LLM suitability, focusing on determinism, reliability, and cost-effectiveness.

- Measure LLM impact on developer productivity: Track code generation speed and bug rates for 2-3 projects before and after LLM integration.

- Draft policy for LLM-generated code review: Establish 4 key checks for security, performance, and maintainability of AI-assisted code.

- Evaluate LLM training data sources: For 2-3 internal LLM applications, assess the origin and licensing of training data to ensure ethical sourcing.

Key Quotes

"A lot of folks know this I have friends who were you know teaching in these disciplines and and creating you know software in and and you know bayesian systems and and you know other adaptive systems for years and decades and so a thing that folks you know in the stack overflow communities will know is that there's nothing new under the sun right this this is something that has been around for many years and that certainly large language models and and the sort of related tech represent a breakthrough an evolution and there's lots of cool things that they can do but this is not something that comes out of nowhere or that there wasn't any prior art or that um coders particularly are saying oh my gosh we couldn't imagine that this was coming it is a um maybe a step change"

Anil Dash argues that the core concepts behind AI and machine learning are not new, having existed for decades. He points out that many in the software development community, particularly those familiar with Stack Overflow, recognize this history. Dash emphasizes that while large language models represent a significant evolution, they are built upon existing foundations and are not entirely novel inventions.

"And so yeah i i wanted to call it out because it's also um you know a couple parts one is you know it's bad for the economy it's bad for culture it it is bad because you have opportunists coming in but also as somebody who loves to make stuff and loves to see like the brilliant coders i know make stuff um you miss out like making stuff with code is awesome and it's like this great creative endeavor and we don't need the bs like we don't need to lie about it we don't need to make up that it like we don't need to exaggerate because the part that is real is cool and and so i i just end up very and you know not to be emotional about it but i am like i end up very resentful about these people who are misrepresenting it saying oh it it it does these exaggerated magical lies because the part that is real is cool and we could evaluate it more honestly these ways and also prepare against the harms right we could talk about what is broken or risky or dangerous more honestly if we if we just told the truth"

Anil Dash expresses frustration with the misrepresentation of AI technology, stating it is detrimental to the economy and culture by allowing opportunists to exploit exaggerated claims. He believes that the genuine capabilities of AI are already impressive and creative, and that lying or exaggerating about them detracts from this reality. Dash argues that an honest assessment would allow for better preparation against potential harms and a more accurate understanding of what is broken or risky.

"And the challenge with that is then a non expert person is putting the bugs in the wrong category but like as a technical person we can kind of translate that right we know why it's doing that but the effect of that is the non expert people are being exploited right you know because they're sort of attributing a certain degree of magic to it that the you know some of the people building these systems are taking advantage of right and and i just also think of like put all that like that's very theoretical really practically one of the magical things about software is it's deterministic like zeros and ones is a great thing right a falsifiable assertion is a great thing a test that passes is a great thing we all know that feeling of like i got this build to complete i got the single light up i got this you know this html page to render like whatever it is where you're like my code runs is an incredible feeling and part of the reason why is because it's deterministic behavior because of the nature of zeros and ones and the nature of llms is not that right and now the problem is we are trying to apply non deterministic systems to a lot of scenarios where you should have deterministic code like llms are bad at that thing and so why are we trying to use them as the hammer and all these things that ain't nails right"

Anil Dash explains that the anthropomorphic interface of large language models (LLMs) can lead non-experts to miscategorize errors, attributing them to human-like thinking rather than technical issues. He contrasts this with traditional software, which is deterministic and verifiable through tests. Dash highlights the danger of applying non-deterministic LLMs to tasks requiring predictable outcomes, likening it to using the wrong tool for the job.

"And so i i think 100% there's going to be somebody that starts as a prompt engineer who becomes a rust coder 100% somebody who goes down the rabbit hole yeah exactly so you have to have a gateway drug um so i have high confidence in that like people will always be curious people will always well the things will always break the abstractions will always leak and there's always going to be an error message where you're like what the hell is that and also one of the lessons of stack overflow coding is profoundly social people love hacking together they love showing off they love being proud of what they made they love interacting together knowledge is profoundly social i think and so i think that that aspect is always going to pull you further down the stack so i don't i don't worry for a minute about oh this is going to attenuate people's you know curiosity down down the stack at all"

Anil Dash expresses confidence that AI tools, like prompt engineering, will serve as gateways for people to explore deeper into coding, similar to how previous abstractions have functioned. He believes that curiosity, the inherent nature of technology to break or reveal complexities, and the social aspect of coding will naturally draw individuals further down the technical stack. Dash asserts that the human desire to build, share, and understand will prevent a decline in technical curiosity.

"And the reason that every single one of the big you know ai platforms and big llms is good at helping people code is because they were trained on stack overflow period yeah right and it's the generosity of this community in sharing information openly that made it possible and so there's i feel a social obligation to be reciprocal to like share that spirit and so when you know and so to to not you know return the favor and be generous in spirit and say no no no this is this is this rare thing that only these handful of people handful of companies can do and not listen to the expertise of the community who are saying treat this like other technologies and like there are some exceptions i'm sure there are some people that are like no no this is unlike anything else before and you have to treat it as a magical special thing but overwhelmingly 99.9% of coders i talk to of you know product people i talk to designers i talk to are all like can we just be normal about this"

Anil Dash emphasizes that the success of AI platforms in coding assistance is directly attributable to their training on Stack Overflow data. He feels a social obligation to return the community's generosity by sharing in the same open spirit. Dash argues against the notion that AI is a rare, exclusive technology that only a handful of companies can build, asserting that the vast majority of technical professionals he talks to want it treated as a normal technology, like any other.

Resources

External Resources

Articles & Papers

- "Settle down, nerds. AI is a normal technology" (Blog Post) - Discussed as the primary topic of the podcast episode, exploring AI as a normal technological evolution rather than magic.

- "the last time Anil was on the pod in 2020 to talk all things Glitch and Glimmer." (Blog Post) - Referenced as a previous discussion with Anil Dash.

- "Using type hint Any in Django - NameError: name 'Any' is not defined" (Stack Overflow Question) - Mentioned as the question for which user pgrad won a Lifejacket badge.

People

- Anil Dash - Guest on the podcast, writer, former Stack Overflow board member, and tech entrepreneur.

- Ryan Donovan - Host of The Stack Overflow Podcast and editor of the blog.

- Arvind Narayanan - Researcher and writer credited with coining the phrase "normal technology."

- Joel Spolsky - Co-founder of Stack Overflow.

- Jeff Atwood - Co-founder of Stack Overflow, working on a project around Universal Basic Income.

- pgrad - Stack Overflow user who won a Lifejacket badge for an answer to a Django-related question.

Organizations & Institutions

- Stack Overflow - Community and platform for software developers, discussed for its ethos of open information sharing and its role in training AI models.

- Fog Creek Software - Company formerly led by Anil Dash, referred to as a sister company of Stack Overflow.

Websites & Online Resources

- anildash.com - Anil Dash's blog.

- linkedin.com/in/anildash/ - Anil Dash's LinkedIn profile.

- stackoverflow.blog/2025/12/23/settle-down-nerds-ai-is-a-normal-technology/ - URL for the transcript of the podcast episode.

- stackoverflow.com/users/8629305/pgrad - Stack Overflow profile for user pgrad.

- stackoverflow.com/questions/72579982/using-type-hint-any-in-django-nameerror-name-any-is-not-defined - Stack Overflow question related to Django type hints.

Other Resources

- AI (Artificial Intelligence) - Discussed as a normal technology and the next step in computing's evolution, with emphasis on its capabilities, limitations, and the importance of honest evaluation.

- LLMs (Large Language Models) - Discussed as a breakthrough in AI, their non-deterministic nature, and the challenges they pose.

- Glitch - A community for building apps, previously led by Anil Dash.

- Glimmer - Mentioned in relation to a previous podcast discussion with Anil Dash.

- Lifejacket badge - A badge awarded on Stack Overflow for providing a helpful answer to a question with a negative score.

- Universal Basic Income (UBI) - A project being worked on by Jeff Atwood.