Python's 2025: Concurrency, Tooling, AI, and Funding Challenges

TL;DR

- The widespread adoption of tools like

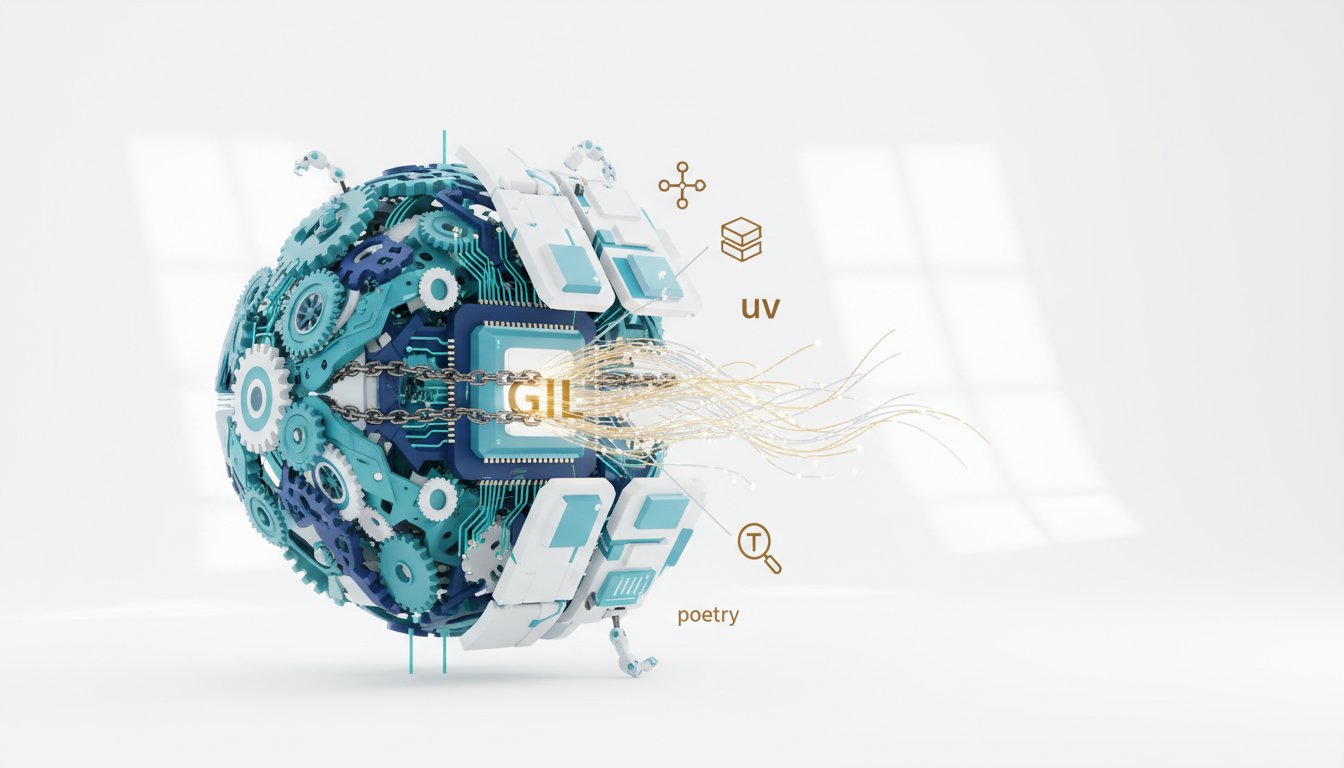

uvandpoetrysignifies a shift where the Python ecosystem now prioritizes developer experience by treating the interpreter as an implementation detail, simplifying setup and execution for beginners. - The impending removal of the Global Interpreter Lock (GIL) in free-threaded Python promises significant performance gains, potentially accelerating parallelizable tasks by 10x or more, though community adoption of updated extension modules remains a challenge.

- The AI bubble's potential economic impact and unsustainable valuations are concerning, yet the underlying technology, particularly LLMs and agentic coding tools, is here to stay, offering substantial productivity boosts despite potential code quality trade-offs.

- The increasing speed and sophistication of type checkers and language servers (LSPs), often implemented in Rust, are transforming developer workflows by reducing feedback loops to under a second, enabling more frequent and impactful code analysis.

- The Python Software Foundation (PSF) faces a critical funding crisis due to declining corporate sponsorships and grant challenges, threatening its ability to support essential infrastructure like PyPI and community initiatives.

- The PEP process for evolving Python is becoming increasingly difficult and emotionally draining, necessitating a reevaluation of how community feedback is gathered and incorporated to foster a more collaborative and positive language evolution.

Deep Dive

The Python ecosystem in 2025 is experiencing transformative growth, marked by the impending obsolescence of the GIL, the refinement of packaging tools, and the proliferation of advanced type checkers, all of which signal a more robust and accessible future for developers. These advancements, while promising significant performance gains and improved code quality, also introduce new complexities in tool adoption and community engagement, necessitating a strategic focus on education and broader participation to fully realize their benefits.

The year 2025 saw significant progress in Python's core infrastructure and developer experience, spearheaded by the impending removal of the Global Interpreter Lock (GIL) and the maturation of packaging tools. The advent of "free-threaded" Python, officially supported in version 3.14, promises to unlock substantial performance gains, potentially by a factor of 10x for highly parallel problems, by enabling true multithreading. This development, however, hinges on community adoption and the updating of third-party extension modules, a process that requires significant educational outreach to overcome potential inertia and ensure broad compatibility. Concurrently, the rise of tools like uv, hatch, and pdm is simplifying Python's execution environment, abstracting away complex setup processes and treating Python itself as an implementation detail. This shift, while enhancing the user experience, particularly for beginners and in educational settings, raises questions about the "magic" involved and the potential difficulty for users to debug when these abstractions break. The underlying tension lies in balancing ease of use with the need for transparency and understanding of fundamental concepts, a challenge that requires thoughtful design and clear documentation.
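The free-threaded build ships as a separate interpreter variant (commonly installed as `python3.14t`), and the GIL can still be switched back on at runtime, for example when an extension module that has not declared free-threading support is imported. A minimal sketch, assuming CPython 3.13 or later for the runtime check, of how a script can report which build it runs on and whether the GIL is actually disabled. `sys._is_gil_enabled()` is underscore-prefixed and CPython-specific, so treat it as a diagnostic rather than a stable API; the helper name is illustrative.

```python
import sys
import sysconfig


def free_threading_status() -> dict[str, bool]:
    """Report whether this is a free-threaded build and whether the GIL is active."""
    # Py_GIL_DISABLED is 1 on free-threaded builds, and 0 or None on standard builds.
    free_threaded_build = bool(sysconfig.get_config_var("Py_GIL_DISABLED"))
    # sys._is_gil_enabled() exists on CPython 3.13+; older versions always have the GIL.
    is_gil_enabled = getattr(sys, "_is_gil_enabled", lambda: True)
    return {
        "free_threaded_build": free_threaded_build,
        "gil_enabled": bool(is_gil_enabled()),
    }


if __name__ == "__main__":
    print(sys.version)
    print(free_threading_status())
```

On a standard build this reports the GIL as enabled; on a free-threaded build, `gil_enabled` turning `True` is a hint that some imported extension module has not yet been updated.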

Beyond infrastructure, the Python community is grappling with the implications of rapid AI advancement and the sustainability of its open-source model. The discourse around AI, particularly Large Language Models (LLMs), has shifted from unbridled optimism to a more pragmatic assessment, with the perceived bubble around AGI showing signs of bursting following the release of GPT-5. While LLMs are recognized as powerful tools for language tasks and coding assistance, concerns are mounting over unsustainable business models and the potential for economic disruption. Sustainability concerns also reach the Python community itself: the Python Software Foundation (PSF) faces funding challenges due to decreased corporate sponsorship, exacerbated by the decline in in-person conferences. The reliance on a small number of key sponsors and the limited participation in funding initiatives point to a potential crisis in which growing community needs outpace available resources. This funding gap is further complicated by the increasing complexity of Python's evolution, with the PEP process proving emotionally draining and time-consuming for contributors, potentially discouraging valuable input.

Finally, the increasing sophistication and performance of type checkers and language servers (LSPs) are fundamentally reshaping Python development. Tools like Pyright, Pyrefly, and Ty, several of them implemented in Rust for speed, are dramatically reducing the time required for type checking and code analysis, often to under a second. This has made static analysis a more integral and less burdensome part of the development workflow, even for complex projects. The development of a Type Server Protocol (TSP) aims to unify these efforts, allowing different type servers to feed type information to a higher-level language server. However, challenges remain regarding conformance and consistency between these tools: checkers can disagree on whether the same code contains an error, so developers need to be aware of these nuances. The integration of type hints with AI tools is also a significant development, as hints provide crucial context for LLMs, helping them generate better code and work more effectively in agentic coding workflows.
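Once each checker's verdicts have been collected, measuring disagreement between type checkers is mostly bookkeeping. The sketch below assumes a hypothetical results format (checker name, then snippet name, then whether that checker reported an error); gathering the verdicts, for instance from each checker's CLI exit status, is left out. The checker and snippet names in the usage example are placeholders, not real measurements.

```python
from collections.abc import Mapping

# Hypothetical shape: checker name -> snippet name -> True if the checker reported an error.
Results = Mapping[str, Mapping[str, bool]]


def find_disagreements(results: Results) -> dict[str, dict[str, bool]]:
    """Return the snippets on which checkers disagree, with each checker's verdict."""
    snippets = sorted({s for verdicts in results.values() for s in verdicts})
    disagreements: dict[str, dict[str, bool]] = {}
    for snippet in snippets:
        # Only compare checkers that actually reported on this snippet.
        verdicts = {
            checker: per_snippet[snippet]
            for checker, per_snippet in results.items()
            if snippet in per_snippet
        }
        if len(set(verdicts.values())) > 1:
            disagreements[snippet] = verdicts
    return disagreements


def agreement_rate(results: Results) -> float:
    """Fraction of reported snippets on which all checkers agree."""
    snippets = {s for verdicts in results.values() for s in verdicts}
    if not snippets:
        return 1.0
    return 1 - len(find_disagreements(results)) / len(snippets)


if __name__ == "__main__":
    example = {
        "checker_a": {"snippet_a.py": True, "snippet_b.py": False},
        "checker_b": {"snippet_a.py": True, "snippet_b.py": True},
        "checker_c": {"snippet_a.py": True, "snippet_b.py": False},
    }
    print(find_disagreements(example))
    # {'snippet_b.py': {'checker_a': False, 'checker_b': True, 'checker_c': False}}
    print(agreement_rate(example))
    # 0.5
```

Keeping per-checker verdicts, rather than only a count, makes it easier to see which tool is the outlier on each snippet.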

The core implication is that while Python's technical advancements in concurrency, tooling, and static analysis are accelerating its adoption and capabilities, the community must actively address issues of developer experience, funding sustainability, and process evolution. The success of these advancements hinges on fostering broader participation, ensuring equitable distribution of resources, and refining the mechanisms for language evolution to remain inclusive and efficient.

Action Items

- Audit AI discourse: Analyze 3-5 recent articles or discussions to identify hype-driven claims and counter them with evidence about what language models can actually do.

- Implement automated refactoring: Build a system to automatically refactor 5-10 core modules, incorporating test case generation and linter checks to improve code quality.

- Track type checker conformance: Measure disagreements between 3-5 type checkers (e.g., mypy, Pyright, Pyre-check) on 10-20 code snippets to identify and address inconsistencies.

- Develop funding outreach strategy: Draft a plan to secure 3-5 new corporate sponsorships for the PSF, focusing on AI companies and demonstrating ROI.

- Standardize Python execution: Create a runbook template for 3-5 common Python project types, detailing the use of tools like uv for simplified interpreter and dependency management.
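For single-file scripts, such a runbook could lean on PEP 723 inline script metadata, which uv understands. A minimal sketch, where the filename `hello_report.py` and the helper function are hypothetical; the `# /// script` comment block is the standardized part.

```python
# /// script
# requires-python = ">=3.10"
# dependencies = []
# ///
"""hello_report.py: a hypothetical script that carries its own PEP 723 metadata."""
import platform
import sys


def describe_interpreter() -> str:
    # Summarize whichever interpreter the runner (for example, `uv run`) selected.
    return f"{platform.python_implementation()} {platform.python_version()} at {sys.executable}"


if __name__ == "__main__":
    print(describe_interpreter())
```

Running `uv run hello_report.py` lets uv find or download an interpreter that satisfies `requires-python` and install any listed dependencies into an isolated environment; `uv add --script hello_report.py <package>` appends a dependency to the block, so beginners never touch a virtual environment directly.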

Key Quotes

"Python in 2025 is in a delightfully refreshing place: the GIL's days are numbered, packaging is getting sharper tools, and the type checkers are multiplying like gremlins snacking after midnight."

This quote, from the episode description, sets the stage for the discussion by highlighting key areas of advancement and excitement within the Python ecosystem for the year 2025. Michael Kennedy, the host, uses vivid imagery to convey a sense of progress and innovation across core language features, development tooling, and static analysis.

"I'm an associate professor of computer and information science and I do research in software engineering and software testing. I've built a bunch of Python tools, and one of the areas we're studying now is flaky test cases in Python projects."

Gregory Kapfhammer introduces himself and his research focus, establishing his expertise in software testing and the practical challenges faced in Python development. His mention of "flaky test cases" points to a specific, common problem in software engineering that impacts reliability and development efficiency.

"I'm a long-time Python core developer, although not as long as one of the other guests on this podcast. I worked at Google for 17 years; for the last year or so I've worked at Meta. In both cases I work on Python itself within the company and just deploying it internally."

Thomas Wouters provides context on his extensive experience with Python, particularly within large tech companies like Google and Meta, focusing on internal deployment and development of the language itself. This highlights his deep understanding of Python's practical application and evolution in enterprise environments.

"I am a data scientist and developer advocate at JetBrains working on PyCharm, and I've been a data scientist for around 10 years. Prior to that I was actually a clinical psychologist; that was my training, my PhD, but I abandoned academia for greener pastures, let's put it that way."

Jodie Burchell shares her background, bridging data science and developer advocacy with a unique transition from clinical psychology. This diverse experience suggests a perspective that values both technical depth and effective communication, likely influencing her views on tools and community engagement.

"I've been at Microsoft for 10 years. I started working on AI R&D for Python developers. I also keep WASI running for Python here and do a lot of internal consulting for teams outside. I am actually the shortest-running core developer on this call, amazingly, even though I've been doing it for 22 years."

Brett Cannon details his decade at Microsoft, his work on AI R&D for Python developers, his role keeping Python's WASI (WebAssembly System Interface) support running, and his internal consulting for other teams. His self-deprecating remark about being the "shortest running core developer" despite 22 years of experience humorously underscores the depth of expertise present among the guests.

"I've been a core developer for a long time since 1994 and I've been, you know, in the early days I did tons of stuff for python.org, worked with Guido at CWI and, you know, we moved everything from the mailing, you know, the postmaster stuff and the version control systems back in the day, websites, all that kind of stuff."

Barry Warsaw recounts his foundational contributions to the Python ecosystem, dating back to 1994, and his work on critical infrastructure like python.org and version control systems. This establishes him as a long-standing figure whose early involvement shaped the very fabric of Python's online presence and development processes.

"I want to mention AI like wow what a, you know, big surprise. So to kind of give context to where I'm coming from, I've been working in NLP for a long time. I like to say I was working on LLMs before they were cool so sort of playing around with the very first releases from Google in like 2019 incorporating that into search."

Jodie Burchell identifies AI as a major topic for 2025, framing it with a personal history in Natural Language Processing (NLP) and early work with Large Language Models (LLMs). This provides a grounded perspective on the AI discourse, suggesting a focus on realistic applications rather than just hype.

"I actually originally said we should talk about AI, but Jodie had a way better pitch for it than I did, because my internal pitch was literally: AI, do I actually have to write a paragraph explaining why? Then Jodie actually did write the paragraph, so she did a much better job than I did."

Brett Cannon humorously acknowledges Jodie's superior framing of the AI topic, admitting his own initial pitch was less developed. This interaction highlights the collaborative nature of the discussion and the value placed on clear, compelling communication.

"I want to talk about Python ecosystem and funding. When I talk to people about Python and I talk about how it's open source, they're like, oh right, it's open source, that means I can download it for free and from their perspective that's sort of where it starts and ends."

Reuven Lerner introduces the critical issue of funding for the Python ecosystem, contrasting the common perception of open source as "free" with the reality of the resources and support required for its maintenance and growth. He points out a widespread lack of awareness regarding the financial underpinnings of open-source projects.

"I was just going to say, for years teaching Python, how do we get it installed? At first it surprised me how difficult it was for people, because, like, oh come on, we just got Python, what's so hard about this? But it turns out it's a really big barrier to entry for newcomers. I'm very happy that JupyterLite has now solved its problems with input, and that's huge. But until now I hadn't really thought about starting with uv, because it's cross-platform. If I say to people in the first 10 minutes of class, install uv for your platform, and then say uv init in your project, bam, you're done. It just works, and it works cross-platform."

Reuven Lerner expresses enthusiasm for uv as a solution to the long-standing challenge of installing Python for beginners. He highlights how uv simplifies setup, making Python more accessible and removing a significant barrier to entry for newcomers.


Resources

External Resources

Articles & Papers

- "The Atlantic article" - Mentioned in relation to the story of what was happening behind the scenes with GPT-5.

- "Microsoft research paper" - Mentioned in relation to the "sparks of AGI" (Artificial General Intelligence) claims and the expectation, fueled by OpenAI, that GPT-5 would be the AGI model.

People

- Barry Warsaw - Mentioned as a Python core developer, former Steering Council member, and current employee at NVIDIA.

- Brett Cannon - Mentioned as a Python core developer and former developer manager for the Python experience in VS Code.

- Gregory Kapfhammer - Mentioned as an associate professor of computer and information science, researcher in software engineering and testing, and host of the Software Engineering Radio podcast.

- Jodie Burchell - Mentioned as a data scientist and developer advocate at JetBrains, working on PyCharm.

- Reuven Lerner - Mentioned as a freelance Python and Pandas trainer.

- Thomas Wouters - Mentioned as a long-time Python core developer, a former Google employee now working at Meta, a former PSF board member, and a former Steering Council member.

- Michael Kennedy - Mentioned as the host of Talk Python To Me, a PSF fellow, and active on social media.

- Anthony Shaw - Mentioned as a "famous Python quotationist" and a frequent podcast guest.

- Guido van Rossum - Mentioned in relation to moving Python infrastructure and his role in the PEP process.

- Ed Zitron - Mentioned as a journalist who writes about the state of the AI industry.

- David Halter - Mentioned as the creator of Jedi and Zuban.

- Pablo - Mentioned as a Steering Council member who received vitriol for proposing Lazy Imports (PEP 810).

- Benedict - Mentioned as a student who collaborated on a tool to generate Python programs that cause type checkers to disagree.

- Lauren - Mentioned as a full-time employee at the PSF handling marketing.

- Warren - Mentioned as a person who does a great job at the PSF.

- Eva - Mentioned as a former employee of the PSF.

- Deb - Mentioned as a current employee of the PSF.

- Paul Everett - Mentioned in relation to the Python documentary and his fashion.

- Chuck - Mentioned as the person who suggested using UV for the Humble Data community.

Organizations & Institutions

- OpenAI - Mentioned in relation to the expectation that GPT-5 would be an AGI model.

- IEEE Computer Society - Mentioned as the sponsor of the Software Engineering Radio podcast.

- PSF (Python Software Foundation) - Mentioned in relation to funding for conferences, workshops, and community activities, and its role in supporting Python development.

- NSF (National Science Foundation) - Mentioned in relation to a government grant that the PSF turned down.

- JetBrains - Mentioned as the employer of Jodie Burchell and a sponsor of the PSF.

- Microsoft - Mentioned as the employer of Brett Cannon.

- Google - Mentioned in relation to early releases of LLMs and as the company behind TensorFlow and Keras.

- Meta - Mentioned as the employer of Thomas Wouters and as the company behind PyTorch.

- PyTorch - Mentioned as a major framework backed by Meta.

- TensorFlow - Mentioned as a major framework backed by Google.

- Keras - Mentioned as a major framework backed by Google.

- Humble Data - Mentioned as a beginners' data science community.

- Astral - Mentioned as the team behind the type checker "Ty".

- NumFOCUS - Mentioned as an organization with a larger budget than the PSF, indicating a possible model for funding.

- Fastly - Mentioned as a consistent and generous sponsor of PyPI.

- EuroPython Society - Mentioned as the organization behind EuroPython.

- Ohio State University - Mentioned as a campus that has hosted the PyOhio conference.

- Cleveland State University - Mentioned as a campus that has hosted the PyOhio conference.

Tools & Software

- Sentry AI Code Review - Mentioned as a tool that uses historical error and performance information to find and flag bugs in PRs.

- PyCharm - Mentioned as an IDE developed by JetBrains.

- VS Code - Mentioned in relation to its Python experience, which Brett Cannon formerly managed.

- Hatch - Mentioned as a tool for running Python code.

- PDM - Mentioned as a tool for running Python code.

- Poetry - Mentioned as a tool for running Python code.

- UV - Mentioned as a tool for running Python code, and for its cross-platform capabilities and ease of installation.

- Pydantic AI - Mentioned as a tool for building AI agents.

- mypy - Mentioned as a tool for type checking Python code.

- Pyright - Mentioned as a type checker that also runs as a language server (LSP).

- Pylance - Mentioned as a language server (LSP) that provides type checking, built on Pyright.

- Pyrefly - Mentioned as a Rust-based type checker from Meta.

- Ty - Mentioned as a Rust-based type checker from Astral.

- Zuban - Mentioned as a Rust-based type checker from David Halter.

- Jedi - Mentioned as a system created by David Halter that helps with LSP tasks.

- GitLab - Mentioned as an example of documentation that can be searched using LLMs.

- Pydantic - Mentioned in relation to AI and type checking.

- Claude Sonnet 4 - Mentioned as an example of a powerful LLM.

- Anthropic - Mentioned in relation to Claude models.

- Open Code - Mentioned in relation to Anthropic models.

- Python Launcher - Mentioned as a tool that could potentially be enhanced to support more features.

- JupyterLite - Mentioned as having solved its problems with input.

- Docker - Mentioned as a technology that beginners do not need to be taught when using UV.

- concurrent.futures - Mentioned as a unified interface that supports various concurrency methods.
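The unified approach of `concurrent.futures` comes from its common `Executor` interface: the same submission code can target threads or processes by swapping the pool. A minimal sketch, using a deliberately naive CPU-bound prime counter as the workload; the function names are illustrative.

```python
from concurrent.futures import Executor, ProcessPoolExecutor, ThreadPoolExecutor


def count_primes(limit: int) -> int:
    """CPU-bound work: count primes below `limit` by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n**0.5) + 1)):
            count += 1
    return count


def run_batch(executor: Executor, limits: list[int]) -> list[int]:
    # Works with any Executor; only the pool type passed in changes.
    return list(executor.map(count_primes, limits))


if __name__ == "__main__":
    limits = [10_000, 20_000, 30_000]
    with ThreadPoolExecutor(max_workers=4) as pool:
        print("threads:  ", run_batch(pool, limits))
    # The __main__ guard matters here: process pools may re-import this module.
    with ProcessPoolExecutor(max_workers=4) as pool:
        print("processes:", run_batch(pool, limits))
```

On a standard build, only the process pool runs this CPU-bound work in parallel; on a free-threaded build the thread pool can too, without the pickling and startup costs of processes. Python 3.14 also adds `InterpreterPoolExecutor`, which brings subinterpreters behind the same interface.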

Websites & Online Resources

- TalkPython.fm - Mentioned as the website for past episodes of the podcast.

- TalkPython.fm/YouTube - Mentioned as the place to watch live streams of podcast recordings.

- Discuss.python.org (DPO) - Mentioned as the platform for PEP discussions.

Podcasts & Audio

- Talk Python To Me - Mentioned as the podcast hosting the episode.

- Software Engineering Radio - Mentioned as a podcast hosted by Gregory Kapfhammer.

Other Resources

- GIL (Global Interpreter Lock) - Mentioned as a feature whose days are numbered, indicating its removal from Python.

- Packaging - Mentioned as an area getting sharper tools.

- Type Checkers - Mentioned as multiplying and becoming more sophisticated.

- AI (Artificial Intelligence) - Mentioned as a significant topic, with discussions on its hype, potential, and economic impact.

- LLMs (Large Language Models) - Mentioned in relation to AI and their capabilities.

- AGI (Artificial General Intelligence) - Mentioned in relation to the expectations for GPT-5.

- Scaling Laws - Mentioned in relation to the performance of AI models.

- Flaky Test Cases - Mentioned as an area of research for Gregory Kapfhammer.

- Python Core Developer - Mentioned as a role held by several guests.

- PSF Fellow - Mentioned as a title held by Michael Kennedy.

- Mastodon, Blue Sky, X - Mentioned as social media platforms where Michael Kennedy can be found.

- PEP 723 (Inline Script Metadata) - Mentioned as a standard that helps make running Python code easier.

- PEP 810 (Lazy Imports) - Mentioned as a change to Python that involves new syntax.

- PEP 572 (The Walrus Operator) - Mentioned as a syntactic change that generated significant discussion and controversy.

- Free Threading - Mentioned as a major development for Python, aiming to remove the GIL.

- Sub Interpreters - Mentioned as a concurrency option alongside threading and multiprocessing.

- Multiprocessing - Mentioned as a concurrency option.

- Async IO - Mentioned as a library for concurrent programming.

- Type Server Protocol (TSP) - Mentioned as a protocol being defined to allow type servers to feed type information to a higher-level LSP.

- Python Documentary - Mentioned as a resource that highlighted the special nature of the Python community.

- PyOhio Conference - Mentioned as a free, local Python conference.

- S-curve (sigmoid) - Mentioned as a potential model for AI development that levels off, contrasting with exponential growth.

- Agentic Coding Tools - Mentioned as a type of tool that is likely to remain even if an AI bubble bursts.

- Open Weight Models - Mentioned as a part of academic research in AI.

- Abstract Syntax Tree (AST) - Mentioned as a concept that LLMs can help refresh memory on.

- Bash - Mentioned as a scripting language that may become less necessary with AI assistance.

- Virtual Environments - Mentioned as a concept that UV abstracts away.

- Lazy Imports - Mentioned as a new development in Python that has been accepted.

- Python 3.14 - Mentioned as the version in which free threading is officially supported.

- Extension Modules - Mentioned as a component that needs to be updated for free threading.

- Thread Safety - Mentioned in the context of free threading and its impact on Python code.

- Concurrency - Mentioned as a broad topic with various approaches like threading, sub interpreters, multiprocessing, and async IO.

- GUI Frameworks - Mentioned as an area that often utilizes threads.

- PyTorch DataLoader - Mentioned as an example of significant improvement through multithreading.

- Type Checking - Mentioned as a significant trend in 2025, with new tools and protocols emerging.

- Language Server Protocols (LSP) - Mentioned as a key technology for improving developer experience with type checking.

- Type Hints - Mentioned as a feature that helps AI understand code and provides a safety net for developers.

- Pydantic AI - Mentioned in relation to interoperability with type checkers like Pyrefly.

- Python Ecosystem and Funding - Mentioned as a critical issue facing the Python community.

- PEP Process - Mentioned as a difficult and emotionally draining process for authoring changes to Python.

- PEP Authoring - Mentioned as a time-consuming and often toxic activity.

- PEP Discussions on Discuss.python.org - Mentioned as a platform where discussions can be difficult.
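PEP 572's assignment expression (the walrus operator), listed above as one of the most contentious syntax changes, binds a name inside an expression. A short illustration using hypothetical log lines and helper names:

```python
import io
import re

# Hypothetical log format: "LEVEL message".
ERROR_PATTERN = re.compile(r"^ERROR (?P<message>.+)$")


def error_messages(lines: list[str]) -> list[str]:
    # Bind the match object with := and test it in the same comprehension clause.
    return [m["message"] for line in lines if (m := ERROR_PATTERN.match(line))]


def read_chunks(stream: io.StringIO, size: int = 4) -> list[str]:
    # Loop until read() returns an empty string, with no separate priming read.
    chunks = []
    while chunk := stream.read(size):
        chunks.append(chunk)
    return chunks


if __name__ == "__main__":
    print(error_messages(["INFO server started", "ERROR disk full", "ERROR timeout"]))
    # ['disk full', 'timeout']
    print(read_chunks(io.StringIO("abcdefghij")))
    # ['abcd', 'efgh', 'ij']
```

Both patterns avoid computing a value twice or splitting a test from the assignment it depends on, which was the main argument made for the PEP.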