AI Economy Stabilizes: Model Shifts Drive Application-Layer Innovation

TL;DR

- The AI economy has stabilized into distinct model, application, and infrastructure layers, giving AI-native companies a clearer playbook and returning the difficulty of finding startup ideas to normal levels.

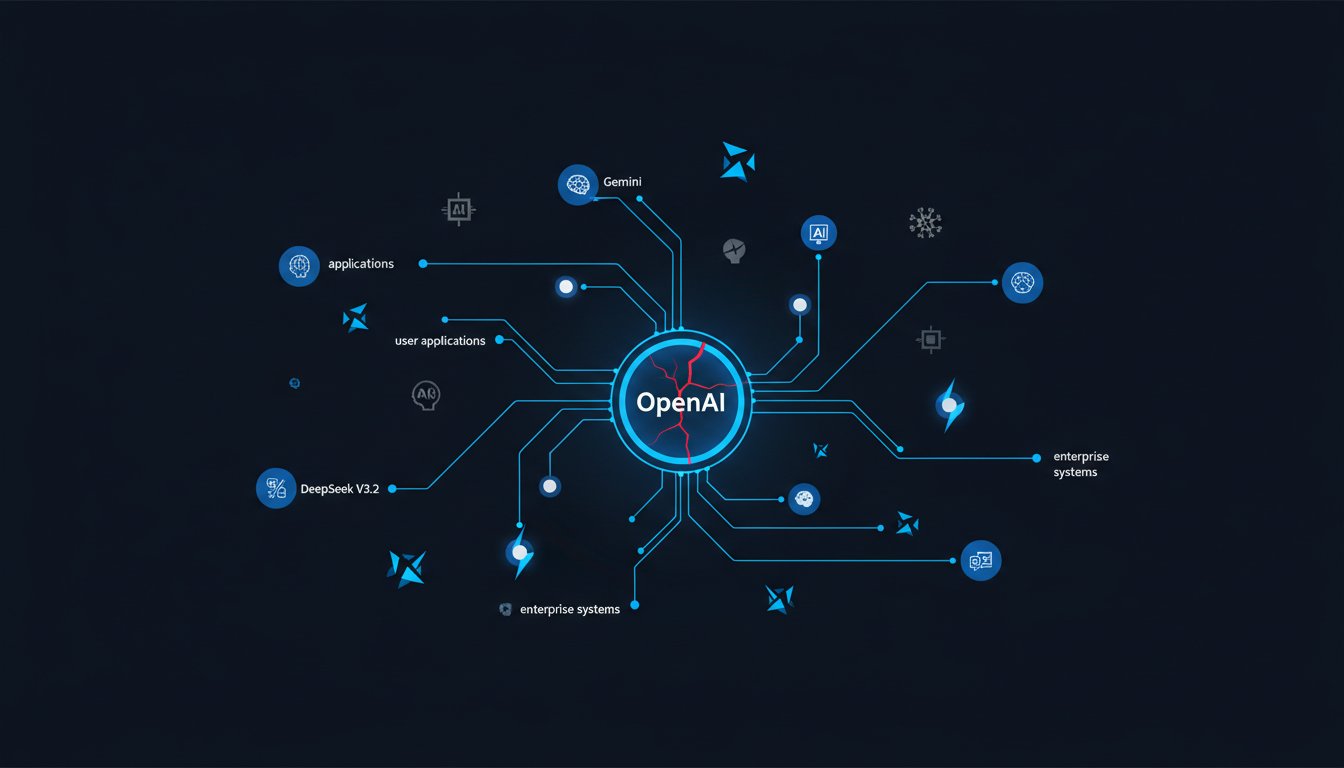

- Anthropic has surpassed OpenAI as the preferred LLM for YC founders, driven by superior performance in coding tools and agents, indicating a shift in model dominance.

- Gemini is rapidly climbing in popularity, now at 23% usage among YC founders, impressing with its reasoning capabilities and real-time information access via Google's index.

- The AI revolution is entering a "deployment phase" characterized by abundance and proliferation, shifting value creation from infrastructure build-out to application-layer startups.

- Despite initial hype, AI is not creating a "bubble" for startups; instead, abundant compute and commoditized models create opportunities for application-layer innovation.

- Companies are increasingly adopting an orchestration layer approach, abstracting and swapping between multiple LLMs to leverage the best model for specific tasks.

- The trend of companies achieving high ARR with significantly fewer employees is continuing, driven by AI's efficiency gains, but customer expectations are also rising, necessitating continued hiring.

Deep Dive

The AI economy stabilized in 2025, shifting from a chaotic, rapidly evolving landscape to a more predictable structure with distinct layers for models, applications, and infrastructure. This stabilization, driven by incremental model improvements rather than disruptive breakthroughs, has returned the difficulty of finding startup ideas to normal levels. The primary surprise is the changing of the guard in model preference: Anthropic now leads OpenAI among Y Combinator applicants, suggesting a shift in perceived model utility and developer familiarity.

The dominance of Anthropic’s models is partly attributed to their strong performance in coding-related tasks, a significant area for startups. This success may create a "bleed-through effect," where founders familiar with Claude for personal coding tasks are more inclined to use it for their applications, regardless of whether those applications involve coding. Gemini is also climbing in popularity, with its reasoning capabilities and integration with real-time information sources making it a preferred tool for tasks like replacing web searches. While OpenAI's ChatGPT retains a loyal user base due to its strong memory and personalized interaction, the overall trend indicates increasing competition and choice among foundational AI models.

This competitive environment at the model layer is creating an opportunity for startups to build an "orchestration layer," abstracting away from specific models and allowing for dynamic swapping or the use of specialized models for different tasks. This approach allows companies to leverage the best of multiple AI providers, such as using Gemini for context engineering and then OpenAI for execution, adapting as new models emerge. The broader pattern mirrors historical technological revolutions, like the internet boom, where initial heavy infrastructure investment (the "installation phase") created a foundation for a subsequent "deployment phase" characterized by widespread abundance and innovation at the application layer.
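The orchestration idea described above can be sketched in a few lines. This is a minimal illustration, not any company's actual design: the `Orchestrator` class, the task names (`context`, `execute`, `code`), and the stub model functions are all hypothetical, and the stubs stand in for real provider SDK calls so the example runs without network access.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Assumption: a "model" is any callable that takes a prompt and returns text.
ModelFn = Callable[[str], str]


@dataclass
class Orchestrator:
    """Routes each task type to a preferred model, with ordered fallbacks."""
    models: Dict[str, ModelFn]
    routes: Dict[str, List[str]]  # task type -> provider names, in priority order
    default_route: List[str] = field(default_factory=list)

    def run(self, task_type: str, prompt: str) -> str:
        errors = []
        for name in self.routes.get(task_type, self.default_route):
            try:
                return self.models[name](prompt)
            except Exception as exc:  # outage, rate limit, unknown provider, ...
                errors.append(f"{name}: {exc}")
        raise RuntimeError(f"no provider succeeded for {task_type!r}: {errors}")


# Hypothetical stubs standing in for real provider clients.
def fake_gemini(prompt: str) -> str:
    return f"[gemini] context for: {prompt}"


def fake_openai(prompt: str) -> str:
    return f"[openai] result for: {prompt}"


def flaky_claude(prompt: str) -> str:
    raise TimeoutError("simulated outage")


orchestrator = Orchestrator(
    models={"gemini": fake_gemini, "openai": fake_openai, "claude": flaky_claude},
    routes={"context": ["gemini"], "execute": ["openai"], "code": ["claude", "openai"]},
    default_route=["openai"],
)

context = orchestrator.run("context", "summarize the ticket")
answer = orchestrator.run("execute", context)
code_answer = orchestrator.run("code", "write a parser")  # falls back to the second provider
```

Because application code only names a task type, swapping the preferred provider for a task is a one-line change to `routes`, which is the flexibility the orchestration approach is meant to buy.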

The infrastructure build-out, particularly for data centers and power generation, is facing significant constraints, prompting unconventional proposals such as data centers in space and fusion energy. The intense capital expenditure by large tech companies may look like a bubble to some, but it is seen as creating a glut of compute that directly benefits startups. This abundance lowers costs and accelerates innovation, making it an opportune time for founders to build application-layer companies, much as YouTube emerged on top of the abundant bandwidth left by the telecom build-out.

The trend of startups achieving significant revenue with lean teams is evolving. While early AI-native companies focused on maximizing revenue with minimal hiring, the second wave of companies, facing rising customer expectations and intense competition, is now expanding teams after the Series A. AI improves efficiency and shortens the time it takes to ship, but demand for sophisticated applications and strong execution means human talent remains a critical bottleneck. The era of the solo founder running a trillion-dollar company is still some way off.

Action Items

- Audit LLM API usage: Track Anthropic, OpenAI, and Gemini adoption rates for the past 3 batches to inform future model selection strategies.

- Create LLM evaluation framework: Define criteria for assessing model performance across 5 key use cases (e.g., coding, reasoning, information retrieval) to guide technology choices.

- Analyze model switching costs: For 3-5 current AI startups, quantify the effort and impact of swapping LLM providers to understand long-term strategic flexibility.

- Draft playbook for AI-native company building: Outline 5 essential stages, from model selection to application development, to standardize successful startup creation.

- Measure domain-specific model performance: For 3 healthcare AI startups, compare their custom models against general-purpose LLMs (e.g., GPT-4) on key benchmarks.
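As a starting point for the evaluation-framework item above, the sketch below scores several models on a handful of use cases and picks the best one per use case. Everything here is illustrative: the function names `evaluate` and `best_model_per_use_case`, the two toy test cases, and the stub models are assumptions, and a real framework would call actual provider APIs and use far larger case sets.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

ModelFn = Callable[[str], str]
# Each case: (use_case, prompt, checker returning True when the output is acceptable).
Case = Tuple[str, str, Callable[[str], bool]]


def evaluate(models: Dict[str, ModelFn], cases: List[Case]) -> Dict[str, Dict[str, float]]:
    """Return the pass rate per use case and model as scores[use_case][model]."""
    passed = defaultdict(lambda: defaultdict(int))
    total = defaultdict(int)
    for use_case, prompt, check in cases:
        total[use_case] += 1
        for name, model in models.items():
            if check(model(prompt)):
                passed[use_case][name] += 1
    return {
        uc: {name: passed[uc][name] / total[uc] for name in models}
        for uc in total
    }


def best_model_per_use_case(scores: Dict[str, Dict[str, float]]) -> Dict[str, str]:
    """Pick the highest-scoring model for each use case."""
    return {uc: max(by_model, key=by_model.get) for uc, by_model in scores.items()}


# Hypothetical stub models; real ones would wrap provider SDK calls.
def model_a(prompt: str) -> str:
    return "4" if "2 + 2" in prompt else "I am not sure"


def model_b(prompt: str) -> str:
    return "def add(a, b): return a + b" if "function" in prompt else "5"


cases: List[Case] = [
    ("reasoning", "What is 2 + 2?", lambda out: out.strip() == "4"),
    ("coding", "Write a Python function that adds two numbers.", lambda out: "def " in out),
]

scores = evaluate({"model_a": model_a, "model_b": model_b}, cases)
best = best_model_per_use_case(scores)
```

Re-running the same case set whenever a provider ships a new model also gives a rough, repeatable signal for the switching-cost question raised in the action items.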

Key Quotes

"i think perhaps the thing that most surprised me is the extent to which i feel like the ai economy has stabilized we have like the model layer companies and the application layer companies and the infrastructure layer economy and it seems like everyone is going to make a lot of money and there's kind of like a relative playbook for how to build an ai native company on top of the models"

The speaker expresses surprise at the stabilization of the AI economy, noting the emergence of distinct layers: model, application, and infrastructure. This suggests a maturing market where a clearer path for building AI-native companies is becoming established, indicating a shift from pure chaos to a more predictable landscape.

"one of the shocking things is that for the longest time open ai was the clear winner for all of last year last couple of batches though that number has been coming down and shockingly in this batch the number one api is actually anthropic came out a bit more than open ai which who would have thought"

This quote highlights a significant shift in the AI landscape, specifically concerning the preferred LLM providers among founders. The speaker points out that Anthropic has surpassed OpenAI in popularity for API usage among new companies, a development that was unexpected given OpenAI's previous dominance.

"i think there's a couple of things in terms of the tech stack selection i think as we've seen this year there's been a lot of wins in terms of code coding tools that are getting built out there and coding agents or so many categories that this isn't that being a bigger problem space that actually is creating a lot of value and it turns out that model that performs the best at it is actually the models from anthropic"

The speaker attributes the rise of Anthropic's models to their strong performance in coding-related tasks and tools. This suggests that the practical utility and effectiveness of Anthropic's models in a high-value area like coding have driven their adoption by founders.

"i mean what are your guys's tools of choice i haven't switched off of chat gpt i mean i find the memory very sticky it knows me it knows my personality it knows the things that i think about and so i'll use perplexity for fast web searches or things that um you know i know was like a research task because i think chat gpt is still like a little bit of a step behind for searching the web i don't know i think memory is turning into an actual moat for like that consumer experience"

This speaker explains their continued reliance on ChatGPT, emphasizing its "sticky memory" and personalized interaction as a key differentiator. They contrast this with other tools, suggesting that ChatGPT's ability to retain context and user history is becoming a significant competitive advantage in the consumer AI experience.

"i guess what's funny is that you do need to have a post training infrastructure you know we've also had yc companies where uh they had something that beat open ai uh you know gpt 3 5 and they were doing fine tuning with rl but then uh yeah gpt 4 5 and then 5 1 came out and uh you know basically blew their fine tuning out of the water you have to keep going"

The speaker points out the challenge of maintaining an advantage with fine-tuned models, as newer, more powerful base models can quickly render previous optimizations obsolete. This highlights the continuous effort required in the AI development cycle, where ongoing adaptation and improvement are necessary to keep pace with model advancements.

"i think that's exactly right it's like people are asking this question like is it a bubble that's maybe a question that's really relevant if you're like the equivalent of like comcast like if you're nvidia that's a very relevant question like oh are people overbuilding gpu capacity but like the college students they're not comcast they're actually like youtube if you're doing a startup in in your dorm room it's like the ai equivalent of like youtube and like doesn't really matter that much maybe nvidia's stock will go down next year i don't know but like even if it does that doesn't actually mean that it's like a bad time to be working on an ai startup"

The speaker addresses the "AI bubble" concern by distinguishing between infrastructure providers and application builders. They argue that for startups operating at the application layer, the potential overbuilding of infrastructure by companies like Nvidia is not a deterrent but rather an enabler, akin to the abundant telecom bandwidth that fueled the growth of internet companies like YouTube.

Resources

External Resources

Books

- "The Age of AI" by Henry Kissinger, Eric Schmidt, Daniel Huttenlocher - Mentioned in relation to the potential impact of AI on society and the economy.

- "The Second Machine Age" by Erik Brynjolfsson and Andrew McAfee - Mentioned in relation to the economic implications of technological advancements.

- "The AI Revolution: The Road to Superintelligence" by Tim Urban - Mentioned in relation to the potential trajectory of AI development.

- "The Lean Startup" by Eric Ries - Mentioned in relation to startup methodologies and building companies.

- "The Innovator's Dilemma" by Clayton Christensen - Mentioned in relation to disruptive innovation and market dynamics.

- "The Singularity is Near" by Ray Kurzweil - Mentioned in relation to the concept of technological singularity and exponential growth.

- "The Structure of Scientific Revolutions" by Thomas Kuhn - Mentioned in relation to paradigm shifts and how scientific understanding evolves.

- "Technological Revolutions and Financial Capital" by Carlota Perez - Mentioned in relation to technological revolutions and their phases of installation and deployment.

Articles & Papers

- "AI 2027" report - Mentioned as a doomer piece that predicted societal collapse, later revised.

- MIT report on enterprise AI projects - Mentioned as indicating a high failure rate for enterprise AI initiatives.

People

- Sam Altman - Co-founder of OpenAI, mentioned in relation to the development of AI models.

- Ilya Sutskever - Co-founder of OpenAI, mentioned in relation to the development of AI models.

- Tom Brown - Anthropic co-founder, mentioned for a conversation about Anthropic intentionally making coding its north star.

- Varun Mohan - Mentioned for releasing Antigravity at Google and his involvement with "vibe coding."

- Sundar Pichai - CEO of Google, mentioned in relation to space data centers and "vibe coding."

- Elon Musk - Mentioned in relation to space data centers.

- Mark Zuckerberg - Mentioned for comments on Meta's capital expenditure on AI infrastructure.

Organizations & Institutions

- Y Combinator (YC) - Host of the podcast and incubator for startups, mentioned for its selection cycles and founder discussions.

- OpenAI - AI research company, mentioned as a dominant LLM provider that has seen declining usage in favor of Anthropic.

- Anthropic - AI safety and research company, mentioned as the leading LLM provider in the Winter 2026 YC batch.

- Google - Technology company, mentioned for its involvement in space data centers and its AI models.

- Nvidia - Technology company, mentioned in relation to its stock performance and the competition in the GPU market.

- AMD - Technology company, mentioned as a competitor to Nvidia in the GPU market.

- Intel - Technology company, mentioned in the context of competition in the chip industry.

- Microsoft - Technology company, mentioned in relation to its AI investments.

- Meta - Technology company, mentioned for its significant capital expenditure in AI infrastructure.

- Replit - Coding platform, mentioned as a company winning in the "vibe coding" category.

- Emergent - Mentioned as a company winning in the "vibe coding" category.

- Boom Supersonic - Mentioned for its quest to create enough power for AI data centers using jet engines.

- Helion - Mentioned as a company working on fusion energy to power data centers.

- Zephyr Fusion - Mentioned as a space fusion company.

- Legora - Mentioned as a company competing with Harvey.

- Giga - Mentioned as a company competing with Sierra.

- Sierra - Mentioned as a company competing with Giga.

- Harvey - Mentioned as an early AI-native company that may have wasted capital on fine-tuning.

- OpenEvidence - Mentioned alongside Harvey in the context of AI-native companies.

Tools & Software

- Claude - LLM from Anthropic, mentioned as the leading API choice in the Winter 2026 YC batch and for its performance in coding tasks.

- GPT-4 - LLM from OpenAI, mentioned as a benchmark for comparison.

- GPT-3.5 - LLM from OpenAI, mentioned as a benchmark for comparison.

- Gemini - LLM from Google, mentioned for its climbing rankings and impressive reasoning capabilities.

- Perplexity - AI-powered search engine, mentioned for its speed but occasional inaccuracy compared to Gemini.

- ChatGPT - LLM from OpenAI, mentioned for its sticky memory and personalized user experience.

- TPUs (Tensor Processing Units) - Mentioned as an alternative to GPUs for AI computation.

Websites & Online Resources

- Pro Football Focus (PFF) - Mentioned as a data source for player grading.

- Lightcone - Mentioned as the episode series name.

- Twitter - Mentioned as a platform where negative comments about an AI bubble are discussed.

- Google Index - Mentioned in relation to Gemini's ability to use real-time information.

Other Resources

- AI Economy - Mentioned as having stabilized with distinct layers for models, applications, and infrastructure.

- Model Layer - Refers to the companies providing foundational AI models.

- Application Layer - Refers to companies building applications on top of AI models.

- Infrastructure Layer - Refers to companies providing the hardware and services for AI.

- Tech Stack - The combination of technologies used by a company.

- LLM (Large Language Model) - A type of AI model.

- API (Application Programming Interface) - A way for different software systems to communicate.

- Vibe Coding - A concept or behavior related to coding assistance, discussed as a growing category.

- Scaling Laws - Principles governing the relationship between model size, compute, and performance.

- Fast Takeoff Argument - The idea that AI development will accelerate rapidly.

- Log Linear Scaling - A type of growth that is linear on a logarithmic scale, implying slower acceleration than exponential growth.

- Dark Fiber - Unused fiber optic cable infrastructure.

- CEQA (California Environmental Quality Act) - Mentioned as a regulation that can hinder innovation and building.

- Fusion Energy - A potential source of abundant energy for data centers.

- Tokamaks - A type of fusion reactor.

- Open Source Models - AI models that are publicly available for use and modification.

- Fine-tuning - The process of adapting a pre-trained AI model to a specific task or dataset.

- RL (Reinforcement Learning) - A type of machine learning where an agent learns by trial and error.

- Domain Specific Models - AI models trained for a particular industry or task.

- 8 Billion Parameters - A measure of the size of an AI model.

- Post-Training Infrastructure - Systems needed to manage and deploy AI models after initial training.

- Nano Banana - Google's image generation model, mentioned in relation to media generation.

- Antigravity - Mentioned in relation to Varun Mohan's release at Google.

- AI Bubble - The concern that investment in AI is excessive and unsustainable.

- Telecom Bubble - A historical period of overinvestment in telecommunications companies.

- Compute - The processing power required for AI operations.

- GPU (Graphics Processing Unit) - Hardware commonly used for AI computations.

- ARR (Annual Recurring Revenue) - A metric for subscription-based businesses.

- Capital Expenditure (CapEx) - Investment in physical assets.

- Capital Stack - The combination of debt and equity used to finance a company.

- AI Native Companies - Companies built from the ground up with AI at their core.

- LLM Arena - A concept for comparing the performance of different LLMs.

- Orchestration Layer - A system that manages and coordinates multiple AI models.

- Vertical AI Agent - An AI agent designed for a specific industry.

- Gigawatt Data Centers - Data centers requiring massive amounts of power.

- AI Labs - Research institutions focused on artificial intelligence.

- Non-profit - An organization not focused on making profit.

- ARC Prize - A competition built around the ARC-AGI benchmark for artificial general intelligence.

- Doomer Piece - A report or analysis predicting negative outcomes.

- Enterprise AI Projects - AI initiatives within established companies.

- SB 1047 - Mentioned in relation to proposed regulations on AI compute.

- Non-trivial double-digit percentage - A significant portion of capital.
