AI's Strategic Pivot: From Model Superiority to User Experience

TL;DR

- The current AI model landscape offers diminishing differentiation, as leading general-purpose models perform nearly identically, preventing sustainable competitive advantage through model superiority alone.

- Competing in AI requires strategic choices: either invest heavily in infrastructure and hardware, or focus on building differentiated user experiences and applications above the foundational models.

- Building differentiated AI applications is challenging, as it involves competing with the entire tech industry to invent novel use cases, akin to trying to build the best photo-sharing app without a clear differentiator.

- The "features approach" of integrating AI into existing products, like improved search or voice assistants, is a viable strategy currently pursued by major tech companies like Google and Meta.

- The core challenge for AI companies is identifying and serving the actual user needs, as current development often focuses on obscure benchmarks rather than common, practical applications like dating advice or data entry.

- The AI industry faces a "dogfooding paradox" where developers using models extensively may not represent typical users, leading to a disconnect between advanced capabilities and everyday utility.

- The current AI paradigm may not fundamentally alter work the way the internet or mobile did, and differentiation may lie in integrating AI as features rather than relying solely on raw chatbot interfaces.

Deep Dive

The core challenge for OpenAI and similar AI companies is that while their large language models exhibit impressive capabilities on obscure benchmarks, they lack a clear, defensible strategy for competing in a market where foundational models are becoming commoditized. This necessitates a pivot away from differentiating the raw model itself toward building sustainable advantages either by integrating AI as features into existing products or by creating entirely new, non-chatbot user experiences, both of which present significant strategic hurdles.

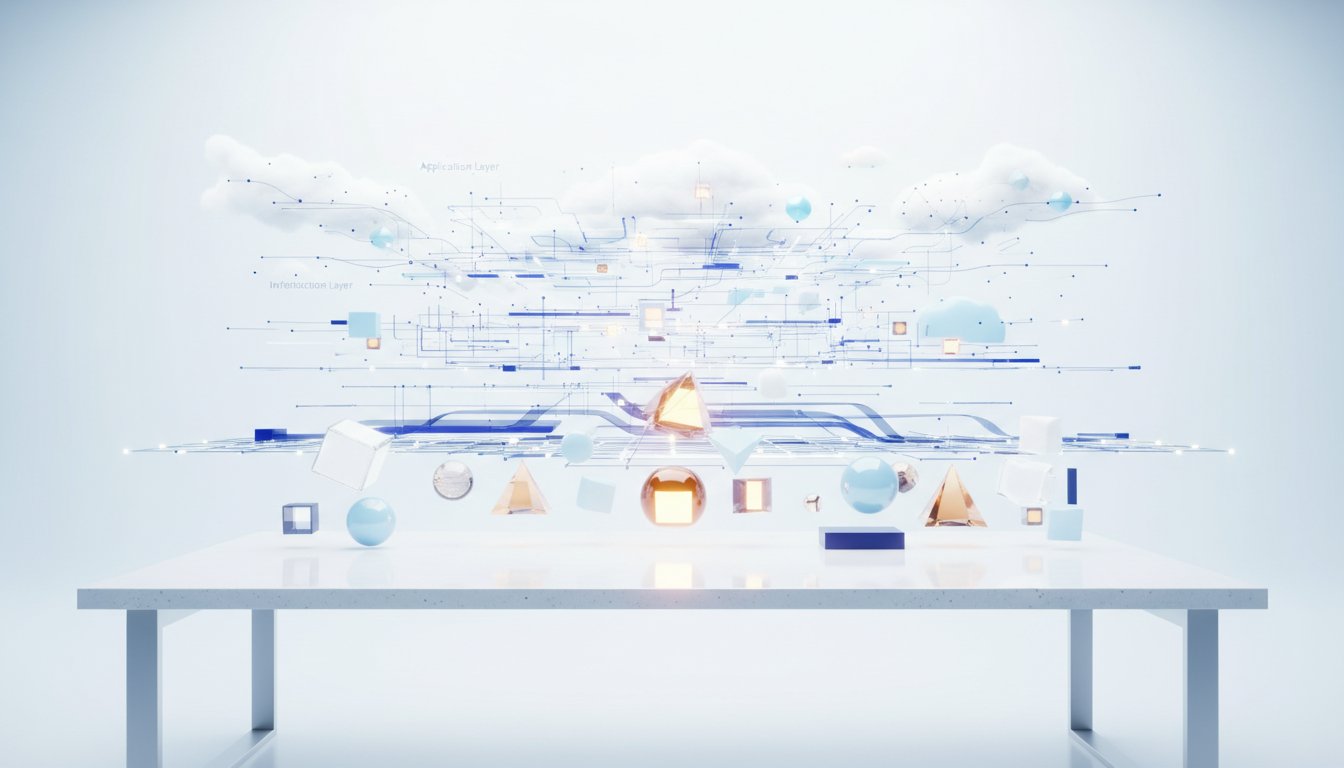

The competitive landscape for AI is defined by a lack of network effects in the models themselves, and from week to week the leading models are largely indistinguishable to most users. Together, these make it difficult to build a defensible moat on model superiority alone. Consequently, companies must look "down the stack" to infrastructure or "up the stack" to user experiences. However, competing on infrastructure, such as data centers and chips, is extremely capital-intensive and unlikely to yield significant cost or performance advantages over established cloud providers or competitors making similar investments. This path offers limited differentiation unless it translates into a benefit users can directly perceive, such as faster response times, and that outcome is not guaranteed.

The more promising, yet still challenging, approach is to move "up the stack" by building differentiated user experiences. This can take two primary forms. The first is integrating AI as features into existing products, such as better search results, voice assistants, or recommendation engines, where incumbents like Google, Meta, and Apple are already strong. The second is creating entirely new applications powered by AI, which means competing with the entire tech industry to invent novel consumer use cases, akin to trying to build the "best photo sharing app" without a clear differentiator. OpenAI's own attempts, such as Sora, are positioned as these new experiences, but Sora dropped off the charts as the novelty wore off, showing how hard sustained differentiation is.

Relying on an API platform for third-party developers does not solve the problem either. It does not create the network effects or ecosystem lock-in that proprietary operating systems such as Windows once did, because users are not compelled to stay inside a specific AI interface to reach these third-party applications and often do not know which models power them. Differentiating the raw chatbot interface is equally hard: one general-purpose "ask anything" box is difficult to make fundamentally different from another, and attempts to do so risk feature bloat that mimics existing software, in the manner of Microsoft Office and Clippy.

A critical disconnect exists between the advanced capabilities frontier models demonstrate on complex, niche benchmarks and the day-to-day needs of most users, who often need deterministic results for standard operating procedures or want practical help such as dating advice. This suggests that pushing model capabilities on obscure tasks may not align with mass-market adoption. The user base, while large in weekly active users, often engages with AI shallowly, so subtle improvements in model performance or changes in tone are imperceptible and irrelevant to their workflows. A successful strategy must therefore bridge the gap between cutting-edge AI development and the practical, often procedural, realities of how most people work and live. The challenge is reminiscent of the early days of the internet and of mobile, when the ultimate form and function of these transformative technologies were far from clear.

The core strategic question remains: how can AI companies build sustainable competitive advantages when the foundational models are commoditized and network effects are absent? The path forward likely involves either embedding AI deeply into existing, high-distribution products or convincing users to adopt entirely new AI-native experiences. However, the difficulty of achieving defensible differentiation in both avenues, coupled with a misunderstanding of typical user needs, leaves the long-term competitive strategy for companies like OpenAI uncertain.

Action Items

- Audit user workflows: Identify 3-5 common tasks where users require deterministic results, not generalized reasoning.

- Design user feedback mechanism: Capture instances where responses address obscure benchmarks or theoretical problems instead of the practical solutions users asked for.

- Create runbook template: Define 4 required sections (setup, common failures, rollback, monitoring) to prevent knowledge silos around standard operating procedures.

- Measure chatbot differentiation: For 3-5 core use cases, quantify the difference in user experience between a raw chatbot and feature-enhanced applications.

- Track user adoption: For 3-5 non-chatbot AI experiences, measure engagement and retention compared to raw chatbot interfaces.

Key Quotes

"the models are all basically the same the general purpose model for general purpose use cases the models are all basically the same and from week to week the leader changes they're all within a couple of percentage points of each other but if you're not doing image generation or coding most people wouldn't be able to tell the difference especially most people who are using them every week and rather than every day"

Benedict Evans explains that for most general use cases, the leading AI models are functionally indistinguishable. Evans highlights that this similarity extends to the underlying science, data sets, and infrastructure, suggesting a lack of a clear competitive advantage based solely on model performance.

"so how do you compete an option you either go down the stack or up the stack or both so you can go down the stack and build infrastructure and design your own chips and raise a lot of money but in the end a it's going to be very hard for particularly openai to for their data center to be better than everybody else's or cheaper or more efficient than anybody else's"

Evans outlines two primary strategies for companies to compete in the AI space: either by focusing on the underlying infrastructure ("down the stack") or by building applications and user experiences on top of the models ("up the stack"). Evans notes the significant difficulty in achieving a sustainable advantage in infrastructure due to high costs and the challenge of outperforming established players.

"and then secondly you can make a whole new experience around this technology you can do stuff with it that you couldn't have done before where that is that might be a new dating app it might be a better photo sharing experience of course it might also be sora which is obviously an openai attempt to solve this or now so it was now dropped off the charts as a novelty wore off you make an app that isn't a chatbot but it is powered by the underlying capability"

Evans suggests that another competitive approach involves creating entirely novel user experiences that leverage AI capabilities in ways not previously possible. Evans points to examples like new dating apps or enhanced photo sharing, and also mentions Sora as an attempt to build a distinct experience beyond a basic chatbot interface.

"but microsoft had proprietary apis and proprietary operating system that had totally dominant digital base of like 95% of the world with using windows so you had to write windows software you don't have to write if i'm going to use make a third party app i'm not necessarily going to experience it inside chatgpt it's going to be a third party app and the user isn't going to know what apis i'm using"

Evans contrasts Microsoft's historical ecosystem advantage with the current AI landscape, explaining that Microsoft's Windows operating system created a strong lock-in effect requiring software to be built for it. Evans argues that AI platforms currently lack this inherent lock-in, as users interact with third-party applications rather than directly with the underlying AI APIs.

"and i wonder if there's a gap between that between the clever people building these things and the people actually using it on a day to day basis and there's a lack of understanding still of how people you know it's hard to build something if the people building it aren't actually also the people using it on a day to day basis"

Evans posits a disconnect between AI developers and everyday users, suggesting that the creators may not fully grasp how their technology is being used or needs to be used in practical, day-to-day scenarios. Evans emphasizes that this gap makes it challenging to build relevant and useful AI products.

"and the answer seems to be kind of yes they did hence the disappointment of we don't have enough people using the specific yeah people aren't using those things a couple of weeks ago i heard i heard someone from openai on a podcast an old colleague of mine from a16z martin casado and he said something like you know we really we were really surprised to discover that outside of silicon valley most people like run standard operating procedures and need deterministic results and you thought wait how old are you most people's jobs are a series of processes just why didn't why didn't you know that"

Evans recounts hearing someone from OpenAI say on a podcast, in a conversation involving his former a16z colleague Martin Casado, that OpenAI was surprised to learn that most people outside Silicon Valley run standard operating procedures and need deterministic results. Evans questions this surprise, noting that most jobs are inherently a series of processes, which implies a lack of fundamental understanding of typical user behavior.

Resources

External Resources

Books

- "The Information: A History, a Theory, a Flood" by James Gleick - Mentioned as an example of a book that discusses information.

Articles & Papers

- Mary Meeker's decks - Referenced for historical charts of email adoption being higher than web adoption.

People

- James Gleick - Author of "The Information: A History, a Theory, a Flood."

- Larry Page - Co-founder of Google, mentioned in the context of the early internet.

- Sergey Brin - Co-founder of Google, mentioned in the context of the early internet.

- Mark Zuckerberg - Mentioned in relation to Meta's AI usage and the early days of the internet.

- Jeff Bezos - Mentioned with a meme illustrating his business evolution from bookseller to broader commerce.

- Martin Casado - Evans's former colleague at a16z, mentioned in connection with a podcast on which someone from OpenAI discussed user behavior.

- Mike Krieger - Mentioned in relation to Claude's strategic decision not to pursue a particular market.

Organizations & Institutions

- OpenAI - Primary subject of discussion regarding competition and model differentiation.

- Google - Mentioned in relation to data center infrastructure, AI usage (Gemini), and early internet business models.

- Meta - Mentioned in relation to AI usage (Meta AI) and its comparison to Gemini.

- Microsoft - Mentioned in the context of its historical ecosystem dominance with proprietary APIs and operating systems.

- Apple - Mentioned in the context of its historical challenges and the product strategy of its devices.

- Amazon - Mentioned as an example of a company that could integrate AI features, and for its early business model as an online bookstore.

- AWS (Amazon Web Services) - Mentioned as an infrastructure layer for startups, contrasted with Windows/macOS lock-in.

- GCP (Google Cloud Platform) - Mentioned as an alternative infrastructure layer to AWS.

- Oracle - Mentioned as a potential cloud infrastructure provider.

- Nirvana - Mentioned as a band associated with Seattle, where Jeff Bezos started Amazon as an online bookstore.

- a16z (Andreessen Horowitz) - Mentioned as the firm where Evans and Martin Casado were colleagues.

Websites & Online Resources

- ChatGPT - Discussed as a widely used AI model and a potential platform for third-party developers.

- Gemini - Mentioned as a competing AI model with significant user engagement.

- Claude - Mentioned as an AI model with a specific strategic market decision.

- Sora - Mentioned as an OpenAI attempt to create a new experience around AI capabilities.

- Information Superhighway - A historical concept for the internet, discussed in relation to early predictions of its form.

- Windows - Mentioned as Microsoft's operating system with proprietary APIs and dominant market share.

- macOS - Mentioned as an operating system with significant user lock-in.

Other Resources

- AI (Artificial Intelligence) - The central topic of discussion, focusing on competition, differentiation, and user adoption.

- LLM (Large Language Model) - Referred to as the underlying technology for AI models like ChatGPT.

- TPUs (Tensor Processing Units) - Mentioned in the context of data center infrastructure and chip comparisons.

- Network Effect - Discussed as a factor absent in current AI models, contrasting with previous software platform shifts.

- API (Application Programming Interface) - Mentioned in relation to Microsoft's proprietary APIs and third-party developer access.

- Chatbot - Discussed as a primary interface model for AI, with challenges in differentiation.

- Tab Browsing - Mentioned as a past innovation in web browser UI.

- Agentic - A term used to describe AI agents, noted for its sound rather than clear definition.

- Dogfooding - A concept describing internal use of a product by its own developers.

- Frontier Models - Refers to the most advanced AI models, such as those being developed by OpenAI.

- Benchmarks - Used to evaluate the capabilities of AI models, particularly in obscure or theoretical areas.

- Standard Operating Procedures - Described as a common job requirement for most people, contrasting with AI model capabilities.

- Deterministic Results - Expected outcomes from processes, particularly in standard operating procedures.

- Data Entry - An example of a task requiring precise, deterministic results.

- Intern - Mentioned as a role that might be assigned tasks that current AI models cannot reliably perform.

- Proofreading - An example of a task the speaker uses AI for.

- Features Approach - A strategy for integrating AI into existing products.

- New Experience Approach - A strategy for creating novel applications powered by AI.

- Infra Layer Approach - A strategy for providing the underlying infrastructure for AI.

- New Modality - A term used to describe a new way of interacting with technology, applied to AI.

- Memory - Mentioned as a feature that adds stickiness to AI products, but not a network effect.

- Productivity - Implied as a benefit of AI integration into existing workflows.

- User Surveys - Used to track the usage of different AI models.

- Llama - Mentioned in the context of Meta's AI development.

- Consumer Use Cases - The practical applications of AI for everyday users.

- Conceptual Blue Sky Question - A broad, open-ended question about the future impact of AI.

- Internet in 1997-1998 - Used as a historical parallel to the current state of AI development, characterized by uncertainty and rapid change.

- Mobile (2005-2010) - Used as a historical parallel to the transformative potential of AI, similar to the impact of smartphones.

- iPhone - Mentioned as a catalyst for the mobile revolution.

- Portals (AOL) - Discussed as a dominant internet concept in the late 1990s.

- Email Adoption - Referenced in historical data showing its early prevalence over web adoption.

- Web Adoption - Referenced in historical data showing its growth relative to email.

- Search - Mentioned as a business model that was initially questioned.

- Closed Ecosystems - A characteristic of Apple that was historically seen as a weakness.

- Open Ecosystems - Contrasted with closed ecosystems, often seen as a strength.

- AI Overviews - A feature that could be added to existing products.

- Siri - Mentioned as a product that could be improved with AI.

- Instagram - Mentioned as a platform that could be transformed by AI features.

- Amazon Recommendations - An example of a feature that could be enhanced by AI.

- New Dating App - An example of a new experience that could be built with AI.

- Better Photo Sharing Experience - An example of a new experience that could be built with AI.

- Proprietary APIs - Mentioned in relation to Microsoft's historical advantage.

- Operating System - Mentioned in relation to Microsoft's historical advantage.

- Web Browser - Discussed as a key interface that Microsoft owned but did not leverage for overall internet control.

- Search Engine Business Model - Questioned in the early days of the internet.

- AI Differentiation - The core challenge for companies in the AI space.

- Commodities - The current state of AI models, according to the discussion.

- Platforms with Distribution - Entities that could pose a competitive threat to AI model providers.

- General Purpose Model - The current state of widely available AI models.

- Image Generation - A specific use case for AI models.

- Coding - A specific use case for AI models.

- Infrastructure - A layer of the AI stack that companies can build upon.

- Chips - A component of AI infrastructure.

- Data Centers - Physical locations for AI infrastructure.

- Fiber Connections - An example of infrastructure investment.

- Response Time - A user-perceivable performance metric.

- Cost Differential - A potential advantage derived from infrastructure efficiency.

- Performance Differential - A potential advantage derived from infrastructure efficiency.

- Semiconductors - A high-cost, specialized area of infrastructure.

- Aircraft - An example of a high-cost, specialized industry with few players.

- Raw Chatbot - The basic AI model without additional features or applications.

- New Paradigm - A fundamental shift in how technology is used.

- Interface Model - The way users interact with a technology.

- Rendering Engine - A component of a web browser.

- URL Bar - A component of a web browser.

- Input Box - A component of an interface.

- Output Box - A component of an interface.

- Microsoft Office - Mentioned as an example of complex software with many features.

- Clippy - A virtual assistant from Microsoft Office, used as an analogy for potential AI feature complexity.

- Stickiness - A quality that keeps users engaged with a product.

- Clever People - Refers to the skilled individuals working in the AI field.

- Mind Share - The level of public awareness and recognition for a product or company.

- Momentum - The force of progress and adoption behind a product or company.

- Strategic Advantage - A unique benefit that allows a company to outperform competitors.

- Lock-in - A situation where users are dependent on a particular product or service.

- Silicon Valley - The hub of technological innovation.

- Theoretical Physics Questions - An example of obscure benchmarks used to test AI models.

- Chemistry Tests - An example of obscure benchmarks used to test AI models.

- Logic Puzzles - An example of obscure benchmarks used to test AI models.

- Mathematics - An example of obscure benchmarks used to test AI models.

- Dating Advice - A practical, everyday use case for AI.

- Theoretical Physics - An area of study involving complex concepts.

- Reasoning Categories - Different types of cognitive processes that AI models can perform.

- User Workflows - The sequence of tasks a user performs.

- Unusable - A state where a product or service cannot be effectively used.

- AI Features - Enhancements added to existing products using AI.

- Transformative Features - AI features that significantly change how a product functions.

- Existing Business - Companies that can integrate AI into their current operations.

- New Applications - Novel software or services built with AI.

- Solving New Things - The ability of AI to address previously unaddressed problems.

- Raw Chatbot Differentiation - The challenge of making a basic chatbot unique.

- Google AI Usage - Data on how users interact with Google's AI products.

- Meta AI Usage - Data on how users interact with Meta's AI products.

- Gemini Usage Charts - Data showing the growth of Gemini's user base.

- Claude Usage - Data on how users interact with Claude.

- Products that don't look like a chatbot - A category of AI applications.

- **Chatbots that