Treating LLMs as APIs Requires Defensive Programming and Structured Output

TL;DR

- Treating LLMs as APIs requires defensive programming, using tools like diskcache to store and retrieve results, preventing redundant and costly computations for identical inputs.

- LLM outputs can be unpredictable; employing structured output formats via libraries like Pydantic, potentially with retry mechanisms like the instructor library, ensures data integrity and type safety.

- Leveraging LLMs effectively means focusing on prompt engineering and output validation, rather than solely relying on the LLM for complex logic, to maintain control and predictability.

- The shift in the developer landscape favors domain expertise over deep coding skills when integrating LLMs, enabling niche professionals to build specialized applications with AI assistance.

- While LLMs offer powerful capabilities, developers must remain vigilant against over-reliance, actively maintaining their own programming skills to avoid a decline in fundamental abilities.

- The emergence of tools like OpenRouter and local LLM runners (Ollama, LM Studio) democratizes access to diverse models, facilitating experimentation and cost-effective development.

- The mandate for AI integration in enterprises can provide opportunities to re-evaluate and implement foundational data science techniques, such as those in scikit-learn, which may prove more efficient and reliable for specific tasks.

Deep Dive

Treating Large Language Models (LLMs) as programmable API building blocks, rather than abstract black boxes, is crucial for effectively integrating them into Python applications. This approach emphasizes building defensive, observable, and well-defined interfaces around LLMs, enabling developers to leverage their power while mitigating inherent unpredictability. The core implication is a shift in software development, where the focus moves from rote coding to idea generation and strategic application of LLM capabilities, thereby enhancing rather than replacing human intelligence.

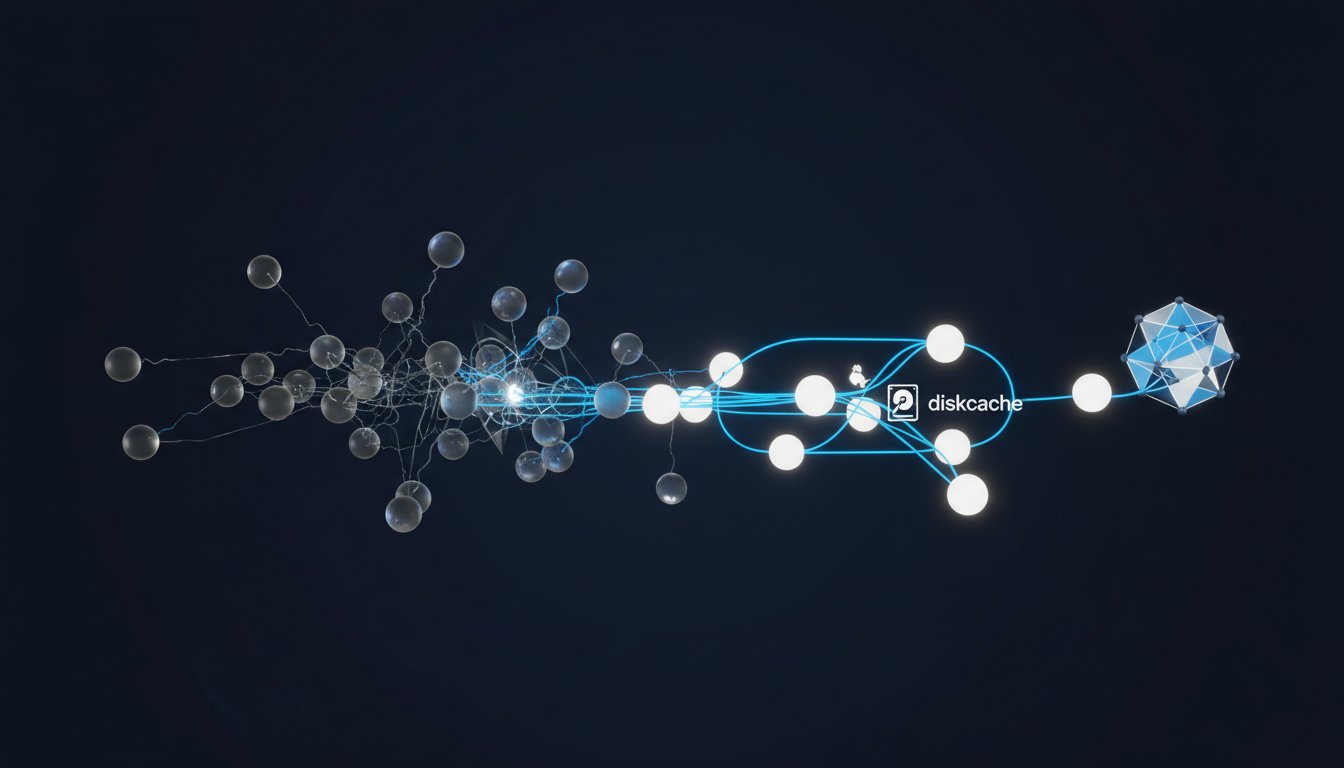

The integration of LLMs into software development necessitates a disciplined approach, treating them as specialized APIs with clear boundaries and robust monitoring. Vincent Warmerdam highlights the inherent unpredictability of LLMs -- providing the same input can yield different outputs -- which demands a defensive programming posture. This means implementing strategies like caching, where libraries such as diskcache become invaluable for storing and retrieving LLM responses based on specific inputs, model settings, and prompts. Caching prevents redundant, costly computations and ensures consistent results when identical queries are made, saving both time and financial resources. Furthermore, Warmerdam advocates for structured output generation, using tools like Pydantic models to define expected data formats (e.g., JSON, lists of strings), which LLMs can then be prompted to adhere to. This transforms LLM outputs from vague text into programmable data structures, enabling seamless integration with existing Python code and reducing the need for brittle text parsing. The library instructor is presented as a sophisticated method for handling LLM output validation, employing a retry mechanism with error feedback to guide the LLM towards producing correctly formatted data, even when initial attempts fail.
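The retry-with-error-feedback idea can be sketched with the standard library alone. This is a minimal illustration of the concept, not the instructor API: `parse_tags`, `ask_with_retries`, and the fake model are hypothetical names, and a real setup would call an actual LLM client instead of the canned replies used here.

```python
import json
from typing import Callable


def parse_tags(raw: str) -> list[str]:
    """Accept only a JSON list of strings; raise ValueError otherwise."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, list) or not all(isinstance(x, str) for x in data):
        raise ValueError("expected a JSON list of strings")
    return data


def ask_with_retries(
    call_llm: Callable[[str], str], prompt: str, max_attempts: int = 3
) -> list[str]:
    """Call the model, validate the reply, and retry with the error attached."""
    message = prompt
    for _ in range(max_attempts):
        raw = call_llm(message)
        try:
            return parse_tags(raw)
        except ValueError as err:
            # Feed the validation error back so the next attempt can correct it.
            message = (
                f"{prompt}\n\nYour previous reply was rejected: {err}\n"
                "Reply with a JSON list of strings only."
            )
    raise RuntimeError(f"no valid output after {max_attempts} attempts")


def make_fake_llm() -> Callable[[str], str]:
    """Hypothetical stand-in for a model: first reply is chatty, second is valid."""
    replies = iter(["Sure! Here are tags: python, llm", '["python", "llm"]'])
    return lambda message: next(replies)


tags = ask_with_retries(make_fake_llm(), "Give tags for this post as a JSON list.")
print(tags)
```

The design choice worth noting is that the validator is an ordinary function that raises, so the same check serves both as the retry trigger and as the guard for downstream code.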

The broader implications of this LLM integration paradigm extend to how we conceptualize software development and the evolving role of developers. While LLMs can automate boilerplate and accelerate prototyping, they do not replace the need for strong foundational programming skills and critical thinking. Warmerdam stresses that the true value lies in "natural intelligence" and good ideas, with LLMs serving as powerful tools to realize them. This is particularly relevant in specialized domains, where LLMs can empower subject matter experts (like dentists) to build custom applications with minimal coding expertise, shifting the skill balance from heavily technical to domain-specific knowledge augmented by AI. However, there's a cautionary undertone: an over-reliance on LLMs without maintaining core programming skills can lead to "self-learned helplessness," mirroring the passive dependency depicted in the movie WALL-E. This underscores the importance of using LLMs as extensions of human capability, not replacements for fundamental understanding. The trend towards smaller, fine-tuned, open-source LLMs, accessible through platforms like Ollama and OpenRouter, further democratizes LLM integration, allowing for cost-effective, localized deployments and tailored AI solutions for specific tasks, from code generation to specialized domain knowledge.

Ultimately, the effective use of LLMs in software development hinges on a mindful integration strategy that prioritizes robust tooling, disciplined methodology, and a continued emphasis on human ingenuity. The proliferation of tools like diskcache, Pydantic, and the llm library by Simon Willison, alongside platforms like OpenRouter for model access, provides developers with the building blocks to create sophisticated LLM-powered applications. The key takeaway is that while LLMs offer unprecedented capabilities, their true potential is unlocked when treated as predictable, programmable components within a well-architected system, guided by human creativity and a commitment to preserving core cognitive skills.

Action Items

- Audit LLM integration: For 5-10 core endpoints, evaluate caching strategies (e.g., diskcache) to prevent redundant API calls and reduce costs.

- Implement structured output validation: For 3-5 critical LLM functions, enforce Pydantic models to ensure predictable, machine-readable responses, preventing manual text parsing.

- Develop LLM prompt templates: Create a reusable library of 5-10 parameterized prompt templates to standardize LLM interactions and improve consistency across applications.

- Measure LLM performance against benchmarks: For 2-3 key use cases, compare LLM output quality and cost against simpler models (e.g., scikit-learn) to identify cost-effective solutions.

- Establish LLM API boundaries: Define clear input/output contracts for 3-5 LLM-powered features to isolate LLM behavior and simplify integration into existing Python applications.
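As one possible shape for the prompt-template and API-boundary items above, the sketch below pairs a parameterized template with typed request and response objects. All names (`SUMMARY_PROMPT`, `SummaryRequest`, `SummaryResponse`, `summarize`) are hypothetical, and the model is passed in as a plain callable so the boundary can be exercised without a real LLM.

```python
from dataclasses import dataclass
from string import Template
from typing import Callable

# A reusable, parameterized prompt template (illustrative wording).
SUMMARY_PROMPT = Template(
    "Summarize the following $doc_type in at most $max_words words:\n\n$text"
)


@dataclass(frozen=True)
class SummaryRequest:
    """Input contract for the LLM-powered feature."""
    text: str
    doc_type: str = "article"
    max_words: int = 50


@dataclass(frozen=True)
class SummaryResponse:
    """Output contract: callers never see raw model text."""
    summary: str
    model: str


def summarize(
    request: SummaryRequest, call_llm: Callable[[str], str], model: str
) -> SummaryResponse:
    prompt = SUMMARY_PROMPT.substitute(
        doc_type=request.doc_type, max_words=request.max_words, text=request.text
    )
    raw = call_llm(prompt).strip()
    # Enforce the contract at the boundary instead of trusting the model.
    if not raw:
        raise ValueError("model returned an empty summary")
    if len(raw.split()) > request.max_words:
        raise ValueError("summary exceeds the requested word limit")
    return SummaryResponse(summary=raw, model=model)


# Hypothetical stand-in for a real model call.
fake_llm = lambda prompt: "  A short summary.  "
response = summarize(SummaryRequest(text="Long text..."), fake_llm, model="fake-model")
print(response)
```

Because the model sits behind a callable, swapping providers or adding caching does not change the contract that the rest of the application depends on.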

Key Quotes

"I do think that we are all tired of like every tech product out there is trying its best to find a way to put ai in the product like oh any any gmail any calendar app let's put ai in there and it gets a bit tiring like I wouldn't be too surprised if like fire hoses and refrigerators also come with ai features these days like it it feels a bit nuts."

Vincent Warmerdam expresses a common frustration with the pervasive integration of AI into everyday products. He highlights how the desire to incorporate AI can lead to its inclusion in unnecessary or even absurd applications, suggesting a saturation point where the novelty wears off and becomes tiring for consumers.

"My main way of dealing with it is more along the lines of like okay like this genie is not going to go back into the bottle in a way but if we're able to do more things with these LLMs if they're able to automate more boilerplate and that sort of thing then it seems that then I can try and get better at natural intelligence as well instead of this artificial intelligence."

Warmerdam proposes a proactive approach to the integration of LLMs, viewing them as tools that can automate tedious tasks. He suggests that by offloading boilerplate work to AI, individuals can focus on enhancing their own "natural intelligence" and pursue more complex or creative endeavors, rather than simply accepting AI as a replacement for human thought.

"The one thing that's very annoying is you can write down x is equal to 10 and then have all sorts of cells below it that depend on x you can then delete the cell and the notebook will not complain to you about it even though if anyone else tries to rerun the notebook x is gone it won't run and your notebook is broken and you can't share the thing anymore."

Warmerdam points out a significant drawback of traditional notebook environments like Jupyter. He explains that the lack of explicit dependency tracking can lead to broken notebooks when cells are deleted or reordered, making them unreliable and difficult to share, a problem that newer tools aim to address.

"The main thing I had with the course was like I would an LLM is a building block at some point but it's very unlike a normal building block when you're dealing with code because normally with code you put something into a function like one thing comes out but in this particular case you put a thing into a function you have no idea up front what's going to come out and not only that but you put the same thing in twice and something else might come out as well."

Warmerdam describes the unique challenge of integrating LLMs into software development. He contrasts LLMs with traditional code functions, emphasizing their inherent unpredictability and non-deterministic nature, which requires a more defensive and cautious approach to their implementation.

"One of the things you always want to do is like think a little bit about caching and there's a python library called disk cache that I've always loved to use and I highly recommend people have a look at it."

Warmerdam advocates for the use of caching mechanisms when working with LLMs, specifically recommending the diskcache Python library. He explains that caching is crucial due to the cost and computational expense of LLM calls, ensuring that repeated identical requests are not processed unnecessarily.

"The main thing that's always nice and the and when you're dealing with LLMs is you always want to be able to say in hindsight like okay how did this LLM compare to that one you want to compare outputs and then just writing a little bit of a decorator on top of a function is the way to put it in the SQLite and you're just done with that concern."

Warmerdam highlights the utility of diskcache for comparing LLM outputs. He suggests that by using a decorator, developers can easily store and retrieve LLM responses in a SQLite database, enabling straightforward comparison and analysis of different models or prompts after the fact.
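To make the "decorator that puts it in SQLite" idea concrete, here is a standard-library sketch that records each response and then compares models after the fact. It is a hand-rolled illustration rather than how diskcache is implemented; the table name, the `logged` decorator, and the fake `generate` function are assumptions.

```python
import functools
import sqlite3

# In-memory database for the example; a file path would persist across runs.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE IF NOT EXISTS llm_calls ("
    "model TEXT, prompt TEXT, output TEXT, PRIMARY KEY (model, prompt))"
)


def logged(func):
    """Store each (model, prompt) result in SQLite and reuse it on repeat calls."""
    @functools.wraps(func)
    def wrapper(model: str, prompt: str) -> str:
        row = conn.execute(
            "SELECT output FROM llm_calls WHERE model = ? AND prompt = ?",
            (model, prompt),
        ).fetchone()
        if row is not None:
            return row[0]
        output = func(model, prompt)
        conn.execute("INSERT INTO llm_calls VALUES (?, ?, ?)", (model, prompt, output))
        conn.commit()
        return output
    return wrapper


@logged
def generate(model: str, prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    return f"{model} says: {prompt.upper()}"


prompt = "pick a name for a cat"
for model in ("model-a", "model-b"):
    generate(model, prompt)

# In hindsight: line up what each model produced for the same prompt.
rows = conn.execute(
    "SELECT model, output FROM llm_calls WHERE prompt = ? ORDER BY model", (prompt,)
).fetchall()
for model, output in rows:
    print(model, "->", output)
```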

"The main thing that Simon Willison's library does allow you to do is you are able to say well I have a prompt over here but the output that I'm supposed to get out well that has to be a JSON object of the following type and then you can use Pydantic."

Warmerdam discusses the llm library by Simon Willison, emphasizing its capability to enforce structured output formats. He explains that by combining this library with Pydantic, developers can specify that the LLM's response must conform to a particular JSON schema, ensuring more predictable and usable data.
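The Pydantic side of this can be shown without calling any model. The sketch below assumes Pydantic v2 and a hypothetical `Recipe` schema; the raw strings stand in for LLM replies, and how the schema is handed to a particular LLM client is deliberately left out.

```python
from pydantic import BaseModel, ValidationError


class Recipe(BaseModel):
    """Hypothetical target structure for the model's reply."""
    title: str
    ingredients: list[str]
    minutes: int


# The JSON schema is what one would pass along with the prompt.
schema = Recipe.model_json_schema()
print(sorted(schema["properties"]))

# Stand-ins for raw model replies.
good_reply = '{"title": "Toast", "ingredients": ["bread", "butter"], "minutes": 5}'
bad_reply = '{"title": "Toast", "ingredients": "bread", "minutes": "soon"}'

recipe = Recipe.model_validate_json(good_reply)
print(recipe.ingredients)

try:
    Recipe.model_validate_json(bad_reply)
    rejected = False
except ValidationError as exc:
    # The error report lists each offending field, which is useful retry feedback.
    rejected = True
    print(exc.errors()[0]["loc"])
```

After validation, the rest of the program works with a typed `Recipe` object rather than free text, which is the point of requesting structured output in the first place.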

"The main thing you get is I remember when the first OpenAI models sort of started coming out I worked back at Explosion we made this tool called spaCy there and one of the things that we were really bummed out about was LLMs can only really produce text so this structural like if you wanted to take a substring in a text for example which is the thing that spaCy is really good at then you really want to guarantee well I'm going to select a substring that actually did appear in the text."

Warmerdam reflects on the limitations of early LLMs, contrasting them with NLP tools like spaCy. He notes the frustration that LLMs primarily output text, making it difficult to guarantee structured information extraction, a problem that has been a significant focus for improvement in the field.
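The substring guarantee mentioned in the quote can be enforced after the fact with a simple check. This is a minimal sketch, assuming the model returns a list of candidate strings; `locate_spans` is a hypothetical helper, and it only finds the first exact occurrence of each candidate.

```python
def locate_spans(text: str, candidates: list[str]) -> list[tuple[int, int, str]]:
    """Keep only candidates that literally occur in text, with their offsets."""
    spans = []
    for candidate in candidates:
        if not candidate:
            continue  # an empty string would trivially "match" at offset 0
        start = text.find(candidate)
        if start == -1:
            continue  # the model invented or paraphrased this string; drop it
        spans.append((start, start + len(candidate), candidate))
    return spans


text = "Vincent Warmerdam worked at Explosion on spaCy."
# Stand-in for model output: the second item does not appear verbatim.
model_output = ["Vincent Warmerdam", "Explosion AI", "spaCy"]

spans = locate_spans(text, model_output)
for start, end, value in spans:
    print(start, end, value)
```

Returning character offsets rather than bare strings means every kept span can be verified by slicing the original text.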

Resources

External Resources

Books

- "LLM Building Blocks for Python" by Vincent Warmerdam - Mentioned as a course that covers reliably adding LLMs to code.

Articles & Papers

- smartfunc (GitHub user koaning) - Mentioned as an example of a library built on top of the llm library from Simon Willison.

Tools & Software

- diskcache - Discussed as a Python library for caching that works on disk and is recommended for its efficiency and ease of use, especially with LLMs.

- LM Studio - Mentioned as a tool with a UI for discovering, setting up, and configuring local LLMs, offering an OpenAI-compatible API endpoint.

- Ollama - Mentioned as a command-line utility for running local LLMs, similar to LM Studio but with less UI focus.

- OpenAI SDK - Referenced as a de facto standard that other libraries can leverage for LLM interaction.

- OpenRouter - Discussed as a service that provides a single API key to route requests to various LLMs, including open-source models, facilitating experimentation and comparison.

- Pydantic - Mentioned as a library used for defining data structures and types, which can be leveraged to enforce structured output from LLMs.

- Sentry - Mentioned as a tool for error tracking and performance monitoring in Python applications, with a specific AI-driven pair programmer feature.

- Simon Willison's LLM library - Described as a "boring" and predictable library for interacting with LLMs, suitable for rapid prototyping and serving as a base layer for custom abstractions.

- Starlette - Mentioned as a project that Marimo builds on top of.

- Uvicorn - Mentioned as a tool that Marimo builds on top of.

People

- Alex - Mentioned as someone who was on the show to talk about Marimo.

- Andrej Karpathy - Referenced for an interview discussing the potential negative outcomes of excessive convenience, referencing the movie WALL-E.

- Michael Kennedy - Host of the podcast, mentioned as having used Disk Cache in a course.

- Phil - Mentioned as a good tech YouTuber to follow for learning Python.

- Samuel Colvin - Creator of Pydantic, mentioned as talking about Pydantic AI.

- Simon Willison - Creator of the llm library.

- Vincent Warmerdam - Guest on the podcast, author of the "LLM Building Blocks for Python" course, maintainer of calmcode.io, and working on data science at Marimo.

Organizations & Institutions

- Anthropic - Mentioned as a provider of LLMs.

- Claude - Mentioned as an LLM.

- calmcode.io - Mentioned as a project maintained by Vincent Warmerdam, offering free educational content on Python.

- Digital Ocean - Mentioned in the context of using S3-like APIs.

- GPT-4 - Mentioned as an LLM.

- Jupyter - Referenced as a well-known interactive environment for Python, with discussions on its limitations and the advantages of alternatives.

- Leaf Cloud - A Dutch cloud provider mentioned for its innovative approach to using excess heat from GPU servers to preheat water in apartment buildings.

- Marimo - Mentioned as an alternative to Jupyter notebooks, offering Python files as notebooks, interactive UI, and better reproducibility.

- Mistral - Mentioned as an LLM provider.

- OpenAI - Mentioned as a provider of LLMs and the creator of the Atlas browser.

- OpenRouter - Mentioned as a service that routes requests to various LLMs.

- Pydantic AI - Mentioned in the context of higher-order programming models and using types with LLMs.

- Rasa - Mentioned as a company that worked on chatbot software.

- Sentry - Mentioned as a tool for error tracking and performance monitoring.

- Talk Python - The podcast itself, mentioned for its courses and YouTube channel.

Podcasts & Audio

- Talk Python To Me - The podcast where this discussion is taking place.

Other Resources

- AI Bubble - Discussed as a potential concern regarding overinvestment in AI.

- Atlas Browser - Mentioned as OpenAI's version of an AI browser.

- Ergonomic Keyboards - Discussed extensively, with a focus on their benefits for RSI prevention and customization.

- LLM as an API - A core concept discussed in the episode, treating Large Language Models as callable services.

- LLM Building Blocks - The concept of using LLMs as modular components in software development.

- Natural Intelligence - Contrasted with Artificial Intelligence, suggesting a focus on human intelligence development.

- Python Package Managers - Discussed in the context of generational shifts, with uv being a modern example.

- RSI (Repetitive Strain Injury) - Discussed in relation to the use of ergonomic keyboards.

- Screaming Frog SEO Spider - Mentioned as a tool that can be used with LLMs.

- uv - Mentioned as a modern Python package manager.