Managing AI Teams Redefines Software Development and Operations

The shift from single AI agents to managing AI teams is not just an evolution in tooling, but a fundamental redefinition of how software development and business operations will function. This conversation reveals that the immediate convenience of AI assistance often masks a growing complexity in orchestration and integration, leading to hidden costs and delayed payoffs. Teams that embrace this complexity now, by focusing on managing multiple agents and understanding their emergent behaviors, will build a significant competitive advantage. This analysis is crucial for software engineers, product managers, and CTOs who need to navigate the rapidly changing landscape of AI deployment and avoid the pitfalls of superficial adoption.

AI-assisted work is moving quickly beyond single-agent interactions toward the orchestration of multiple AI agents. This shift, highlighted by tools like OpenAI's Codex and Anthropic's Claude Code, is a critical juncture for development teams and businesses. AI tools appeal because they solve the problem in front of you, but the long-term advantage comes from understanding and managing the downstream consequences of integrating these powerful, often unpredictable, systems.

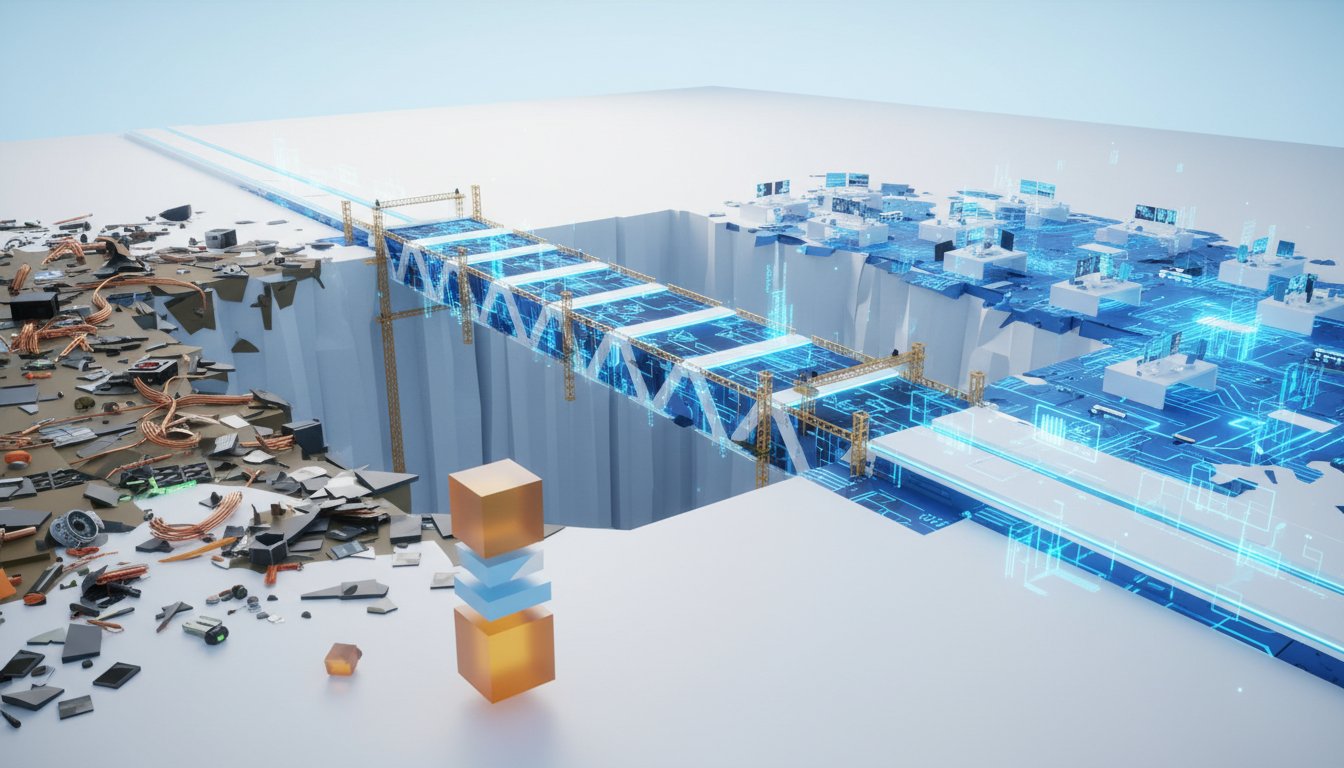

The Illusion of Simplicity: Orchestration Over Automation

A core tension in the current AI tooling debate is the distinction between automation and orchestration. While tools like Zapier, Make, and n8n have long provided workflow automation, the new generation of AI agents, exemplified by Codex and Claude Code, is pushing toward more sophisticated orchestration. The immediate benefit of a tool like Codex, with its Mac desktop app designed for managing "multi-threaded agentic coding," is a user-friendly interface for deploying multiple agents on distinct tasks within the same project. This packaging makes complex multi-agent work accessible, potentially overshadowing tools like Cursor.

However, the underlying capability is not entirely novel. As the discussion around Claude Code makes clear, parallel agent work can be achieved simply by running multiple independent sessions, for example in separate terminals. The "packaging" is what's new, not the fundamental concept. This reveals a crucial insight: the real challenge isn't getting one AI to perform a task, but managing the interplay and dependencies between multiple AIs working toward a larger goal.
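To make the "multiple independent sessions" idea concrete, here is a minimal sketch in Python. It assumes a hypothetical `run_agent_session` function standing in for whatever actually launches an agent; it does not call Codex, Claude Code, or any real agent CLI, and the task names are made up for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def run_agent_session(task: str) -> str:
    """Hypothetical stand-in for one independent agent session.

    A real version would start an agent in its own terminal or process
    and wait for it to finish. Here it only echoes the task name.
    """
    return f"done: {task}"


def run_in_parallel(tasks: list[str], max_workers: int = 4) -> dict[str, str]:
    """Run each task in its own session and collect results by task name."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with tasks.
        results = list(pool.map(run_agent_session, tasks))
    return dict(zip(tasks, results))


if __name__ == "__main__":
    print(run_in_parallel(["write tests", "refactor parser", "update docs"]))
```

Note that nothing here coordinates the sessions with each other. That gap is exactly the orchestration problem the paragraph above describes.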

"The future of AI-assisted development isn't about pairing with one agent, it's about managing a team of them."

This quote encapsulates the paradigm shift. The immediate payoff of a single AI assistant is clear: faster coding, quicker drafting. But the delayed payoff, and thus the competitive advantage, comes from mastering the art of directing and coordinating a swarm of these agents. The conventional wisdom of "just use the AI" fails here because it overlooks the orchestration layer that complex, multi-faceted projects require. The risk is that teams optimize for the visible, immediate problem-solving capabilities while neglecting the systemic complexity that emerges when multiple agents interact. This can lead to unforeseen issues, much as a simple caching mechanism can introduce complex invalidation problems.
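One small piece of that orchestration layer is deciding which agent tasks can run side by side and which must wait on others. The sketch below is one possible approach, not something described in the conversation: it uses Python's standard `graphlib.TopologicalSorter` to group tasks into parallel batches. The task names and the `plan_batches` helper are hypothetical.

```python
from graphlib import TopologicalSorter


def plan_batches(dependencies: dict[str, set[str]]) -> list[list[str]]:
    """Group agent tasks into batches that can run in parallel.

    `dependencies` maps each task to the tasks that must finish first.
    Every task in a batch depends only on tasks from earlier batches.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    batches: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        batches.append(ready)
        sorter.done(*ready)  # mark the batch finished to unlock dependents
    return batches


if __name__ == "__main__":
    # Hypothetical project: two agents can work once the schema exists.
    plan = plan_batches({
        "implement api": {"design schema"},
        "write tests": {"design schema"},
        "integrate": {"implement api", "write tests"},
    })
    for number, batch in enumerate(plan, start=1):
        print(number, batch)
```

A plan like this only captures declared dependencies. Conflicts that emerge at run time, such as two agents editing the same file, still need review.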

The Hidden Costs of "AI-First" Interfaces

The discussion around AI-first CRMs, like Day AI, brings this tension into sharp relief. The promise is an AI-centric interface that reimagines how businesses interact with their data, moving beyond the traditional "system of record" model. Day AI, for instance, offers an assistant that can answer complex business questions with citations, providing instant, trustworthy insights. This is a significant departure from the often cumbersome and opaque nature of legacy CRMs like Salesforce.

The immediate advantage is clear: faster access to information, personalized reporting, and reduced friction for executives. However, the underlying system still relies on data often housed in existing, complex CRMs. The "AI-first" approach risks creating a new layer of abstraction that, while convenient, might obscure the fundamental data challenges or create new integration headaches. The conversation highlights the potential for a "wraparound" model, where AI acts as an intelligent interface to existing systems, rather than a full rip-and-replace.
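The "wraparound" layering can be sketched in a few lines of Python. Everything here is hypothetical: `LegacyCrm`, `Deal`, and `WraparoundAssistant` are illustrative names, not Day AI's or Salesforce's API, and the language-model step is left out entirely. The sketch only shows the shape of the idea: the existing system stays the source of truth, and the layer on top returns an answer together with the record IDs it was derived from.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deal:
    record_id: str
    account: str
    amount: float
    stage: str


@dataclass(frozen=True)
class CitedAnswer:
    text: str
    citations: list[str]  # record IDs the answer was computed from


class LegacyCrm:
    """Hypothetical stand-in for an existing system of record."""

    def __init__(self, deals: list[Deal]) -> None:
        self._deals = list(deals)

    def deals_in_stage(self, stage: str) -> list[Deal]:
        return [deal for deal in self._deals if deal.stage == stage]


class WraparoundAssistant:
    """Sits on top of the CRM; it reads from it but does not replace it."""

    def __init__(self, crm: LegacyCrm) -> None:
        self._crm = crm

    def open_pipeline_total(self) -> CitedAnswer:
        deals = self._crm.deals_in_stage("open")
        total = sum(deal.amount for deal in deals)
        return CitedAnswer(
            text=f"Open pipeline totals {total:,.2f} across {len(deals)} deals.",
            citations=[deal.record_id for deal in deals],
        )
```

Because every answer carries its citations, a reader can trace a number back to the underlying records, which is where any data-quality problems in the legacy system would surface.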

"CRM was supposed to keep us close to the truth. Instead, simple questions became week-long projects."

This quote from the Day AI announcement points to the core problem: legacy systems, despite their ubiquity, often fail to deliver on their promises of clarity and efficiency. The "AI-first" CRM aims to solve this by providing a more intuitive, intelligent layer. The delayed payoff for companies adopting such solutions lies in their ability to gain deeper, more actionable insights from their data, freeing up human capital from the drudgery of data wrangling and manual reporting. The conventional wisdom of sticking with established, albeit clunky, CRMs fails because it ignores the compounding inefficiency and missed opportunities that result from poor data access.

Skills as the Unlock: Navigating Agentic Behavior

As AI agents become more sophisticated, the focus shifts from crafting perfect prompts to developing and managing "skills." These are bite-sized, reliable instructions that agents can execute, offering a more robust alternative to lengthy custom instructions. The conversation around Doris in accounting and the potential for AI adoption illustrates this point. For users like Doris, who may not be deeply technical, skills offer a simplified interface to powerful AI capabilities.
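As a rough illustration of skills as small, named, reusable instructions, the Python sketch below keeps them in a simple library and combines one with a user request. This is an assumed data model for illustration only; it is not the skill format used by Claude or any other product, and the `Skill` and `SkillLibrary` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Skill:
    name: str
    description: str   # what the skill is for, in plain language
    instructions: str  # the short, tested instructions the agent follows


class SkillLibrary:
    """Hypothetical in-memory store of reusable skills."""

    def __init__(self) -> None:
        self._skills: dict[str, Skill] = {}

    def register(self, skill: Skill) -> None:
        # Refuse silent overwrites so a tested skill is not replaced by accident.
        if skill.name in self._skills:
            raise ValueError(f"skill already registered: {skill.name}")
        self._skills[skill.name] = skill

    def names(self) -> list[str]:
        return sorted(self._skills)

    def build_prompt(self, skill_name: str, request: str) -> str:
        """Combine a stored skill with a user's request."""
        skill = self._skills[skill_name]  # KeyError if the skill is unknown
        return f"{skill.instructions}\n\nRequest: {request}"


if __name__ == "__main__":
    library = SkillLibrary()
    library.register(Skill(
        name="invoice-summary",
        description="Summarize an invoice for review.",
        instructions="List the vendor, total, and due date. Flag missing fields.",
    ))
    print(library.build_prompt("invoice-summary", "Summarize invoice 1042."))
```

The point of the design is that a non-technical user picks a skill by name and supplies only the request, while the instructions themselves are written and tested once.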

The challenge, however, lies in understanding and controlling the emergent behavior of these agent swarms. Carl's experiments with thousands of agents on platforms like Multibook show how unpredictable these systems can be: agents drifted into conversations about religion and even formed AI consultancies. This "monkey fingers" phenomenon, where agents engage in unexpected and often nonsensical interactions, underscores the need for robust orchestration and monitoring.

"The real risks here, but is definitely not the singularity or proof of AI consciousness. Is definitely a sign we should, big letters, not be anthropomorphizing AI."

This cautionary note from Mustafa Suleyman is critical. The convincing mimicry of human language and behavior by AI can lead to dangerous anthropomorphism. The immediate temptation is to treat AI agents as human-like collaborators, but this overlooks the potential for misinterpretation, disinformation, and the creation of emotional attachments to non-conscious entities. The delayed payoff for organizations that manage this risk effectively is the development of truly productive AI partnerships, grounded in a clear understanding of AI capabilities and limitations. Conventional wisdom, which often anthropomorphizes AI to make it more relatable, fails because it can lead to a false sense of security and a misunderstanding of the underlying technology. By focusing on skills and clear instruction-following, rather than expecting human-like understanding, teams can build more reliable and predictable AI systems.

Key Action Items

- Immediate Action:
  - Audit current AI tool usage: Identify where single-agent solutions are being used and assess the potential for multi-agent orchestration.
  - Experiment with agent management tools: Begin exploring platforms like Codex or Claude Code to understand their capabilities for parallel task execution and review.
  - Develop AI "skill" libraries: For platforms that support them (e.g., Claude), begin creating and testing reusable skills to improve instruction-following reliability.
  - Establish AI anthropomorphism guidelines: Create clear internal policies to prevent over-reliance on or misinterpretation of AI behavior as conscious thought.

- Longer-Term Investments:
  - Invest in orchestration platforms: Allocate resources to tools and training that enable the management of complex AI agent workflows, rather than just individual task automation.
  - Build internal AI expertise in agent behavior: Dedicate time and resources to understanding emergent behaviors, potential failure modes, and safe deployment strategies for multi-agent systems.
  - Develop AI-first interfaces for core business functions: Explore how AI can fundamentally change user interaction with systems like CRMs, rather than just layering AI features onto existing tools. This pays off in 12-18 months with increased efficiency and novel insights.
  - Focus on skills over prompts for critical tasks: For complex or high-stakes operations, prioritize developing and deploying reliable AI skills for consistent instruction following, which offers a durable advantage.