Orbital Data Centers and Agent Orchestration Redefine AI Compute

The AI race is moving beyond raw model capability toward the question of how humans orchestrate complex AI systems. This conversation argues that the future of AI compute might be in space, driven by a radical consolidation of resources and ambition. At the same time, the interface for working with AI is evolving from simple commands to sophisticated command centers, which fundamentally alters the role of human "developers." Those who grasp these shifts (from capital intensity and infrastructure to agent orchestration and user experience) will be better placed to navigate a rapidly changing AI landscape. This analysis is aimed at technologists, investors, and strategists who want to understand the non-obvious implications of recent AI developments.

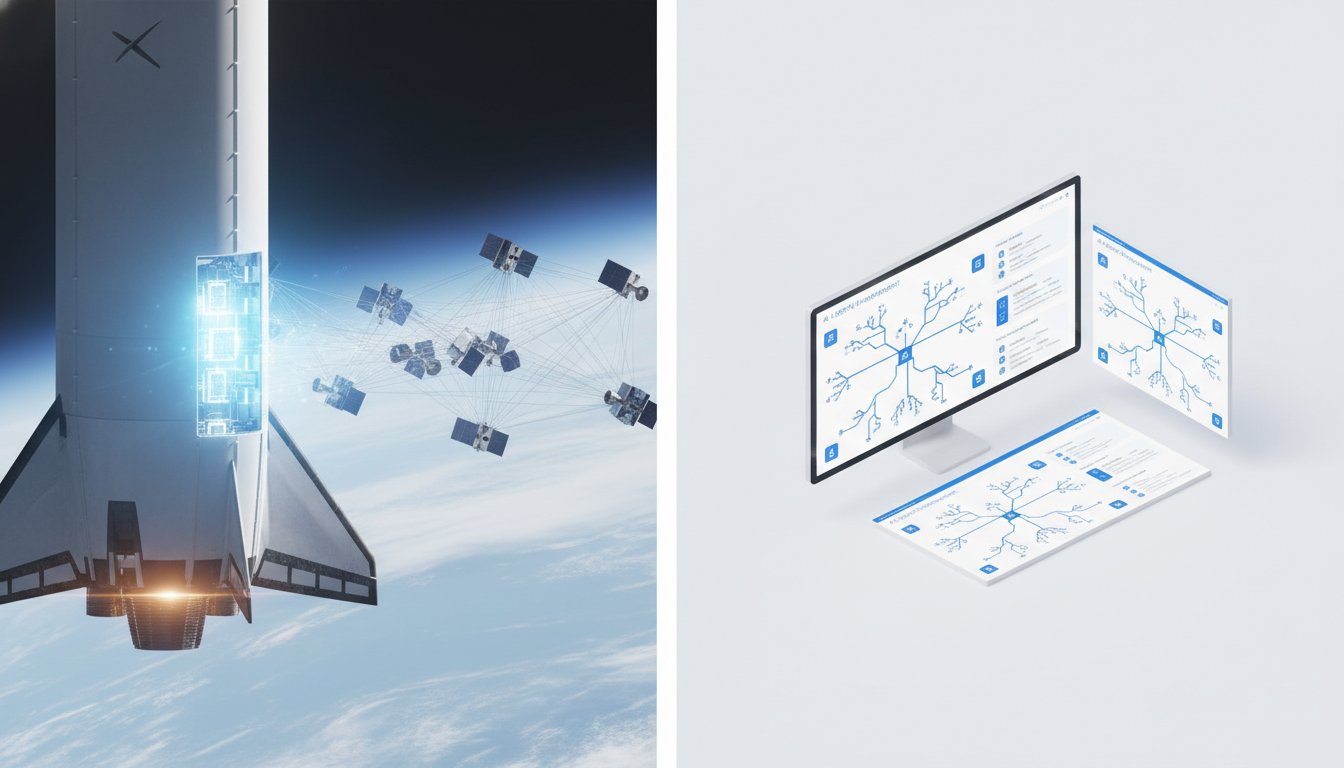

The Orbital Data Center Gambit: Beyond Earthly Compute

The recent merger of xAI and SpaceX, valued at $1.25 trillion, signals a dramatic escalation in the AI race and an attempt to move past terrestrial limits. Elon Musk's stated vision of creating "the most ambitious vertically integrated innovation engine on and off Earth" points to a future where AI compute is not just abundant but strategically located in space. The immediate rationale, as Musk states, is cost efficiency:

"My estimate is that within two to three years, the lowest cost way to generate AI compute will be in space. This cost efficiency alone will enable innovative companies to forge ahead in training their AI models and processing data at unprecedented speeds and scales, accelerating breakthroughs in our understanding of physics and invention of technologies to benefit humanity."

This isn't just about having more compute; it's about a fundamental shift in infrastructure. The proposed network of a million AI satellites, a two-orders-of-magnitude increase in Earth's orbital density, aims to harness solar energy more efficiently and radiate waste heat directly to space, which could make it a more environmentally viable answer to the immense power demands of future AI. The move challenges conventional thinking about AI infrastructure, which is typically confined to Earth-bound data centers. The long-term implication goes beyond faster AI training: the computational power is meant to enable sci-fi-scale ambitions, from lunar manufacturing to Mars colonization. That requires a long investment horizon, in which today's regulatory hurdles and skepticism are costs to be absorbed on the way to capabilities that do not yet exist.
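To give a feel for the heat-rejection side of this claim, here is a rough sizing sketch. It is not drawn from the conversation: it applies the Stefan-Boltzmann law to an idealized radiator panel, and the 300 K panel temperature, the 0.9 emissivity, and the function names are all illustrative assumptions. It also ignores sunlight and Earth infrared absorbed by the panel, so real radiators would need to be larger.

```python
# Stefan-Boltzmann constant in W / (m^2 K^4).
SIGMA = 5.670374419e-8


def radiated_flux_w_per_m2(temp_k: float, emissivity: float) -> float:
    """Ideal flux radiated by a surface facing deep space.

    Assumption: absorbed sunlight and Earth infrared are ignored,
    so this is an optimistic upper bound on net heat rejection.
    """
    return emissivity * SIGMA * temp_k ** 4


def radiator_area_m2(heat_w: float, temp_k: float, emissivity: float) -> float:
    """One-sided radiator area needed to reject heat_w watts."""
    return heat_w / radiated_flux_w_per_m2(temp_k, emissivity)


if __name__ == "__main__":
    # Hypothetical inputs: 1 kW of waste heat, a 300 K panel, emissivity 0.9.
    area = radiator_area_m2(heat_w=1_000.0, temp_k=300.0, emissivity=0.9)
    print(f"radiator area per kW: {area:.2f} m^2")
```

Under these assumptions each kilowatt of waste heat needs about 2.4 square meters of radiating surface, which suggests radiator area, not just launch cost, would be a factor in any orbital compute estimate.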

The Financial Engineering Behind the Vision

While the technological vision is grand, the merger also appears to be a strategic financial maneuver. As The Information notes, the move addresses the immense capital intensity of competing in AI:

"There's no question the move is financially motivated. Musk may be the richest man in the world, but he's facing the same financial realities the leaders of other AI startups face. It's very difficult to compete in AI development with deep-pocketed tech giants like Google and Meta, which own cash machines in their advertising businesses."

xAI, reportedly burning through billions, needs SpaceX's substantial revenue and investor base. The consolidation aims to create a unified entity capable of attracting the massive capital required for both space infrastructure and cutting-edge AI development. The valuation, while astronomical, reflects the market's belief (or at least Musk's) in the potential of this integrated approach. Investors are left to weigh the ambitious technological roadmap against the financial realities and the risk of dilution or misallocated resources. This highlights a critical system dynamic: the need for massive capital can push seemingly disparate ventures into complex partnerships that are justified by their synergies. The payoff is delayed but could be immense, potentially creating a unique competitive moat if the orbital compute vision materializes.

The Uncomfortable Reality of Agent Orchestration

Shifting from infrastructure to application, OpenAI's new Codex desktop app signifies a critical evolution in how humans interact with AI. The conversation highlights a paradigm shift from models competing on raw capability to products competing on user experience, specifically in orchestrating AI agents. The move from graphical user interfaces (GUIs) to terminal-based interactions with tools like Claude Code marked an initial step towards efficiency. However, OpenAI's Codex app represents a return to and reimagining of the GUI, positioning it as a "command center for agents."

"The core challenge has shifted from what agents can do to how people can direct, supervise, and collaborate with them at scale. Existing IDEs and terminal-based tools are not built to support this way of working. This new way of building, coupled with new model capabilities, demands a different kind of tool, which is why we're introducing the Codex desktop app, a command center for agents."

This statement underscores a key consequence: as AI models become more capable of handling complex, long-running tasks, the bottleneck shifts from model performance to human orchestration. The Codex app aims to address this by letting users manage multiple agents in parallel, switch between tasks seamlessly, and collaborate on large projects. It is a deliberate bet against the recent trend toward terminal-based development. By offering a dedicated interface for agent management, OpenAI is not just providing a tool but shaping the future workflow. Developers are asked to adopt a new identity, moving from individual coders to orchestrators. That transition can be uncomfortable, but it promises significant long-term gains in productivity and project scope: the immediate cost of learning new workflows is the price of managing complex AI systems at scale later.
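The orchestration pattern described here (start several agents in parallel, supervise long-running ones, collect a report per task) can be sketched in a few lines of Python. This is a generic illustration, not how the Codex app works: `run_agent` is a hypothetical stand-in that sleeps instead of calling a model, and `AgentResult`, `supervise`, and `orchestrate` are names invented for this example.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class AgentResult:
    task: str
    status: str  # "done", "failed", or "timed_out"
    detail: str


async def run_agent(task: str, seconds: float) -> str:
    """Hypothetical agent: sleeps to simulate work, fails on 'flaky' tasks."""
    await asyncio.sleep(seconds)
    if "flaky" in task:
        raise RuntimeError("agent gave up")
    return f"finished: {task}"


async def supervise(task: str, seconds: float, timeout: float) -> AgentResult:
    """Run one agent under a deadline and turn every outcome into a report."""
    try:
        detail = await asyncio.wait_for(run_agent(task, seconds), timeout=timeout)
        return AgentResult(task, "done", detail)
    except asyncio.TimeoutError:
        return AgentResult(task, "timed_out", f"exceeded {timeout}s")
    except Exception as exc:  # keep one failing agent from sinking the batch
        return AgentResult(task, "failed", str(exc))


async def orchestrate(tasks: dict[str, float], timeout: float) -> list[AgentResult]:
    """Start all agents concurrently; return reports in the order tasks were given."""
    jobs = [supervise(name, secs, timeout) for name, secs in tasks.items()]
    return await asyncio.gather(*jobs)


if __name__ == "__main__":
    reports = asyncio.run(orchestrate(
        {"refactor auth module": 0.05, "flaky test triage": 0.01, "write migration": 0.5},
        timeout=0.2,
    ))
    for r in reports:
        print(f"{r.status:10} {r.task}: {r.detail}")
```

The design choice worth noting is that the supervisor never lets an exception or a stalled agent escape: every task comes back as a status the human can scan, which is the "direct and supervise" role the quote describes.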

Key Action Items

Immediate Action (0-3 Months):

- Evaluate Orbital Compute Viability: For strategic planners and investors, begin researching the technical and regulatory feasibility of orbital data centers. This involves understanding the FCC application for the proposed satellite network, the potential energy advantages, and the environmental considerations.

- Experiment with Agent Orchestration Tools: For developers and product managers, actively test OpenAI's Codex app and compare its agent management capabilities against existing tools like Claude Code and Cursor. Focus on parallel task execution and long-running task supervision.

- Assess AI Infrastructure Costs: For CTOs and infrastructure leads, re-evaluate current AI compute strategies. Consider the long-term cost implications of traditional data centers versus potential future orbital solutions, factoring in energy efficiency and scalability.

Short-Term Investment (3-12 Months):

- Develop Agent Orchestration Workflows: For engineering teams, begin defining and implementing workflows that leverage multi-agent collaboration for complex projects. This includes training teams on new interfaces and developing best practices for delegating and supervising AI agents.

- Monitor xAI/SpaceX Integration Progress: For investors and market analysts, track the integration progress of xAI and SpaceX, paying close attention to FCC approvals for satellite networks and early deployments of orbital compute infrastructure.

Long-Term Investment (12-24 Months):

- Investigate Space-Based AI Compute: For forward-thinking organizations, begin exploring partnerships or pilot programs related to space-based AI compute as the technology matures and regulatory frameworks solidify. This is a bet on a future where compute is not geographically constrained.

- Redefine Developer Identity and Skills: For educational institutions and HR departments, anticipate the shift in developer roles from individual coders to AI orchestrators. Invest in training programs that focus on agent supervision, prompt engineering for complex tasks, and understanding system-level AI interactions. This means accepting some discomfort now in exchange for relevance later.