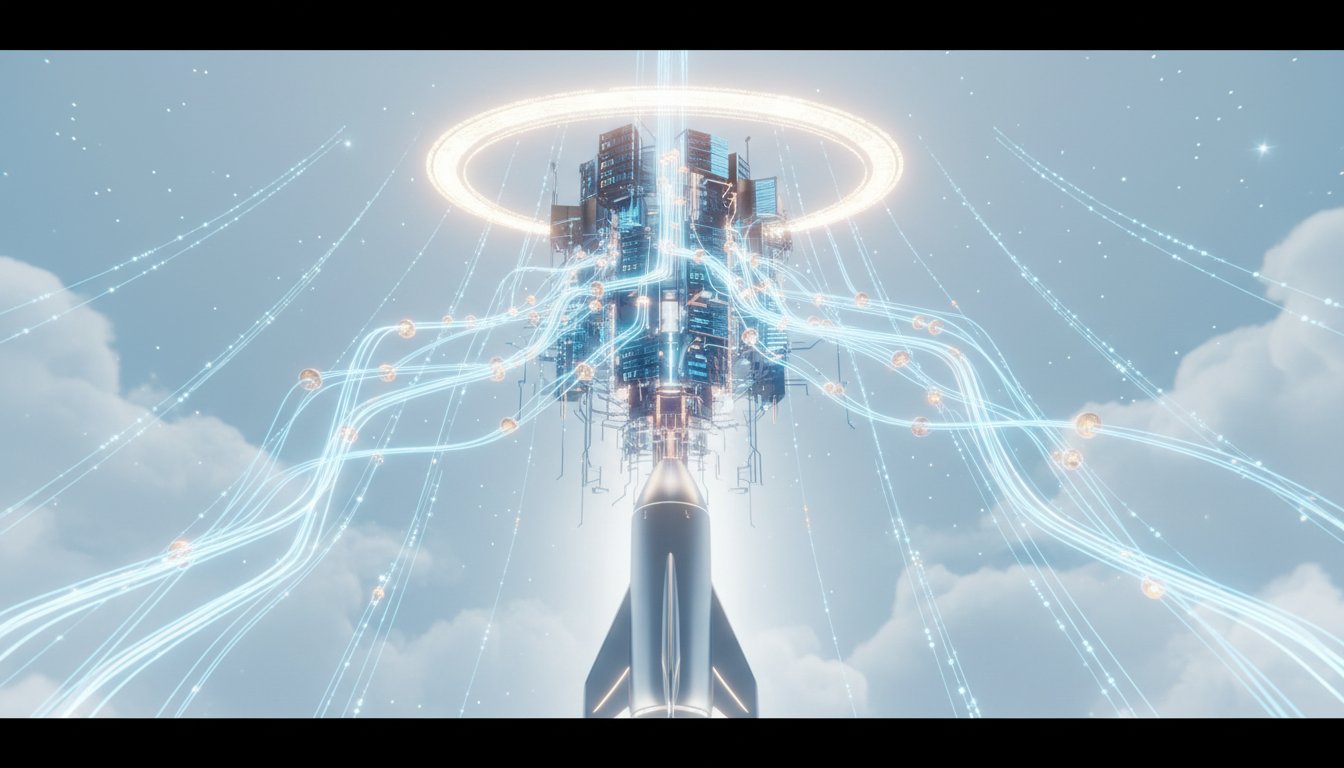

The audacious consolidation of SpaceX and xAI, valued at an astonishing $1.25 trillion, signals a seismic shift in the AI landscape: a move beyond terrestrial limitations toward space-based data centers. The deal isn't just about scaling AI. It's a bet on a future where the infrastructure of computation itself resides in orbit, sidestepping Earth's energy and land constraints. The non-obvious implication is the creation of a deeply integrated "Elon Inc.," in which AI development becomes the unifying force across Musk's ventures, potentially reshaping industries from autonomous vehicles to robotics. This conversation is essential for tech strategists, investors, and anyone seeking to understand the bleeding edge of AI infrastructure and the complex interplay of Musk's ventures. Its distinct advantage is that it illuminates the long-term vision and systemic thinking that conventional market analysis often misses, offering foresight into how AI's future might be built, quite literally, beyond Earth.

The Orbiting Oracle: How SpaceX's xAI Acquisition Reimagines AI Infrastructure

The tech world is abuzz with the unprecedented $1.25 trillion consolidation of SpaceX and xAI, a move that Elon Musk boldly frames as the creation of "the most ambitious vertically integrated innovation engine on and off Earth." The immediate narrative focuses on the sheer scale of the deal and the ambition of space-based data centers. A deeper analysis, however, shows that the merger is not merely an expansion but a fundamental redefinition of AI infrastructure, driven by a long-term vision that prioritizes deep vertical integration and responds to the escalating constraints of terrestrial computing. This isn't about solving today's AI problems. It's about building the foundational architecture for AI's exponential future, one in which the limiting factors of energy, water, and physical space are overcome by moving into orbit.

The core thesis driving this merger, as Ed Ludlow explains, is Musk's conviction that space-based data centers are "obviously the only way to scale AI." This claim cuts through the immediate financial machinations to reveal a profound strategic pivot. On Earth, the relentless demand for AI compute is straining energy grids, consuming vast amounts of water for cooling, and running into hard limits on available land. Musk's answer is to move the infrastructure itself into orbit. There, solar power is abundant, cooling no longer draws on scarce water (although in vacuum, waste heat can only be shed by radiation, which requires large radiator surfaces), and orbit offers seemingly limitless real estate for data centers. The vision is intrinsically linked to SpaceX's Starship program, which would provide the launch capacity for these orbital data centers, creating a self-reinforcing ecosystem. xAI, meanwhile, brings the AI models, and the combined entity aims to run both training and inference in space.
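Whatever its other advantages, an orbital data center has no air or water to carry heat away by convection, so its waste heat must leave by thermal radiation. A rough sense of scale comes from the Stefan-Boltzmann law, P = εσAT⁴. The sketch below is a back-of-envelope estimate, not a design figure from SpaceX or xAI. The function name `radiator_area_m2`, the 300 K radiator temperature, the 0.9 emissivity, the two-sided panel, and the 1 MW and 100 MW loads are all illustrative assumptions, and the model ignores absorbed sunlight and Earth-shine.

```python
# Stefan-Boltzmann constant, W / (m^2 * K^4)
SIGMA = 5.670374419e-8


def radiator_area_m2(heat_w: float, temp_k: float,
                     emissivity: float = 0.9, sides: int = 2) -> float:
    """Idealized radiator area needed to reject heat_w watts by thermal radiation.

    Hypothetical helper. Simplifying assumptions: radiation to a 0 K background,
    no absorbed sunlight or Earth-shine, a uniform panel temperature, and both
    faces of a flat panel radiating when sides=2.
    """
    # Power radiated per square metre of panel footprint.
    flux_w_per_m2 = sides * emissivity * SIGMA * temp_k ** 4
    return heat_w / flux_w_per_m2


if __name__ == "__main__":
    # Illustrative compute loads in megawatts; 300 K is roughly room temperature.
    for load_mw in (1, 100):
        area = radiator_area_m2(load_mw * 1e6, temp_k=300.0)
        print(f"{load_mw} MW at 300 K -> about {area:,.0f} m^2 of two-sided radiator")
```

Under these assumptions a 1 MW load needs roughly 1,200 m² of panel. Because the required area scales with the inverse fourth power of temperature, running radiators hotter is the main lever for shrinking them.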

"The idea is space-based AI, that's the only way to scale."

This move represents a radical form of vertical integration, extending well beyond the usual manufacturing or software development cycles. It's about controlling the entire stack: the rockets that launch the infrastructure, the AI models that run on it, and, critically, the physical location of the compute itself. This holistic approach, dubbed "Elon Inc." by observers, points to a future where Tesla's AI development for autonomous driving and robotics, xAI's foundational models, and SpaceX's orbital infrastructure are not just collaborators but increasingly intertwined components of a single, AI-centric conglomerate. The implication is that future AI advancements, whether for self-driving cars or humanoid robots, could draw on this space-based compute, giving Musk's ventures a distinct competitive advantage if the approach works.

The Hidden Costs of Terrestrial AI and the Orbital Escape Hatch

The narrative surrounding the SpaceX and xAI merger also illuminates the escalating, often hidden, costs and constraints of terrestrial AI development. As Gil Luria details in the context of Oracle's challenges, the demand for AI compute is immense, leading to significant capital expenditures and a reliance on complex financial structures. Oracle's situation, where it is borrowing heavily to build data centers for OpenAI, highlights the precariousness of relying on third-party funding and the potential for financial strain when a money-losing startup is at the center of the equation. The fact that OpenAI's financial commitments are contingent on its ability to raise substantial capital, and that Oracle sits in a less-than-ideal position in the payment hierarchy, underscores the systemic risks inherent in the current AI build-out.

"We've talked about this. This is boisterous behavior. This is not okay. This is unhealthy behavior. It may still work out because this AI stuff is great, but it's very, very risky type of situation for really for both companies."

The SpaceX-xAI consolidation, in contrast, offers a potential escape from these terrestrial constraints. By moving data centers to space, Musk aims to sidestep the energy, water, and land limitations that increasingly bottleneck AI growth on Earth. This strategic foresight, which focuses on long-term infrastructure rather than short-term capital raises, could let SpaceX-xAI avoid some of the financial entanglements and uncertainties facing companies like Oracle. Oracle's immediate pain, in the form of a 10% stock drop and concerns over its debt issuance, is a stark illustration of the risks in conventional AI infrastructure plays. The delayed payoff of space-based data centers, which require massive upfront investment in Starship and orbital hardware, is precisely the kind of long-term play that can create a durable competitive advantage, because most competitors remain focused on more immediate, ground-level solutions.

The Oracle-OpenAI Interplay: A Cautionary Tale of Financial Interdependence

The situation with Oracle and OpenAI provides a critical case study in the financial complexities and potential pitfalls of the AI boom. Oracle's announcement of a $50 billion debt raise to fund cloud infrastructure for OpenAI, coupled with Jensen Huang's clarification that Nvidia's $100 billion commitment to OpenAI was "never a commitment," exposed a fragile web of financial interdependencies. Gil Luria points out that Oracle's debt issuance was rated BBB- (one notch above junk), a concerning sign for a company approaching 50 years old, and that Oracle sits fourth in line to be paid by OpenAI, so its ability to recoup its investment depends heavily on OpenAI's success in raising further capital.
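Why does a creditor's place in line matter so much? In a simple payment waterfall, claims are paid strictly in order of seniority, so a junior claimant is paid only from whatever cash remains after everyone ahead of it. The sketch below illustrates that mechanism only. The function `pay_waterfall`, the claimant names, and the dollar amounts are hypothetical and do not describe OpenAI's actual obligations, and real contracts add terms (collateral, pro-rata tranches, covenants) that a strict waterfall ignores.

```python
from typing import Dict, List, Tuple


def pay_waterfall(cash: float, claims: List[Tuple[str, float]]) -> Dict[str, float]:
    """Pay claims strictly in priority order, most senior first.

    Hypothetical helper. Each claimant receives the lesser of what it is owed
    and the cash still available; anything left after the last claim is
    simply not distributed.
    """
    payouts: Dict[str, float] = {}
    remaining = cash
    for name, owed in claims:
        paid = min(owed, remaining)
        payouts[name] = paid
        remaining -= paid
    return payouts


if __name__ == "__main__":
    # Hypothetical obligations in billions of dollars, listed most senior first.
    claims = [
        ("senior_claimant_1", 10.0),
        ("senior_claimant_2", 10.0),
        ("senior_claimant_3", 10.0),
        ("fourth_in_line", 30.0),
    ]
    for cash in (25.0, 35.0, 60.0):
        paid = pay_waterfall(cash, claims)["fourth_in_line"]
        print(f"cash available ${cash:.0f}B -> fourth-in-line claimant receives ${paid:.0f}B")
```

With these made-up numbers, the fourth claimant receives nothing at $25B of available cash, $5B at $35B, and is paid in full only at $60B. That is the sense in which a junior creditor's recovery hinges on the debtor's ability to raise more money.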

"The Nvidia OpenAI deal has zero impact on our financial relationship with OpenAI. We remain highly confident in OpenAI's ability to raise funds and meet its commitments."

This precarious financial dance was further complicated by a public relations misstep. The official Oracle account's statement on X, meant to downplay the Nvidia-OpenAI situation, backfired spectacularly, drawing more attention to the issue and contributing to a significant stock price drop. Ed Elson calls this a classic example of the Streisand effect, in which attempts to suppress information only amplify it. In Elson's view, the statement was a clear sign of Oracle's anxiety and showed a poor grasp of the current media landscape, where business is consumed as entertainment and missteps spread virally. The lesson for Oracle, and for any company operating in this hyper-visible environment, is that defensive language and attempts to control the narrative can backfire, inviting greater scrutiny and financial repercussions. In the age of digital virality, the cost of a PR blunder can be measured in billions of dollars of market capitalization.

Key Action Items

- Immediate Action (Next 1-3 Months):

  - For Tech Strategists: Re-evaluate AI infrastructure strategies, considering the long-term viability of terrestrial versus orbital solutions. Prioritize understanding the energy and spatial constraints of current AI models.

  - For Investors: Scrutinize companies with significant financial exposure to AI startups, particularly those reliant on third-party funding or complex debt structures for expansion. Look beyond immediate revenue growth to assess profitability and cash flow sustainability.

  - For PR/Communications Teams: Develop crisis communication playbooks that avoid defensive language and the Streisand effect. Focus on transparency and addressing concerns directly, rather than attempting to suppress them.

- Short-Term Investment (Next 3-9 Months):

  - For Companies: Explore partnerships that offer greater financial stability and less contingent revenue streams. Diversify compute providers and infrastructure partners to mitigate single-party risk.

  - For Investors: Analyze the competitive positioning of companies in the AI infrastructure space, paying close attention to those with unique, long-term strategic advantages that may not be immediately apparent in financial statements.

- Long-Term Investment (12-24+ Months):

  - For Tech Companies: Investigate the feasibility and strategic advantages of advanced infrastructure solutions, such as space-based data centers, if aligned with long-term scaling goals. This requires significant R&D and capital commitment.

  - For Investors: Consider the potential of "Elon Inc." as a model for deep vertical integration in AI, where disparate ventures are unified by a common technological objective. Assess the long-term disruptive potential of such integrated ecosystems.

  - For Leaders: Foster a culture that embraces difficult, long-term strategic bets, even if they involve immediate discomfort or lack visible short-term payoffs. This is where durable competitive advantages are built.