Iterative AI Product Development Through Continuous Calibration

TL;DR

- AI products require a fundamental shift in development due to non-determinism in user input and LLM output, necessitating a step-by-step approach with controlled agency.

- Successful AI product development hinges on a "problem-first" mindset, prioritizing workflow understanding and iterative improvement over immediate, complex agent deployment.

- The Continuous Calibration, Continuous Development (CC/CD) framework guides AI product evolution through iterative cycles of development and calibration, minimizing risk and building trust.

- Evals and production monitoring are both crucial, but neither is a cure-all; their application must be context-dependent and integrated into a feedback loop for continuous improvement.

- Coding agents are currently underrated, offering significant potential for productivity gains across industries, despite all the chatter about them on platforms like Twitter and Reddit.

- Obsessing over customer problems and design is more valuable than simply building AI features, as implementation costs decrease and unique human judgment becomes paramount.

- Painful iteration and hard-won lessons from practical experience, rather than theoretical knowledge alone, create a sustainable competitive advantage, or "moat," for AI companies.

Deep Dive

Building AI products demands a fundamental shift from traditional software development, centering on non-determinism and the agency-control tradeoff. This necessitates a problem-first, iterative approach, emphasizing continuous calibration and development to manage inherent uncertainties and build customer trust. Companies that successfully navigate this landscape will prioritize understanding complex workflows and fostering a culture of persistence and deep customer empathy over simply adopting the latest AI tools.

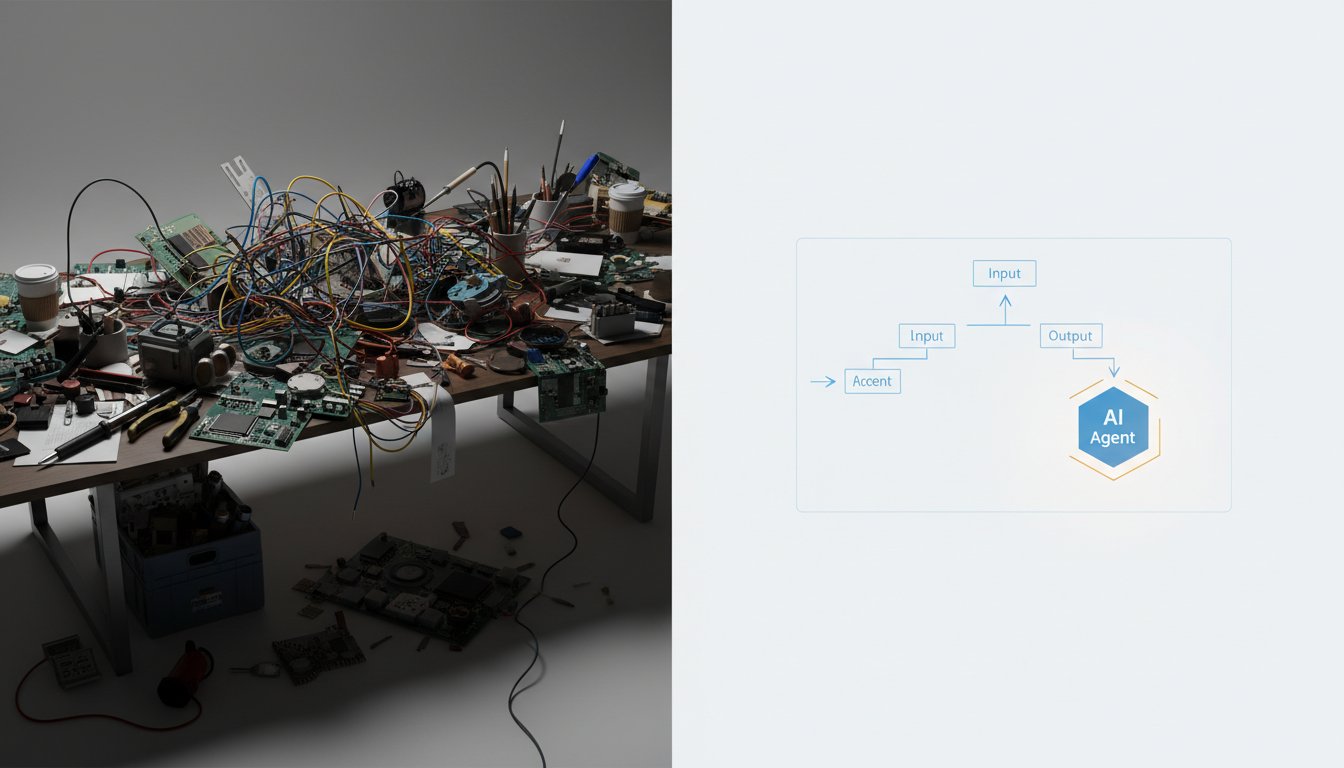

The core challenges in AI product development stem from two key differences compared to traditional software. First, AI systems are built on non-deterministic APIs: both user input and system output can vary unpredictably. On a conventional product like Booking.com, users move through a fixed workflow of forms, buttons, and filters, so their behavior and the system's responses are largely predictable. AI interactions have no such fixed workflow: users can phrase the same intent in countless ways, and the model can answer the same prompt differently each time. Second, there's an inherent tradeoff between agency and control: granting AI systems more autonomy to make decisions means relinquishing human control. Successful AI product development requires carefully managing this balance, starting with high human control and low AI agency, and gradually increasing autonomy as confidence in the system's reliability grows.
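To make the output side of non-determinism concrete, here is a minimal Python sketch of temperature sampling, one common reason the same prompt can yield different completions. The candidate tokens and scores are hypothetical toy values, not output from a real model. Hosted LLM APIs add further sources of variation, so even a temperature of 0 may not guarantee identical responses in practice. The unpredictability of user input, the other half of the problem, is not modeled here.

```python
import math
import random


def softmax(logits, temperature):
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def next_token(candidates, logits, temperature, rng):
    """Pick the next token: greedy when temperature is 0, sampled otherwise."""
    if temperature == 0:
        return candidates[logits.index(max(logits))]
    probs = softmax(logits, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]


# Hypothetical next-token scores for a support reply; not from a real model.
candidates = ["refund", "credit", "apology", "escalate"]
logits = [2.0, 1.6, 1.2, 0.3]

rng = random.Random()  # unseeded on purpose: each run can differ
sampled = [next_token(candidates, logits, 0.8, rng) for _ in range(10)]
greedy = [next_token(candidates, logits, 0, rng) for _ in range(10)]
print("sampled:", sampled)
print("greedy: ", greedy)
```

Greedy decoding always picks the highest-scoring token, while sampling at temperature 0.8 spreads choices across several candidates, so repeated runs of the same input produce different outputs.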

This iterative approach is best encapsulated by the Continuous Calibration, Continuous Development (CC/CD) framework. It advocates a phased rollout: begin with simpler functionality and human oversight, then progressively increase AI autonomy as the system proves its reliability and the team gains confidence. For instance, a customer support AI might start by merely suggesting responses to human agents, then progress to drafting full replies for review, and eventually handle end-to-end resolutions autonomously. Each stage yields data and insights that feed the next, creating a flywheel for continuous improvement. This methodology helps avoid the pitfalls of premature full autonomy, such as the Air Canada incident, in which a support chatbot invented a bereavement-fare refund policy that the airline was later held liable for, and ensures that AI systems stay aligned with business objectives and customer needs.
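The phased rollout can be sketched as an autonomy ladder with an explicit promotion rule. In this minimal Python example, the three levels mirror the customer support progression (suggest, draft, resolve). The `CalibrationGate` class, the acceptance-rate criterion, and the default thresholds are illustrative assumptions, not part of the CC/CD framework as described by its authors.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Autonomy(IntEnum):
    SUGGEST = 1     # V1: suggest responses to a human agent
    DRAFT = 2       # V2: draft full replies for human review
    AUTONOMOUS = 3  # V3: resolve tickets end to end


@dataclass
class CalibrationGate:
    """Hypothetical promotion rule: move up one autonomy level only after
    enough human-reviewed outputs were accepted at the current level."""
    min_reviews: int = 200        # assumed sample size before promoting
    min_acceptance: float = 0.95  # assumed acceptance bar
    level: Autonomy = Autonomy.SUGGEST
    reviews: list[bool] = field(default_factory=list)

    def record(self, accepted: bool) -> None:
        """Log whether a human accepted the system's output as-is."""
        self.reviews.append(accepted)

    def acceptance_rate(self) -> float:
        return sum(self.reviews) / len(self.reviews) if self.reviews else 0.0

    def maybe_promote(self) -> Autonomy:
        """Promote one level if the evidence clears both thresholds."""
        ready = (
            len(self.reviews) >= self.min_reviews
            and self.acceptance_rate() >= self.min_acceptance
        )
        if ready and self.level < Autonomy.AUTONOMOUS:
            self.level = Autonomy(self.level + 1)
            self.reviews.clear()  # start calibrating afresh at the new level
        return self.level


# Usage with tiny thresholds so the example stays short.
gate = CalibrationGate(min_reviews=4, min_acceptance=0.75)
for accepted in [True, True, False, True]:
    gate.record(accepted)
print(gate.maybe_promote().name)  # DRAFT
```

Clearing the review history on promotion reflects the idea that behavior must be recalibrated at each new level of agency: a high acceptance rate on suggestions says little about how the system performs once it acts on its own.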

Beyond process, successful AI product builders must cultivate specific skills. A deep understanding of business workflows and a "problem-first" mindset are crucial, ensuring that AI is applied to genuine pain points rather than becoming a solution in search of a problem. The ability to iterate quickly, to embrace the idea that "pain is the new moat" by learning from failures and complexity, and to persist are vital individual and organizational assets. Leaders must also be deeply engaged with AI: successful CEOs maintain hands-on experience with AI tools to guide strategic decisions, and Rackspace's CEO, for example, has a daily practice of "catching up with AI."

The term "evals" (evaluations) is often misunderstood, and the debate around it has created a false dichotomy between evals and production monitoring. Both are necessary, but they serve different purposes. Evals, typically curated datasets paired with specific metrics, test known failure modes and confirm that core functionality performs as expected. Production monitoring captures emergent behaviors and unexpected issues that surface only in real-world usage, providing invaluable data for continuous improvement. The key is a problem-first approach: choose the right blend of the two based on the specific application and its risks, rather than adhering to a prescriptive definition of evals.
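A minimal sketch of the eval side in Python, assuming a hypothetical `toy_support_bot` as the system under test and simple substring checks as the metric (real evals often use rubric-based or model-based graders instead). Each curated case targets one known failure mode, which also illustrates the limitation: anything not covered by a case goes unnoticed, and that gap is what production monitoring is for.

```python
from collections.abc import Callable
from dataclasses import dataclass


@dataclass
class EvalCase:
    name: str          # the known failure mode this case guards against
    user_input: str
    must_contain: str  # assumed simple check; real evals may use graders or rubrics


def run_evals(system: Callable[[str], str], cases: list[EvalCase]) -> dict[str, bool]:
    """Run every curated case through the system and record pass/fail per case."""
    return {c.name: c.must_contain.lower() in system(c.user_input).lower() for c in cases}


# Hypothetical stand-in for the AI system under test.
def toy_support_bot(message: str) -> str:
    if "refund" in message.lower():
        return "Refunds follow our published policy. Let me connect you to an agent."
    return "Thanks for reaching out! How can I help?"


cases = [
    EvalCase("no_invented_refund_policy", "Can I get a refund for a bereavement fare?", "published policy"),
    EvalCase("escalates_refunds", "I want a refund now", "agent"),
    EvalCase("greets_user", "hi", "help"),
]

results = run_evals(toy_support_bot, cases)
pass_rate = sum(results.values()) / len(results)
print(results)
print(f"pass rate = {pass_rate:.0%}")  # pass rate = 100%
```

All three cases pass here, yet a failure mode missing from `cases` would go undetected no matter how high the pass rate climbs.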

Looking ahead, the AI landscape will likely see a greater emphasis on proactive and background agents that deeply understand user workflows and context, moving beyond task-specific execution. Multimodal experiences, integrating language with other sensory inputs, will become more sophisticated, enriching human-like interactions and unlocking vast amounts of unstructured data. Coding agents, despite growing chatter about them, remain significantly underrated in their potential to transform engineering productivity across a wide range of companies. Ultimately, success in building AI products will hinge on a relentless focus on customer problems, a deep understanding of workflows, and the iterative calibration of AI systems to ensure they reliably deliver value.

Action Items

- Audit the AI product development lifecycle: Identify 3 areas where the Continuous Calibration, Continuous Development (CC/CD) framework can mitigate risk and build improvement flywheels.

- Design iterative AI agent deployment strategy: Start with low agency and high human control (e.g., suggestion-only support agents) for 2-3 core workflows.

- Develop problem-first AI product roadmap: Prioritize understanding 3-5 key customer workflows before selecting AI tools or features.

- Implement proactive monitoring for 5-10 key AI agent behaviors to catch emerging error patterns not covered by static evaluations.

- Create a feedback loop for AI model changes: Track explicit and implicit user signals to inform iterative improvements and recalibration.
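The monitoring and feedback-loop action items can be combined into one mechanism. The Python sketch below aggregates explicit signals (thumbs up or down) and implicit ones (such as regenerating an answer or escalating to a human) per agent behavior, and flags behaviors whose negative-signal rate crosses a threshold. The signal names, the `Event` structure, the 30% threshold, and the 20-event minimum are hypothetical choices for illustration.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical signal names covering explicit feedback and implicit behavior.
NEGATIVE_SIGNALS = {"thumbs_down", "regenerate", "escalated_to_human"}


@dataclass
class Event:
    behavior: str  # which agent behavior produced the response
    signal: str    # e.g. "thumbs_up", "thumbs_down", "regenerate", "copied"


def flag_behaviors(events: list[Event], threshold: float = 0.3,
                   min_events: int = 20) -> dict[str, float]:
    """Return behaviors whose share of negative signals exceeds the threshold,
    ignoring behaviors with too few events to judge."""
    totals = Counter(e.behavior for e in events)
    negatives = Counter(e.behavior for e in events if e.signal in NEGATIVE_SIGNALS)
    return {
        behavior: negatives[behavior] / n
        for behavior, n in totals.items()
        if n >= min_events and negatives[behavior] / n > threshold
    }


events = (
    [Event("refund_lookup", "regenerate")] * 12
    + [Event("refund_lookup", "copied")] * 18
    + [Event("order_status", "thumbs_up")] * 25
    + [Event("order_status", "thumbs_down")] * 2
)
print(flag_behaviors(events))  # {'refund_lookup': 0.4}
```

Here `refund_lookup` is flagged at a 40% negative rate while `order_status` stays well under the threshold. A flagged behavior would then be inspected and, if it reflects a real failure mode, turned into a new eval case before recalibrating.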

Key Quotes

"There are tons of similarities of building AI systems and software systems as well. But then there are some things that kind of fundamentally change the way you build software systems versus AI systems, right? And one of them that most people tend to ignore is the non-determinism. You're pretty much working with a non-deterministic API as compared to traditional software."

This quote highlights a core difference between AI and traditional software development: non-determinism. The author explains that AI systems, unlike predictable traditional software, can produce varied outputs even with the same inputs. This unpredictability fundamentally alters how AI products must be built and managed.

"Every time you hand over decision-making capabilities or autonomy to agentic systems, you're kind of relinquishing some amount of control on your end, right? And when you do that, you want to make sure that your agent has gained your trust or it is reliable enough that you can allow it to make decisions."

The author introduces the concept of the "agency-control tradeoff" in AI systems. This quote emphasizes that granting AI agents more autonomy means a corresponding loss of direct human control. It underscores the critical need for trust and reliability in AI systems before significant decision-making power is delegated.

"The AI life cycle, both pre-deployment and post-deployment, is very different as compared to a traditional software life cycle. And so, so a lot of old contracts and handoffs between traditional roles like say PMs and engineers and data folks has now been broken."

This quote points to the significant shift in development lifecycles brought about by AI. The author explains that the traditional boundaries and workflows between roles like Product Managers and engineers are being redefined. This necessitates a new, more integrated approach to collaboration and ownership in AI product development.

"The idea is pretty simple, which is we have this right side of the loop, which is continuous development, where you scope capability and curate data, essentially get a dataset of what your expected inputs are and what your expected outputs should be looking at. This is a very good exercise before you start building any AI product because many times you figure out that a lot of the folks within the team are just not aligned on how the product should behave."

The author describes the "Continuous Development" aspect of their framework. This quote emphasizes the importance of defining expected inputs and outputs early in the process. It highlights that this initial data curation phase serves as a crucial alignment tool for teams, ensuring everyone agrees on the desired product behavior before development begins.
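The alignment exercise described in this quote can be made measurable. In this minimal Python sketch, two teammates independently write the expected behavior for the same curated inputs, and a hypothetical `alignment_report` function computes simple percent agreement and lists the inputs they disagree on. The inputs and labels are invented examples; a team with larger label sets might prefer a chance-corrected statistic such as Cohen's kappa.

```python
def alignment_report(expected_a: dict[str, str],
                     expected_b: dict[str, str]) -> tuple[float, list[str]]:
    """Compare two teammates' expected outputs for the same curated inputs.

    Returns the share of shared inputs where they agree, plus the inputs
    where their expectations differ (sorted for stable output).
    """
    shared = sorted(expected_a.keys() & expected_b.keys())
    disagreements = [q for q in shared if expected_a[q] != expected_b[q]]
    agreement = 1 - len(disagreements) / len(shared) if shared else 0.0
    return agreement, disagreements


# Hypothetical expected behaviors, labeled independently by a PM and an engineer.
pm = {
    "Where is my order?": "look_up_order",
    "I want a refund": "escalate_to_human",
    "Cancel my subscription": "escalate_to_human",
    "Do you ship to Canada?": "answer_from_faq",
}
engineer = {
    "Where is my order?": "look_up_order",
    "I want a refund": "issue_refund",
    "Cancel my subscription": "cancel_subscription",
    "Do you ship to Canada?": "answer_from_faq",
}

score, conflicts = alignment_report(pm, engineer)
print(f"agreement: {score:.0%}")  # agreement: 50%
print("discuss:", conflicts)
```

Both disagreements in this toy example are agency-control questions (should the agent issue refunds and cancel subscriptions itself, or escalate?), exactly the kind of misalignment worth resolving before building.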

"The issue with just building a bunch of evaluation metrics and then having them in production is evaluation metrics catch only the errors that you're already aware of. But there can be a lot of emerging patterns that you understand only after you put things in production, right? So for those emerging patterns, you're kind of creating, you know, a low-risk kind of a framework so that you could understand user behavior and not really be in a position where there are tons of errors and you're trying to fix all of them at once."

This quote explains a limitation of relying solely on pre-defined evaluation metrics for AI products. The author argues that these metrics can only identify known errors, while unforeseen issues emerge once the product is in production. The proposed solution is a low-risk framework to observe user behavior and identify these new patterns.

"I feel that as a company, I mean, like successful companies right now building in any new area, they are successful not because they're first to the market or like they have this fancy feature that more customers are liking it. They went through the pain of understanding what are the set of non-negotiable things and trade them off exactly with like what are the features or like what are the model capabilities that I can use to solve that problem."

The author introduces the concept of "pain is the new moat." This quote suggests that true competitive advantage in new technology areas comes not from being first or having flashy features, but from enduring the difficult process of understanding core problems and making trade-offs. This lived experience and knowledge gained through overcoming challenges forms a company's unique strength.

Resources

External Resources

Books

- "When Breath Becomes Air" by Paul Kalanithi - Mentioned as a memoir that encourages living life rather than solely examining it.

- "The Three-Body Problem" series by Liu Cixin - Mentioned for its grand-scale science fiction exploring extraterrestrial life and the impact of abstract science on human progress.

- "A Fire Upon the Deep" by Vernor Vinge - Mentioned as a science fiction book about AI and superintelligence.

Articles & Papers

- Guest post - Mentioned as a collaboration between Aishwarya Raganti and Krithi Batthula discussing key insights into building AI products.

People

- Paul Kalanithi - Neurosurgeon and author of "When Breath Becomes Air."

- Liu Cixin - Author of "The Three-Body Problem" series.

- Vernor Vinge - Author of "A Fire Upon the Deep."

- Dan Shipper - Mentioned as a guest on the podcast who discussed CEO involvement as a predictor of AI adoption success.

- Jason Lemkin - Mentioned as a guest on the podcast who discussed replacing sales teams with agents.

- Demis Hassabis - Mentioned as a guest from Google DeepMind who discussed multimodal AI experiences.

Organizations & Institutions

- OpenAI - Mentioned as the employer of Krithi Batthula and a company that faced support volume spikes after product launches.

- Google - Mentioned as a former employer of Krithi Batthula and Aishwarya Raganti.

- Kumo - Mentioned as a former employer of Krithi Batthula.

- Amazon Alexa - Mentioned as a team where Aishwarya Raganti previously worked.

- Microsoft - Mentioned as a former employer of Aishwarya Raganti.

- Amazon - Mentioned as a company where Aishwarya Raganti and Krithi Batthula supported AI product deployments.

- Databricks - Mentioned as a company where Aishwarya Raganti and Krithi Batthula supported AI product deployments.

- Rackspace - Mentioned for its CEO's daily practice of "Catching up with AI."

- Google DeepMind - Mentioned as the organization where Demis Hassabis works.

Courses & Educational Resources

- Number one rated AI course on Maven - Taught by Aishwarya Raganti and Krithi Batthula, focusing on building successful AI products.

Websites & Online Resources

- Lenny's Newsletter - Mentioned as a source for Lenny's Product Pass subscriptions and free resources.

- Lenny's Product Pass - A subscription service offering access to various products.

- Merge.dev/lenny - A URL for booking a meeting with Merge.

- Strella.io/lenny - A URL for trying Strella.

- Brex.com - The website for Brex, an intelligent finance platform.

- GitHub repository - Mentioned as a free resource for learning AI, with approximately 20,000 stars.

- LinkedIn - Mentioned as a platform where Aishwarya Raganti writes and Krithi Batthula is open to discussions.

- Twitter - Mentioned as a platform where chatter about coding agents can be found.

- Reddit - Mentioned as a platform where chatter about coding agents can be found.

- LM Arena - Mentioned as an independent benchmark for model evaluation.

- Artificial Analysis - Mentioned as an independent benchmark for model evaluation.

- Codex - Mentioned as a product and a team at OpenAI.

- Silicon Valley (TV show) - Mentioned as a rewatchable and timeless show relevant to current AI trends.

- Expedition 33 (game) - Mentioned as a well-made game with strong gameplay, story, and music.

- Wispr Flow - Mentioned as a voice transcription tool that switches seamlessly between transcribing speech and following instructions.

- Raycast - Mentioned as a productivity launcher offering keyboard shortcuts and commands.

- caffeinate - Mentioned as a macOS command-line utility that keeps Macs from sleeping, useful during long coding tasks.

Other Resources

- Non-determinism - A key concept in AI products, referring to unpredictable user behavior and LLM responses.

- Agency-control tradeoff - The balance between handing over decision-making capabilities to AI systems and maintaining control.

- Flywheels - Systems that enable continuous improvement over time.

- Evals (Evaluations) - A concept discussed as being misunderstood and having multiple definitions.

- Production monitoring - The process of observing application performance and customer usage in a live environment.

- Implicit feedback signals - Non-explicit indicators of customer satisfaction or dissatisfaction (e.g., regenerating an answer).

- Semantic diffusion - A term coined by Martin Fowler describing how a term's meaning gets diluted as people adopt it with differing definitions.

- Multimodal experiences - AI experiences that incorporate multiple forms of input and output (language, vision, etc.).

- Proactive agents - AI agents that anticipate user needs and workflows.

- Continuous Calibration, Continuous Development (CC/CD) - A framework for building AI products iteratively.

- Behavior calibration - The process of understanding and refining AI system behavior.

- Pain is the new moat - A concept suggesting that enduring the difficult process of building and iterating is a competitive advantage.

- Problem-first approach - Prioritizing understanding and solving the core problem before focusing on the technology.

- Tool-first approach - Focusing on the technology rather than the problem it solves.

- Hype-first approach - Being driven by trends and excitement rather than a clear problem definition.

- Taste and judgment - Skills related to product design and decision-making that are becoming more valuable.

- Execution mechanics - The practical skills involved in building and implementing.

- Busywork era - A past era in which tasks that did not significantly move the needle were common.