Brex's Three-Pillar AI Strategy Drives 10x Workflows and Business Growth

The following blog post analyzes the podcast transcript "Brex’s AI Hail Mary -- With CTO James Reggio" from Latent Space: The AI Engineer Podcast. It applies consequence mapping and systems thinking to distill key insights and actionable takeaways.

This conversation reveals that the true power and peril of AI adoption lie not in the immediate gains but in the complex, often unseen, downstream effects. James Reggio, CTO of Brex, offers a masterclass in navigating these complexities, showing how a disciplined, systems-level approach to AI can unlock significant competitive advantages, even in highly regulated industries. Readers will come away with a clearer understanding of how to move beyond superficial AI implementations and build durable, AI-driven capabilities. This analysis is aimed at technical leaders, product managers, and anyone tasked with embedding AI into complex business operations who wants to avoid common pitfalls.

The Unseen Architecture: How Brex Builds AI That Matters

The allure of AI is its promise of immediate leaps in efficiency and capability. However, as James Reggio explains, both the real magic and the real danger lie in the systems that underpin these tools and in the second-order consequences they unleash. Brex’s strategy, as detailed in this conversation, isn't about chasing the latest LLM or agentic tool; it's about building a robust, adaptable platform and cultivating a culture that understands the long-term implications of AI integration. The approach goes beyond automating tasks to reshape workflows, organizational structure, and even the definition of a financial product.

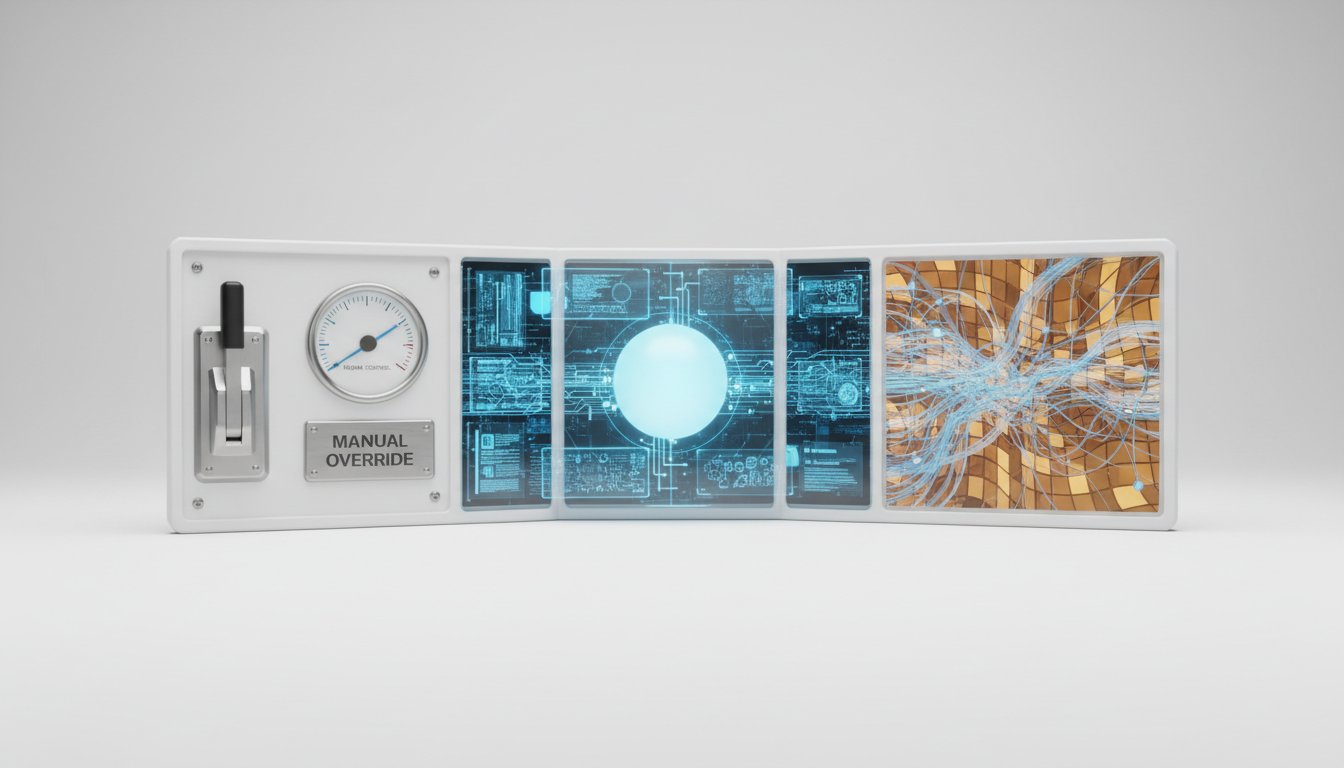

At the core of Brex’s success, Reggio explains, is a deliberate three-pillar AI strategy: Corporate AI to enhance employee workflows, Operational AI to gain leverage on cost and compliance, and Product AI to embed Brex into its customers' own AI strategies. The framework looks straightforward, but it demands a clear understanding of how the pillars interact and influence one another. The immediate payoff of automating a know-your-customer (KYC) process, for instance, is obvious. The downstream consequence, as Reggio highlights, is that the roles of operations teams must be redefined: instead of executing standard operating procedures (SOPs), they become prompt engineers and evaluators. This isn't just about efficiency; it's about evolving the human element within an AI-driven system.
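To make the shift from SOP executor to evaluator concrete, here is a minimal sketch of an evaluation harness an operations team might own. It assumes a hypothetical `classify_kyc_case` function standing in for an LLM-backed classifier (stubbed with a keyword rule so the example runs offline); the case format, the "approve"/"escalate" labels, and the sample documents are illustrative assumptions, not Brex's actual setup.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    """One labeled example written by an operations analyst."""
    case_id: str
    document_text: str
    expected_label: str  # hypothetical labels: "approve" or "escalate"


def classify_kyc_case(document_text: str) -> str:
    """Hypothetical stand-in for an LLM-backed KYC classifier.

    A real system would send a prompt to a model; this stub uses a
    keyword rule so the harness runs without network access.
    """
    risky_terms = ("sanctioned", "mismatch", "expired")
    text = document_text.lower()
    return "escalate" if any(term in text for term in risky_terms) else "approve"


def run_evals(cases: list[EvalCase], classifier: Callable[[str], str]) -> dict:
    """Score a classifier against labeled cases and collect the failures."""
    failures = []
    for case in cases:
        predicted = classifier(case.document_text)
        if predicted != case.expected_label:
            failures.append((case.case_id, case.expected_label, predicted))
    accuracy = 1 - len(failures) / len(cases) if cases else 0.0
    return {"accuracy": accuracy, "failures": failures}


cases = [
    EvalCase("c1", "Passport valid, address verified.", "approve"),
    EvalCase("c2", "Name mismatch between ID and incorporation docs.", "escalate"),
    EvalCase("c3", "Business license expired last year.", "escalate"),
    EvalCase("c4", "Beneficial owner appears on a watchlist.", "escalate"),
]

report = run_evals(cases, classify_kyc_case)
print(f"accuracy={report['accuracy']:.2f}")
for case_id, expected, predicted in report["failures"]:
    print(f"FAIL {case_id}: expected {expected}, got {predicted}")
```

Here case `c4` fails because the stub has no rule for watchlists. In the workflow sketched above, the analyst would revise the prompt (or, in this toy, the rule) and rerun the suite, so the labeled cases double as the team's regression tests.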

A critical insight emerges from how Brex builds its internal AI platform. Instead of rigidly picking winners in the rapidly evolving LLM and agentic-tool landscape, the company has opted for an “employee-built stack.” The model looks decentralized, but it is a deliberate way to manage complexity and encourage experimentation. Employees can choose among multiple tools (ChatGPT, Claude, Gemini, Cursor, Windsurf), and Brex measures adoption through usage trends rather than vanity metrics. The less obvious consequence is a feedback loop: usage data shows which tools provide genuine value, and buying a small number of seats from several vendors helps the company avoid lock-in. The strategy prioritizes learning and adaptation over commitment to a single technology.

"We are not going to try to pick winners in the horse race between the foundational model providers or the agentic coding tools or like basically anywhere where there's, there's an active horse race. What we do, and instead of like trying to pick a single solution, is we will procure like a small number of seats, like multiple solutions, and then we'll give employees the ability to pick whatever one they want to."

-- James Reggio
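One way to measure adoption through usage trends rather than seat counts is to track each tool's weekly active users (WAU) and compare recent weeks with earlier ones. The sketch below assumes a hypothetical usage log of `(week, user, tool)` events; the log format and the simple later-half-minus-earlier-half trend are illustrative choices, not Brex's actual method.

```python
from collections import defaultdict

# Hypothetical usage log: (ISO week, user id, tool name).
usage_events = [
    ("2025-W01", "alice", "Cursor"), ("2025-W01", "bob", "Cursor"),
    ("2025-W01", "carol", "Windsurf"), ("2025-W01", "dave", "Windsurf"),
    ("2025-W02", "alice", "Cursor"), ("2025-W02", "bob", "Cursor"),
    ("2025-W02", "carol", "Cursor"), ("2025-W02", "dave", "Windsurf"),
    ("2025-W03", "alice", "Cursor"), ("2025-W03", "bob", "Cursor"),
    ("2025-W03", "carol", "Cursor"), ("2025-W03", "dave", "Cursor"),
    ("2025-W04", "alice", "Cursor"), ("2025-W04", "bob", "Cursor"),
    ("2025-W04", "carol", "Cursor"), ("2025-W04", "dave", "Windsurf"),
]


def weekly_active_users(events):
    """Return {tool: {week: number of distinct active users}}."""
    users_by_tool = defaultdict(lambda: defaultdict(set))
    for week, user, tool in events:
        users_by_tool[tool][week].add(user)
    return {
        tool: {week: len(users) for week, users in weeks.items()}
        for tool, weeks in users_by_tool.items()
    }


def trend(series, weeks):
    """Mean WAU in the later half of the window minus mean WAU in the earlier half."""
    counts = [series.get(week, 0) for week in weeks]  # weeks with no use count as 0
    if len(counts) < 2:
        return 0.0
    half = len(counts) // 2
    early = sum(counts[:half]) / half
    late = sum(counts[half:]) / (len(counts) - half)
    return late - early


weeks = sorted({week for week, _, _ in usage_events})
wau = weekly_active_users(usage_events)
for tool in sorted(wau):
    print(tool, wau[tool], f"trend={trend(wau[tool], weeks):+.1f}")
```

Because the metric counts distinct active users per week, a tool that still has licensed seats but is losing users shows a negative trend, which is the kind of signal usage-trend tracking is meant to surface before a renewal decision.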

This philosophy extends to Brex's multi-agent architecture. Rather than relying on a single, monolithic agent to handle every task, Brex places an orchestrator in front of a network of specialized sub-agents. The design is a direct response to the limits a single agent hit when it had to cover Brex's many product lines and workflows. The immediate benefit is better performance on specific tasks such as expense management, travel booking, and policy questions. The deeper, systemic advantage is that domain experts (the teams who understand travel or reimbursements best) can iterate on their own agents without affecting the rest of the system. This modularity adds resilience and speeds up development, a crucial competitive advantage in a fast-moving AI development cycle.

"What we found is that the wide range of different product lines that exist on Brex made it difficult for one agent to perform well, being responsible for everything from expense management to finding and booking travel to answering policy and procurement questions. And so that's when we started breaking down the problem into a variety of sub-agents that sit behind an orchestrator."

-- James Reggio
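The orchestrator pattern Reggio describes can be sketched as a router that hands each request to one specialized sub-agent. In the sketch below, the agent classes, the keyword-based routing, and the `handle` interface are hypothetical simplifications; a production orchestrator would more likely ask an LLM to choose the sub-agent, and nothing here reflects Brex's actual code.

```python
from typing import Protocol


class SubAgent(Protocol):
    """Interface every specialized sub-agent is assumed to implement."""
    name: str
    keywords: tuple[str, ...]

    def handle(self, request: str) -> str: ...


class ExpenseAgent:
    name = "expenses"
    keywords = ("receipt", "expense", "reimburse")

    def handle(self, request: str) -> str:
        return f"[expenses] Filing expense for: {request}"


class TravelAgent:
    name = "travel"
    keywords = ("flight", "hotel", "trip")

    def handle(self, request: str) -> str:
        return f"[travel] Searching bookings for: {request}"


class PolicyAgent:
    name = "policy"
    keywords = ("policy", "allowed", "procurement")

    def handle(self, request: str) -> str:
        return f"[policy] Looking up policy for: {request}"


class Orchestrator:
    """Routes each request to the sub-agent whose keywords match best."""

    def __init__(self, agents: list[SubAgent], fallback: SubAgent):
        self.agents = agents
        self.fallback = fallback

    def route(self, request: str) -> SubAgent:
        text = request.lower()
        # Score each agent by how many of its keywords appear in the request.
        scores = [(sum(k in text for k in agent.keywords), agent) for agent in self.agents]
        best_score, best_agent = max(scores, key=lambda pair: pair[0])
        return best_agent if best_score > 0 else self.fallback

    def handle(self, request: str) -> str:
        return self.route(request).handle(request)


policy = PolicyAgent()
orchestrator = Orchestrator([ExpenseAgent(), TravelAgent(), policy], fallback=policy)
print(orchestrator.handle("Book a flight and hotel for the Denver trip"))
print(orchestrator.handle("Upload this receipt for reimbursement"))
print(orchestrator.handle("Is a standing desk allowed under our policy?"))
```

Each sub-agent is an independent class, so a travel team could change `TravelAgent` (its prompts, tools, or routing hints) without touching the expense or policy agents; that separation is the modularity benefit the paragraph describes.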

The conversation also highlights the critical role of evaluation and the subtler risks of AI-generated code. A metric like "% of code written by AI" may look impressive, but Reggio points to second-order effects it hides: low-quality "slop" code, growing drift between team members' understanding of the code in their services, and ambiguity about who owns what. The true measure of AI adoption, then, is not the volume of code produced but the maintainability, clarity, and long-term health of the codebase. Brex's decision to re-interview its entire engineering organization using agentic coding scenarios, for skill development rather than performance scoring, exemplifies this commitment to understanding and mitigating downstream effects. It is an investment in the engineers themselves, helping them evolve with the technology rather than be displaced by it.

"The adoption is there, and now we have to figure out like how to mature in our usage of these tools, so that we, you know, quality or like long-term maintainability doesn't suffer, as well as like maybe one of the other facets of being able to generate a lot more code more quickly is like the, the drift between team members as far as like understanding of the, the code that's in their services increases."

-- James Reggio
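Reggio does not say how Brex measures drift or ownership ambiguity. One hypothetical proxy is knowledge concentration: for each file changed in a recent window, count the distinct people who authored or reviewed those changes and flag files only one person has touched, alongside the share of AI-assisted changes merged without review. The sketch assumes a simplified `Change` record; real data would come from version-control and code-review history, and the `min_people` threshold is an arbitrary assumption.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Change:
    """Simplified record of one merged change (hypothetical schema)."""
    path: str
    author: str
    reviewer: Optional[str]  # None means merged without review
    ai_assisted: bool


def knowledge_report(changes: list[Change], min_people: int = 2) -> dict:
    """Flag thinly understood files and unreviewed AI-assisted changes."""
    people_per_file = defaultdict(set)
    for change in changes:
        people_per_file[change.path].add(change.author)
        if change.reviewer is not None:
            people_per_file[change.path].add(change.reviewer)
    # Files that fewer than `min_people` distinct people have written or reviewed.
    at_risk = sorted(
        path for path, people in people_per_file.items() if len(people) < min_people
    )
    ai_changes = [c for c in changes if c.ai_assisted]
    unreviewed = sum(1 for c in ai_changes if c.reviewer is None)
    share = unreviewed / len(ai_changes) if ai_changes else 0.0
    return {"at_risk_files": at_risk, "unreviewed_ai_share": share}


changes = [
    Change("billing/ledger.py", "alice", "bob", ai_assisted=True),
    Change("billing/ledger.py", "alice", None, ai_assisted=True),
    Change("travel/search.py", "carol", None, ai_assisted=True),
    Change("travel/search.py", "carol", None, ai_assisted=False),
    Change("policy/rules.py", "dave", "erin", ai_assisted=False),
]

report = knowledge_report(changes)
print(report["at_risk_files"])
print(f"{report['unreviewed_ai_share']:.0%} of AI-assisted changes merged without review")
```

Unlike a raw "% of code written by AI" figure, both numbers point at a concrete follow-up: pair someone with the owner of a flagged file, or tighten review rules for AI-assisted changes.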

Ultimately, Brex’s strategy is a testament to systems thinking in practice. They understand that AI is not a standalone tool but a force that reshapes the entire organizational ecosystem. By focusing on platform development, modular agent design, flexible tool adoption, and a culture of continuous learning, Brex is building AI capabilities that are not only powerful today but also adaptable and resilient for the future. This deliberate approach to managing complexity and anticipating consequences is where true, lasting competitive advantage is forged.

Key Action Items

- Implement a Flexible Tooling Strategy: Instead of committing to a single vendor for foundational models or agentic tools, procure multiple solutions and allow teams to experiment. Track usage and performance to inform long-term procurement decisions. (Immediate Action)

- Develop a Multi-Agent Architecture: Break down complex workflows into specialized agents, coordinated by an orchestrator, that can communicate and collaborate. This improves modularity and maintainability and lets domain experts own specific components. (Develop over the next 3-6 months)

- Redefine Operational Roles: Proactively train operations teams in prompt engineering, evaluation techniques, and AI tool usage. Frame this as an evolution of their roles, not a replacement, to foster buy-in and mitigate fear. (Ongoing investment, significant payoff in 12-18 months)

- Focus on Second-Order Code Quality Metrics: Move beyond simple "AI-generated code" percentages. Track metrics related to code maintainability, clarity, and team understanding of codebases. Implement rigorous code review processes that account for AI-generated contributions. (Immediate Action, long-term payoff in codebase health)

- Establish AI Fluency Frameworks and Training: Create clear learning pathways for different levels of AI proficiency (User, Advocate, Builder, Native). Celebrate progress through spotlights and spot bonuses to encourage adoption and innovation across all departments. (Develop over the next quarter, ongoing payoff)

- Adapt Engineering Interviews for Agentic Coding: Revamp interview processes to include agentic coding tasks, focusing on how candidates leverage AI tools and understand the generated output, not just on raw coding ability. (Implement in the next 1-2 months)

- Invest in Grounding Knowledge Bases: Develop and maintain curated internal knowledge bases to ground LLM applications, improving accuracy and reducing hallucinations about company-specific products, policies, and ideal customer profiles (ICPs). (Begin development immediately, ongoing refinement)
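The grounding idea in the last action item can be sketched as retrieval followed by a constrained prompt: find the most relevant entries in a curated knowledge base and instruct the model to answer only from them. The knowledge-base entries, the stopword list, the word-overlap scoring (a simple stand-in for embedding search), and the prompt wording below are all hypothetical, and no model is called.

```python
import re

# Hypothetical curated knowledge base: document id -> approved text.
KNOWLEDGE_BASE = {
    "kb-travel-policy": "Employees may book economy flights for trips under six hours.",
    "kb-reimbursement": "Out-of-pocket expenses must be submitted with a receipt within 30 days.",
    "kb-card-limits": "Card limits are set per team by the finance admin.",
}

# Common words ignored during matching so they don't create spurious overlaps.
STOPWORDS = {"a", "an", "the", "for", "to", "of", "do", "i", "is", "are",
             "be", "by", "with", "how", "what", "my", "must", "may"}


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens with stopwords removed."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(question: str, k: int = 2) -> list[tuple[str, str]]:
    """Return up to k (doc_id, text) pairs ranked by word overlap with the question."""
    query = tokenize(question)
    scored = []
    for doc_id, text in KNOWLEDGE_BASE.items():
        overlap = len(query & tokenize(text))
        if overlap > 0:
            scored.append((overlap, doc_id, text))
    scored.sort(key=lambda item: (-item[0], item[1]))  # best overlap first, then by id
    return [(doc_id, text) for _, doc_id, text in scored[:k]]


def build_grounded_prompt(question: str) -> str:
    """Assemble a prompt that restricts the model to the retrieved sources."""
    passages = retrieve(question)
    if passages:
        context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    else:
        context = "(no relevant documents found)"
    return (
        "Answer using only the sources below and cite their IDs. "
        "If the sources do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


print(build_grounded_prompt("How long do I have to submit a receipt for expenses?"))
```

The explicit "say you don't know" instruction is what the sketch relies on to discourage invented company-specific details when the knowledge base has nothing relevant; keeping the curated entries accurate is the ongoing refinement the action item calls for.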