ARC-AGI: Redefining AI Progress Through Generalization and Adaptability

TL;DR

- ARC-AGI redefines AI progress by prioritizing generalization and adaptability over memorization, shifting focus from SAT-like benchmarks to a human-solvable test of learning new skills.

- Much current AI progress relies on building a dedicated reinforcement learning environment for each target task, a "whack-a-mole" approach that does not produce true generalization to novel problems.

- ARC-AGI 3 introduces an interactive, instruction-free benchmark with video game environments, requiring AI to infer goals and learn through trial-and-error, mirroring real-world interaction.

- Measuring AI will expand beyond accuracy to include efficiency (training data volume, energy consumption, and number of actions taken), aligning evaluation with how humans learn and preventing brute-force solutions.

- Solving ARC-AGI is a necessary but not sufficient condition for AGI, indicating a system's strong generalization ability rather than full artificial general intelligence.

- Major AI labs like OpenAI and Google DeepMind are adopting ARC-AGI for performance reporting, signaling its growing importance as a standard for evaluating advanced AI.

Deep Dive

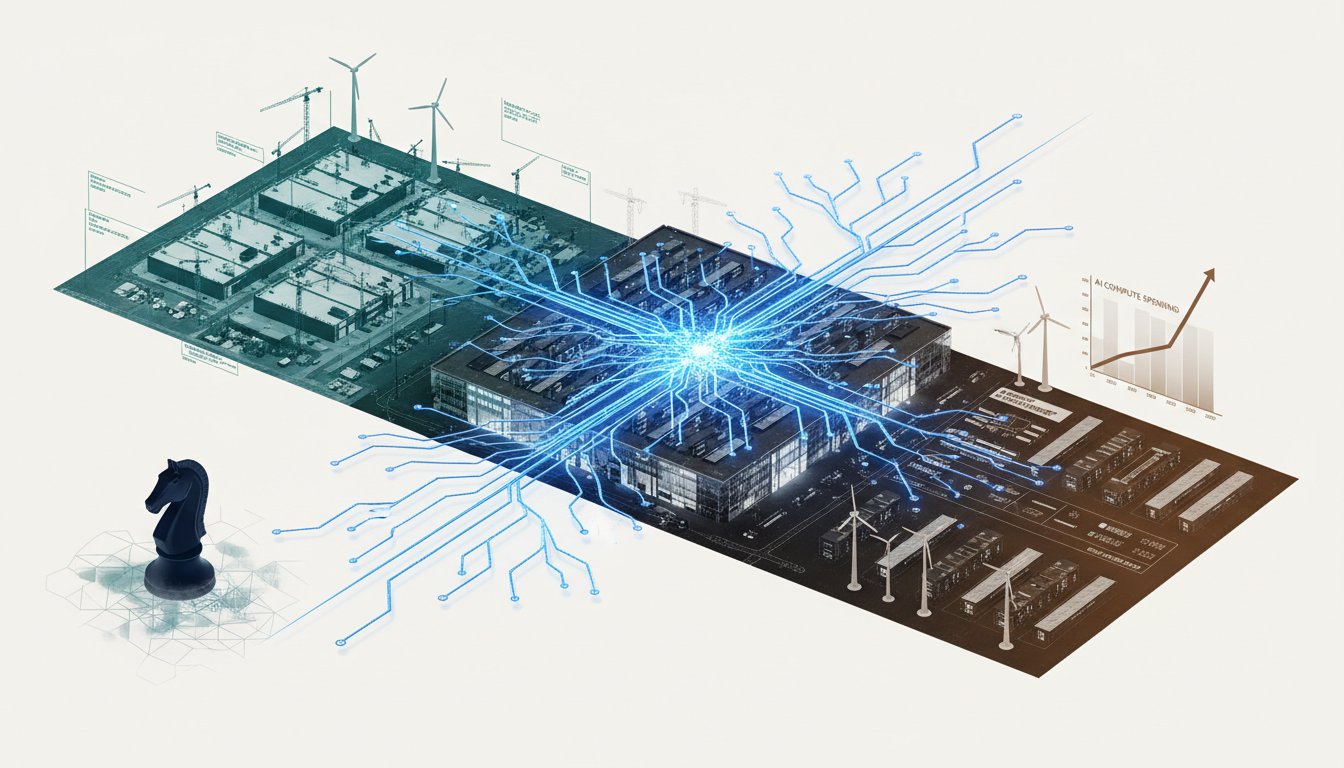

The ARC Prize Foundation is redefining progress toward Artificial General Intelligence (AGI) by shifting focus from memorization and scale to core human-like capabilities: reasoning, generalization, and adaptability. This approach challenges traditional benchmarks, which are increasingly being surpassed by current AI models, and aims to identify true intelligence by testing a system's ability to learn new things efficiently, mirroring human learning processes.

Traditional AI benchmarks, such as MMLU and its successors, pose ever-harder "PhD problems" that leading models now solve with superhuman accuracy, which makes them ineffective for measuring genuine intelligence. In contrast, the ARC benchmark, developed by François Chollet, defines intelligence as the capacity to learn new things. It presents tasks that average humans can solve but that have historically stumped AI, highlighting a critical gap in AI's ability to generalize. Early models, including base versions of GPT-4, performed poorly on ARC, scoring around 4%. Recent advances, particularly the reasoning paradigm, have produced significant jumps, with models like o1 reaching scores of 21%. ARC has since become a standard metric that major AI labs, including OpenAI, xAI, Google DeepMind, and Anthropic, use to report frontier model performance.

The ARC Prize Foundation is developing ARC-AGI 3, an evolution of its benchmark that moves beyond static tasks to interactive environments. Unlike previous versions, ARC-AGI 3 will feature approximately 150 video game-like environments with no explicit instructions. Success will require participants to infer goals through interaction and feedback, a process that more closely mirrors real-world problem-solving. This interactive approach is crucial because it tests a system's ability to adapt and learn in novel situations, a hallmark of true intelligence that current reinforcement learning (RL) environments often fail to capture. The foundation emphasizes that solving ARC is a necessary but not sufficient condition for AGI: mastery of ARC would signal a major leap in generalization, not full AGI.
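The interaction loop described above can be sketched in a few lines. This is a toy illustration only, not the ARC-AGI 3 interface: the `HiddenGoalGrid` environment, the `explore` agent, and the action names are all hypothetical, and the real environments are far richer. The point is that the agent is given no instructions, only an observation and a solved flag after each action, and that the number of actions it spends is recorded.

```python
import random
from dataclasses import dataclass

# Hypothetical action set for a toy grid world (not the ARC-AGI 3 action space).
ACTIONS = ("up", "down", "left", "right")
MOVES = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}


@dataclass
class HiddenGoalGrid:
    """Toy environment: the agent is never told where the goal is."""
    size: int = 4
    goal: tuple = (3, 3)
    pos: tuple = (0, 0)

    def step(self, action):
        """Apply one action; return (observation, solved)."""
        dx, dy = MOVES[action]
        x = min(max(self.pos[0] + dx, 0), self.size - 1)
        y = min(max(self.pos[1] + dy, 0), self.size - 1)
        self.pos = (x, y)
        return self.pos, self.pos == self.goal


def explore(env, max_actions, seed=0):
    """Trial-and-error agent: prefers (state, action) pairs it has not tried yet.

    Returns the number of actions used, or None if the budget ran out.
    """
    rng = random.Random(seed)
    tried = set()
    state = env.pos  # initial observation
    for n in range(1, max_actions + 1):
        untried = [a for a in ACTIONS if (state, a) not in tried]
        action = rng.choice(untried or ACTIONS)
        tried.add((state, action))
        state, solved = env.step(action)  # feedback from the environment
        if solved:
            return n
    return None


if __name__ == "__main__":
    used = explore(HiddenGoalGrid(), max_actions=5000)
    print("actions used:", used)
```

Capping `max_actions` is what makes action count a meaningful measurement: an agent that can only succeed by exhausting the space runs out of budget.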

The shift in evaluation metrics is critical for understanding AI's true progress. While accuracy remains important, the ARC Prize focuses on efficiency, measuring intelligence by the amount of training data and energy required to acquire new skills, and the number of actions needed to solve a problem. This contrasts with brute-force methods that rely on vast computational resources or millions of frames to achieve results. By normalizing AI performance against average human capabilities in terms of actions taken, ARC-AGI 3 aims to prevent AI from simply "spamming" solutions. The ultimate goal of the ARC Prize is to provide a robust framework for identifying and declaring when true AGI has been achieved, moving beyond mere benchmark performance to a deeper understanding of generalized intelligence.
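One plausible way to express the human-normalized action budget is shown below. The source does not give the scoring formula, so the ratio used here (human baseline actions divided by AI actions, capped at 1, and 0 for unsolved environments) is an assumption, as are the names `action_efficiency` and `benchmark_score`. The numbers in the usage example are made up for illustration.

```python
from statistics import mean


def action_efficiency(ai_actions, human_baseline_actions, solved):
    """Score in [0, 1]; 1.0 means at least as action-efficient as the human baseline.

    Assumed formula, not the official ARC-AGI 3 metric.
    """
    if not solved or ai_actions <= 0:
        return 0.0
    return min(1.0, human_baseline_actions / ai_actions)


def benchmark_score(results):
    """Average efficiency over (ai_actions, human_baseline_actions, solved) tuples."""
    return mean([action_efficiency(*r) for r in results])


if __name__ == "__main__":
    # Illustrative, invented results for three environments.
    results = [
        (50, 50, True),    # matches the human baseline
        (200, 50, True),   # solved, but with four times as many actions
        (100, 50, False),  # not solved within the budget
    ]
    print(benchmark_score(results))
```

Under this scheme an agent that solves an environment by spamming actions earns only a fraction of the credit, which is the behavior the normalization is meant to discourage.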

Action Items

- Audit AI benchmarks: Evaluate 3-5 current benchmarks for reliance on memorization or scale, not reasoning or generalization.

- Design generalization test: Create a novel benchmark focusing on efficient learning of new skills, not just solving harder problems.

- Measure AI efficiency: Track training data and energy consumption for AI models against human benchmarks for 3 core tasks.

- Analyze interactive AI performance: For 5-10 AI agents, measure action efficiency in novel, instruction-less game environments.

Key Quotes

"And he actually proposed an alternative theory which is the foundation for what arc prize does and he actually defined intelligence as your ability to learn new things so we already know that ai is really good at chess it's superhuman you know the ai is really good at go it's superhuman we know that it's really good at self driving but getting those same systems to learn something else a different skill that is actually the hard part"

Greg Kamradt explains that the ARC Prize Foundation bases its work on an alternative definition of intelligence, proposed by François Chollet, which focuses on the ability to learn new things. Kamradt contrasts this with AI's current superhuman abilities in specific domains like chess or driving, highlighting that the true challenge lies in a system's capacity to acquire entirely new skills.

"so whereas other benchmarks they might try to do what i call phd problems harder and harder so we had mmlu we had an mmlu plus and now we have humanity's last exam those are going superhuman right arc benchmarks normal people can do these and so we actually test all our benchmarks to make sure that normal people can do them"

Greg Kamradt points out a key difference between ARC benchmarks and other AI evaluations. Kamradt states that while many benchmarks focus on increasingly difficult "PhD problems" that AI can surpass, ARC benchmarks are designed to be solvable by average humans, ensuring they accurately measure generalizable intelligence rather than just advanced problem-solving in narrow fields.

"so actually we used arc to identify that reasoning paradigm was huge that was actually transformational for what was contributing towards ai at the time so much so that now all the big labs xai openai are actually now using arc agi as part of their model releases and the numbers that they're hitting so it's become the standard now"

Greg Kamradt highlights the impact of the ARC benchmark, explaining that it was instrumental in identifying the importance of the reasoning paradigm in AI development. Kamradt notes that this recognition has led major AI labs like OpenAI and xAI to adopt ARC-AGI as a standard for reporting their model performance, indicating its growing influence in the field.

"and core to arc agi is novelty and novel problems that end up coming in the future which is one of the reasons why we have a hidden test set by the way so i think while that's cool and while you're going to get short term gains from it i would rather see investment into systems that are actually generalizing and you don't need the environment for it because if you see or if you compare it to humans humans don't need the environment to go and train on that"

Greg Kamradt emphasizes that novelty and the ability to handle future, unknown problems are central to the ARC-AGI benchmark. Kamradt suggests that focusing on systems that generalize without needing specific environments, much like humans do, is more valuable for long-term progress than relying on solutions that only perform well in pre-defined scenarios.

"and the big difference with arc agi 3 is it's going to be interactive so if you think about reality and the world that we all live in we are constantly making an action getting feedback and kind of going back and forth with our environment and it is in my belief that future agi will be declared with an interactive benchmark because that is really what reality is"

Greg Kamradt describes the upcoming ARC-AGI 3 benchmark, explaining its interactive nature. Kamradt believes that future Artificial General Intelligence (AGI) will be validated through interactive benchmarks because they better reflect real-world interaction, where agents take actions and receive feedback from their environment.

"so back in the old um atari days and 2016 when they were making a run of video games then they would use brute force solutions and they would need millions and billions of frames of video game and they would need millions of actions to basically spam and brute force the space um we're not going to let you do that on arc 3 so we're basically going to normalize ai performance to the average human performance that we see"

Greg Kamradt explains how ARC-AGI 3 will prevent brute-force solutions, contrasting it with older methods used in AI development for video games. Kamradt states that ARC-AGI 3 will normalize AI performance against average human performance, preventing AI from achieving high scores through sheer computational power or repetitive actions.

Resources

External Resources

Papers

- "On the Measure of Intelligence" by François Chollet - Referenced as the paper that proposed the alternative theory and foundation for the ARC Prize.

Research & Studies

- ARC-AGI - Discussed as a benchmark for measuring intelligence based on generalization and adaptability, rather than memorization or scale.

- ARC Benchmark - Mentioned as a test designed to assess the ability to learn new things.

People

- Greg Kamradt - President of the ARC Prize Foundation, interviewed about AI benchmarks and intelligence measurement.

- François Chollet - Proposed the definition of intelligence as the ability to learn new things and created the ARC benchmark.

- Diana Hu - Host of the Y Combinator Startup Podcast, interviewed Greg Kamradt.

Organizations & Institutions

- ARC Prize Foundation - A non-profit organization focused on advancing open progress towards systems that can generalize like humans.

- Y Combinator Startup Podcast - The platform where the interview took place.

- NeurIPS 2025 - The conference where the interview was conducted.

- OpenAI - Mentioned as one of the major labs now using ARC-AGI for model releases.

- xAI - Mentioned as one of the major labs now using ARC-AGI for model releases.

- DeepMind - Mentioned as one of the major labs now using ARC-AGI for model releases.

- Anthropic - Mentioned as one of the major labs now using ARC-AGI for model releases.

Other Resources

- MMLU - Mentioned as a benchmark that AI models were chasing, focusing on harder and harder problems.

- MMLU Plus (as named in the interview; possibly MMLU-Pro) - Mentioned as a benchmark that AI models were chasing.

- Humanity's Last Exam - Mentioned as a benchmark that AI models were chasing.

- ImageNet - Referenced as an example from 2012 where showing a cat image could stump a computer.

- GPT-4 (base model) - Mentioned as performing poorly on the ARC benchmark before 2024.

- o1 - Mentioned as a model that showed a significant jump in performance on the ARC benchmark.

- o1-preview - Mentioned as a model that showed a significant jump in performance on the ARC benchmark.

- Grok 4 - Mentioned as a frontier model release by xAI using ARC-AGI.

- Gemini 3 Pro - Mentioned as a frontier model release by Google (DeepMind) using ARC-AGI.

- Opus 4.5 - Mentioned as a frontier model release by Anthropic using ARC-AGI.

- RL environments - Discussed as a method that can lead to short-term gains but is not a sustainable path to generalization.

- Atari days (2016) - Referenced in the context of brute force solutions for video games requiring millions of frames and actions.