Reid Hoffman: AI Is a Human Potential Amplifier, Not a Threat

In a world grappling with geopolitical instability and the disruptive potential of artificial intelligence, Reid Hoffman, a titan of Silicon Valley, offers a compelling counter-narrative to pervasive fear. In this conversation, he argues that AI is not merely a technological advancement but the "greatest human amplifier" for quality of life, a perspective often obscured by political anxieties and calls to "pause" progress. Hoffman calls clinging to outdated models or resisting AI's integration "stupid thinking," akin to rejecting industrial progress for fear of losing a horse-grooming job. The analysis matters for leaders, investors, and policymakers navigating the complex AI landscape: proactive engagement and courageous leadership, not unproductive anxiety, are what steer toward a beneficial future. The advantage lies with those who act decisively; those who delay adopting AI's benefits cede ground to them.

The Uncomfortable Truth: AI as the Ultimate Amplifier, Not the Ultimate Threat

The discourse surrounding artificial intelligence often feels like a broken record, dominated by anxieties about job displacement, security risks, and the specter of a tech bubble. Yet, in a recent conversation with Bob Safian on "Rapid Response," Reid Hoffman, co-founder of LinkedIn and a prominent tech investor, cuts through the noise with a perspective that is both audacious and deeply pragmatic: AI is the most significant amplifier of human potential ever created. This isn't a call to blind optimism, but a reasoned argument for embracing change and actively shaping its trajectory. Hoffman contends that many current conversations, particularly those emanating from forums like the World Economic Forum, are "non-productive" or even "counterproductive" because they focus on avoiding negative outcomes rather than steering towards positive ones.

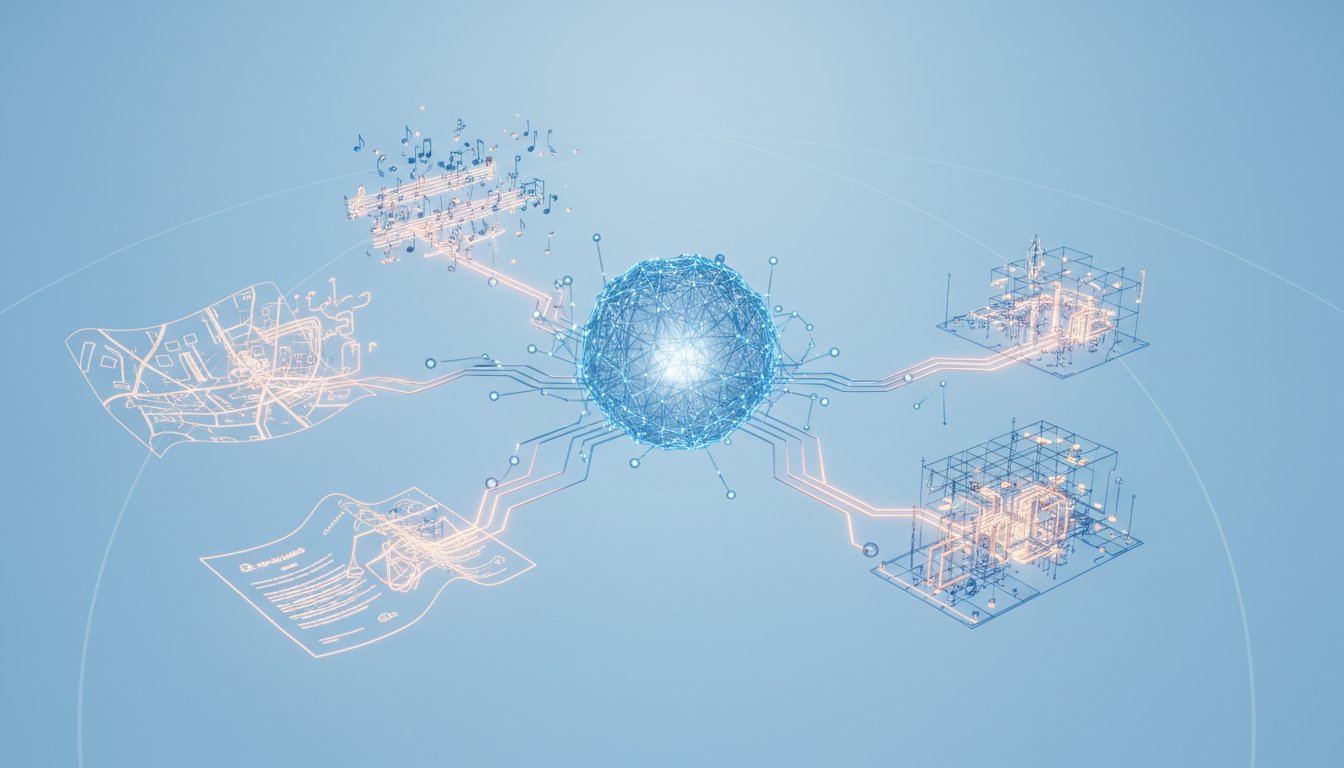

The core of Hoffman's argument lies in reframing AI not as a replacement for human capability, but as an extension of it. He likens current resistance to AI to the Luddite fallacy, the belief that labor-saving technology permanently destroys jobs, which leads people to fear and resist progress and ultimately hinders societal advancement. The AI-generated Christmas music Hoffman shared from a personal project serves as a tangible, albeit whimsical, demonstration of this principle. It shows how AI can democratize creative expression, allowing people without traditional musical training to produce expressive, human-sounding art. The point is not to replace artists but to expand the creative toolkit, much as coding tools empowered non-programmers.

"It's like the Bernie Sanders stupid, 'No data centers here, build them all in Canada, have Canada get all the economic benefit, let's make sure we Americans don't.' It's crazy thinking, it's stupid thinking. You have responsibilities that commensurate with your power and so you need to speak up."

This sentiment highlights a critical consequence of resistance: forfeiting economic and technological leadership. Hoffman argues that countries or organizations that try to "pause" AI development or restrict its infrastructure, such as data centers, are acting against their own long-term interests and failing to acknowledge the inherent directionality of technological progress. The analogy of cars replacing horses and buggies is potent here: that shift disrupted livelihoods in the horse-and-buggy industry, yet it fundamentally improved global quality of life and economic productivity. Those who resist such fundamental shifts, Hoffman implies, are choosing to remain in the past.

The implications for businesses and investors are profound. In Hoffman's view, the conventional wisdom of waiting for AI to mature before investing in or integrating it is a recipe for obsolescence. He emphasizes continuous engagement: experimenting weekly with AI tools to understand their evolving capabilities and potential applications. Embracing this "immediate pain," the effort of learning and adapting, is what creates "lasting moats." Companies that invest in understanding and integrating AI now, despite the inherent uncertainties and likely early missteps, build a foundational advantage that competitors who wait will struggle to overcome. The upfront investment in understanding and adoption pays off later as durable strategic superiority.

The Hidden Costs of Hesitation: Why "Pausing" AI is a Strategic Error

Hoffman's critique extends to the very nature of leadership and decision-making in the face of transformative technology. He observes that many leaders, particularly in the political sphere, advocate for pausing AI development or implementing regulations that stifle innovation. This desire to pause, however, is fundamentally at odds with the reality of global technological advancement.

"I constantly talk to people who are like, well, I'd really like just like us to pause like until we sort out this particular problem, we should all pause. And you think 8 billion people are going to pause? So it's like, no, no, we're going in that direction and it's a question of like, you know, you're kind of going with the rapids and it's a question of how you row your boat, where you're going to, what you're trying to do."

This metaphor of navigating rapids is particularly insightful. It casts AI as an unstoppable force in which the only meaningful agency lies in how we direct our efforts within its flow. Trying to stop the rapids is futile; learning to row effectively is how you harness their power. The consequence of inaction, or of advocating a collective pause, is not safety but a loss of control over the direction of progress. Other, perhaps less scrupulous, actors may then dictate the terms of AI's integration, with suboptimal or even harmful outcomes for society.

Hesitation also has financial implications. Hoffman addresses talk of an "AI bubble," but distinguishes a true bubble from mere speculative overvaluation: in his view, a bubble implies a catastrophic unwind that destabilizes the entire economic system. While acknowledging that some AI investments may be speculative, he argues that AI's fundamental long-term value is undeniable. The mistake is not investing in AI, but investing without a clear understanding of defensible moats and sustainable business models.

When discussing investment strategy, Hoffman stresses the importance of moats, meaning sustainable competitive advantages. In the AI space, where foundational models are increasingly accessible, the real moat may lie in proprietary data, unique applications, or a deep understanding of specific industry needs. Companies that raise capital at exorbitant valuations without a clear path to building such moats are taking on excessive risk. Conversely, investors who identify genuine moats, even at high valuations, can achieve "epic" returns. Hoffman's perspective challenges the conventional wisdom that a high valuation automatically means high risk: valuation is a risk-reward calculation, and a strong moat can justify significant investment, especially when the probability of reaching massive scale is high and not fully appreciated by the market.
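A simple expected-value framing can make this risk-reward point concrete. It is offered as an illustrative assumption, not as Hoffman's own formula. Let p be the probability that a company reaches massive scale, V_exit the value it would then be worth, and V_entry the valuation at which an investor buys in, and assume (as a simplification) that the failure case returns roughly nothing. The expected return multiple is then approximately the first line below; the second line uses purely hypothetical numbers for two deals, A and B (values in billions of USD).

$$
\mathbb{E}[M] \approx p \cdot \frac{V_{\text{exit}}}{V_{\text{entry}}}
$$

$$
\text{A: } 0.10 \cdot \frac{100}{1} = 10\times \qquad \text{B: } 0.30 \cdot \frac{1}{0.1} = 3\times
$$

In this made-up comparison, deal A has the higher entry valuation and the lower probability of success, yet it carries the better expected multiple, because the size of the outcome a strong moat makes possible outweighs the entry price. Real decisions would also weigh variance, dilution, and partial outcomes, which this sketch ignores.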

The pace of AI development starkly illustrates the failure of conventional wisdom. Many organizations adopt a "wait and see" approach, intending to evaluate and adopt AI once the technology has "settled." Hoffman dismisses this as a losing strategy: because AI keeps evolving so rapidly, it may never settle, and waiting for that moment can mean waiting forever. The real advantage, he suggests, comes from adopting and experimenting now, learning from both successes and failures, and adapting iteratively. This requires shifting from seeking perfect solutions to embracing continuous learning and adaptation.

Navigating the Rapids: Actionable Steps for an AI-First Future

Reid Hoffman's insights offer a clear mandate for leaders, investors, and individuals: embrace AI proactively, understand its amplifying power, and actively shape its integration. The consequence of hesitating or resisting is not safety, but obsolescence and a loss of agency. Here are actionable takeaways derived from his perspective:

- Embrace AI Experimentation Weekly: Commit to regularly exploring and experimenting with AI tools. This isn't about finding perfect solutions immediately, but about building an ongoing understanding of AI's capabilities and potential applications within your specific context.
  - Immediate Action: Dedicate 1-2 hours per week to exploring new AI tools or features.
  - Longer-Term Investment (6-12 months): Establish a small internal team or assign individuals to track and report on AI developments relevant to your industry.

- Develop a "Broad Conception of Self-Interest": Frame your engagement with AI not just in terms of individual or company benefit, but as part of a larger societal and human advancement. This perspective can unlock innovative applications and foster more responsible development.
  - Immediate Action: When evaluating AI projects, ask: "How does this amplify human potential and improve quality of life beyond immediate profit?"

- Identify and Build Defensible Moats: In the AI landscape, a strong moat is crucial for long-term success. Focus on proprietary data, unique application development, deep domain expertise, or novel integration strategies rather than solely on foundational models.
  - Immediate Action: Analyze your current competitive advantages and identify how they can be strengthened or translated into AI-specific moats.
  - Longer-Term Investment (12-18 months): Invest in data infrastructure and talent that can create unique datasets or AI applications inaccessible to competitors.

- Cultivate Courageous Leadership: Resist the urge to "pause" or shy away from societal issues amplified by technology. Speak up, engage in constructive dialogue, and advocate for responsible AI development and integration.
  - Immediate Action: Identify one societal issue related to AI where your organization can take a principled stand or contribute to a solution.
  - Longer-Term Investment (6-12 months): Develop a framework for ethical AI deployment and communication within your organization.

- Adopt AI-First Principles Iteratively: Recognize that becoming AI-first is a dynamic, ongoing process, not a destination. Continuously learn, adapt, and integrate AI into workflows, understanding that some attempts will fail but yield valuable lessons.
  - Immediate Action: Implement an AI tool for a specific, low-risk task and measure its impact.
  - Longer-Term Investment (Ongoing): Foster a culture where experimentation with AI is encouraged and learnings are shared widely across teams.

- Leverage AI for Second Opinions and Critical Thinking: Employ AI tools not just for answers, but as "critics," "contrarians," or "experts" to challenge assumptions and deepen understanding. This requires a deliberate prompting strategy.
  - Immediate Action: When tackling a complex problem, use AI role prompting (e.g., "Be my critic," "Argue against this") to explore alternative perspectives.

- Advocate for Rational Immigration Policies: Understand that attracting global talent is a critical component of technological leadership and economic prosperity. Resist policies that hinder this flow.
  - Immediate Action: Support industry initiatives or public discourse that advocates for sensible immigration policies for skilled workers.