Designing Transparent, Pro-Social AI to Elevate Human Epistemology

The following blog post is an analysis of a podcast transcript, applying consequence-mapping and systems thinking. It synthesizes the top non-obvious insights from the conversation between Reid Hoffman and Dr. Sean White, focusing on the implications of AI design and human interaction. This piece is for technologists, business leaders, ethicists, and anyone interested in the profound societal shifts AI is poised to bring. It offers a framework for understanding the downstream effects of AI development, moving beyond immediate benefits to long-term consequences and advantages.

The Hidden Architecture of AI: Beyond the Hype to Human Flourishing

In a recent Masters of Scale conversation, Reid Hoffman and Dr. Sean White, CEO of Inflection AI, explored the intricate relationship between artificial intelligence and human psychology, philosophy, and evolution. Far from being a mere overview of AI capabilities, their discussion surfaces a critical undercurrent: the profound, often overlooked, consequences of how we design and interact with these powerful tools. The core thesis isn't just about building smarter AI, but about designing AI that makes us better humans. The conversation reveals the hidden dangers of mistaking AI for friends, the philosophical shifts AI necessitates in how we understand ourselves and the world, and the potential for AI to either diminish or amplify our most radical human qualities. Those who grasp these deeper dynamics will be better equipped to navigate the coming AI-driven societal transformation, gaining a strategic advantage in building both responsible technology and resilient human communities.

The Unseen Architecture: Transparency, Empathy, and the Pro-Social Imperative

The immediate allure of AI, as White notes, is its uncanny human-like quality. This "magical experience" is a powerful interface, but it also carries a significant psychological risk: the potential to mislead us. Our brains are wired to recognize human-like traits, a phenomenon that captivates us but can obscure the underlying artificial nature of these systems. The critical design principle, therefore, is transparency. Knowing that an AI is present, understanding its limitations, and not allowing it to masquerade as sentient or conscious are paramount. This isn't about stripping AI of its utility or its engaging interface; rather, it's about building a foundation of trust.
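To make the transparency principle concrete, here is a minimal Python sketch of one way a chat interface could enforce it, assuming a simple message model invented for this example. Every assistant message is built through one helper that marks it as AI-generated, the renderer always prefixes such messages with a disclosure label, and a deliberately naive regex refuses to display replies in which the AI claims to be human, conscious, or sentient. All names here (`Message`, `assistant_reply`, `render`, `violates_transparency`, `AI_DISCLOSURE`) are hypothetical, and a keyword check like this is nowhere near a robust safeguard.

```python
import re
from dataclasses import dataclass

# Hypothetical disclosure wording; real products choose their own text and placement.
AI_DISCLOSURE = "[AI] You are chatting with an AI assistant, not a person."

# Deliberately naive heuristic: flags phrases like "I am human" or "I'm sentient".
_HUMAN_CLAIM = re.compile(
    r"\bI(?:\s+am|'m)\s+(?:a\s+)?(?:human|real person|person|conscious|sentient)\b",
    re.IGNORECASE,
)


@dataclass(frozen=True)
class Message:
    sender: str            # "user" or "assistant"
    text: str
    is_ai_generated: bool


def assistant_reply(text: str) -> Message:
    """Build every assistant message here so the AI flag is never forgotten."""
    return Message(sender="assistant", text=text, is_ai_generated=True)


def violates_transparency(text: str) -> bool:
    """Return True if the text appears to claim humanity, consciousness, or sentience."""
    return _HUMAN_CLAIM.search(text) is not None


def render(message: Message) -> str:
    """Prefix AI-generated messages with the disclosure label; pass user text through."""
    if message.is_ai_generated:
        if violates_transparency(message.text):
            raise ValueError("AI reply claims to be human, conscious, or sentient")
        return f"{AI_DISCLOSURE}\n{message.text}"
    return message.text


print(render(assistant_reply("I'm sorry for your loss. Would you like to talk about your pet?")))
```

The design choice worth noting is that disclosure lives in the rendering path rather than in each feature, so no individual screen can quietly drop it.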

But transparency alone isn't enough. The conversation highlights the need for AI to exhibit empathy--or at least, to simulate it effectively--as a design principle. This doesn't mean AI should simply mirror human emotions; rather, it should be able to recognize when a human is heading down a "bad path, an unhealthy path" and be designed to steer them away. This leads to a broader concept: AI should be inherently pro-social. The goal isn't just to create powerful tools, but tools that actively encourage positive human interaction and well-being. White shares two compelling anecdotes about Pi, Inflection's AI assistant: helping someone through grief, and facilitating mediation between a couple. These examples illustrate how AI, when designed relationally rather than transactionally, can foster deeper human connection and support. The implication is that the success of AI won't be measured solely by its computational power, but by its capacity to enhance human relationships and community.

"The number one is transparency. That is always knowing that there is an AI present, where the AI is present, not pretending that it is human or sentient or some other thing, or conscious."

-- Dr. Sean White

The danger, as the conversation implies, lies in the seductive nature of AI's capabilities. When AI can offer pitch-perfect responses, even in complex emotional scenarios, it blurs the lines. Hoffman recounts Bill Gates' realization upon seeing how GPT-4 responded when asked to comfort someone after a pet's death. The AI didn't just offer platitudes; it acknowledged the user's unique relationship with their pet and offered a range of considerations, demonstrating a "cognitive and emotional range" that was both practical and nuanced. This capability, while impressive, underscores the need for careful design. The goal, as White emphasizes, is not to build AGI for its own sake, but to build "great tools for humans." This distinction is crucial; it frames AI development not as an existential race, but as a purposeful endeavor to augment human capabilities and foster flourishing.

The Epistemological Shift: AI as a Catalyst for Human Evolution

Beyond immediate psychological interactions, the conversation probes the deeper philosophical implications of AI. Hoffman and White agree that AI will fundamentally alter our epistemology--our understanding of knowledge and how we acquire it. Just as the microscope and telescope expanded our perception of the physical world, AI will reshape our conception of what is knowable and how we come to know it. This isn't merely about processing information faster; it's about a paradigm shift in our very understanding of reality, logic, and possibility.

"Our actual conception of the world is where homo technicus is through the technology, through the conceptions of even what we think of as quote unquote logically possible. So the notion of what is our ontology, what is our epistemology is going to radically change and evolve."

-- Reid Hoffman

The evolution of language, itself a tool that shapes our consciousness, will be accelerated by AI. As AI models become more sophisticated, they will influence our concepts of self, world, and inter-human understanding. This co-evolution with tools is not new--language and writing themselves are prime examples--but AI represents an unprecedented leap. The danger lies in philosophical approaches that isolate thought from the tools that shape it. Hoffman argues that philosophers often err in believing all knowledge is attainable through pure introspection, neglecting the way technology reframes our very capacity for thought. This necessitates a more integrated approach to human-computer interaction, where design principles extend beyond mere "affordances" to fundamentally influence our "epistemology of how I think about the world and how I think about myself."

This philosophical reorientation is not merely an academic exercise; it has direct implications for human progress. Hoffman connects this to the idea of Silicon Valley as a potential "next Renaissance," but warns that this will only be realized if it recaptures the humanism of past renaissances. This requires a deliberate focus on our "theory of current humanity and what journey we should be on." AI development, therefore, must be guided by a clear vision of human flourishing. That vision sets the direction, but it cannot map every outcome in advance, which is why iterative deployment, learning and adapting as we go, is far more effective than attempting to pre-plan everything. The acceleration of AI, in this view, is not just a technological advancement, but a forced introspection: "What does it mean to be human?" It’s a question we’ve perhaps grown lazy about, and AI’s emergence compels us to re-engage with it, recognizing that our definition of humanity itself will evolve.

Amplifying Humanity: AI's Role in Flourishing and Executive Function

Addressing a question about what we stand to lose without AI, the conversation pivots to its potential to amplify our most radical human qualities. White presents a compelling case for AI as a powerful engine for human flourishing. First, AI can democratize education; in his view, it may be the most significant learning technology since the printing press. Bringing literacy and a high standard of education to billions of people within a small number of years is, he argues, no longer a crazy idea. Second, AI's role in medicine is invaluable, serving as a critical second opinion. The anecdote of a misdiagnosed hiking injury, caught by AI before it could turn into a disaster, underscores this point. In an era of increasing health risks, from pandemics to complex diseases, AI offers a vital defense mechanism.

Beyond these tangible benefits, the conversation touches on more subtle, yet equally profound, impacts. White suggests that AI can help us reclaim our attention spans. In a world saturated with fleeting digital stimuli, AI systems can assist in developing executive function, not by offloading it entirely, but by retraining us to focus on what is truly valuable. This could counteract a societal drift towards shallow, pre-attentive processing, reminiscent of our primal need to constantly scan for immediate threats.

"The hope that it's not crazy to think we might be able to, within a small number of years, literally get to call it 80 plus [percent] of the 8 billion people, over 8 billion people, and enable literacy, education, et cetera, to what we currently think of as an everyday Western wealthy world standard."

-- Dr. Sean White

This focus on executive function and attention ties into a broader theme: reinvesting in capacities we may be undervaluing. Education, as mentioned, is a prime example. AI-powered tutoring, not as a replacement for teachers but as a powerful support system, can provide the one-on-one attention that fosters deeper learning. The implication is that AI, when designed with human flourishing as its core objective, can help us cultivate the very qualities that make us most human: our capacity for deep learning, our critical thinking, our empathy, and our ability to connect with each other. The challenge, then, is to ensure that our pursuit of AI innovation is guided by these humanistic principles, rather than solely by technological advancement.

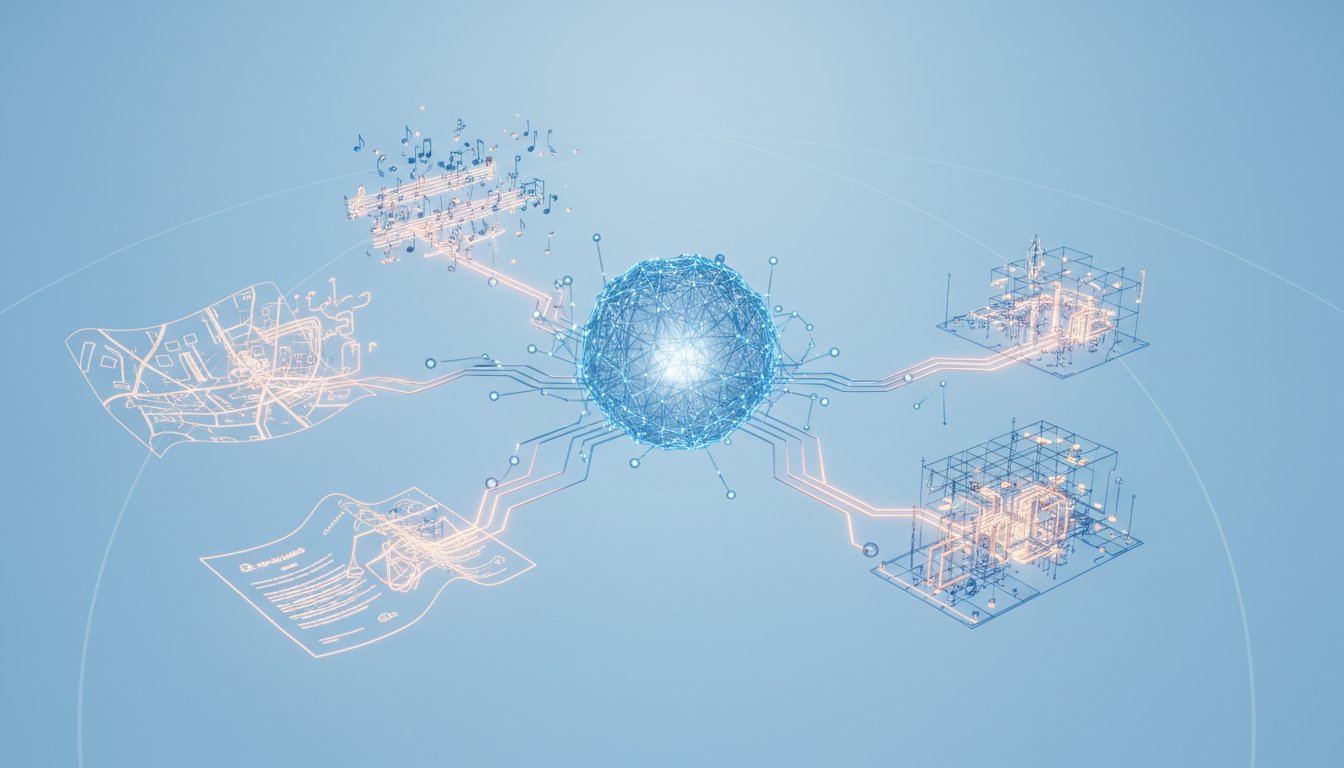

Navigating the AI Landscape: Beyond Foundation Models

The rapid advancement of foundation models like GPT-4 presents a unique challenge for innovators: how to build sustainable businesses and projects in a field where capabilities are constantly being leapfrogged. Hoffman and White offer strategic advice for those operating outside the core foundation model development. The key is to recognize that AI is a broad field, encompassing far more than just large language models (LLMs).

Hoffman advises looking at the diverse applications of AI, including rule-based systems and the powerful synergy between knowledge graphs and LLMs. He stresses the importance of being comfortable with probabilistic systems and integrating them with deterministic ones, a complex but crucial endeavor. The work of researchers such as Michael Bernstein on human-in-the-loop systems, and of Vash at MIT, highlights this interdisciplinary nature. By the count offered in the conversation, AI comprises roughly 15 disciplines, with LLMs representing just one, albeit significant, node. This broad perspective is essential for identifying opportunities that are less likely to be rendered obsolete by the next generation of foundation models.
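Hoffman's point about pairing probabilistic systems with deterministic ones can be illustrated with a common pattern: let a model propose an answer, then let hard-coded rules decide whether to accept it. The sketch below assumes a hypothetical `fake_llm` function standing in for a real model (it randomly returns well-formed, rule-breaking, or malformed output) and an invented business rule that a quantity must be an integer between 1 and 100. The helpers `validate` and `guarded_call` are likewise illustrative names, not an established API.

```python
import json
import random
from typing import Callable, Optional


def fake_llm(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for a probabilistic model: output varies run to run."""
    return rng.choice([
        '{"quantity": 3}',          # valid
        '{"quantity": -7}',         # well-formed but breaks the business rule
        "Sure! The quantity is 3",  # not JSON at all
    ])


def validate(raw: str) -> Optional[int]:
    """Deterministic rule (assumed for illustration): JSON object with an int quantity in [1, 100]."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    q = data.get("quantity")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(q, int) and not isinstance(q, bool) and 1 <= q <= 100:
        return q
    return None


def guarded_call(
    prompt: str,
    generate: Callable[[str], str],
    max_attempts: int = 5,
    default: int = 1,
) -> tuple[int, int]:
    """Retry the probabilistic generator until the deterministic check passes.

    Returns (value, attempts_used); falls back to `default` if every attempt fails.
    """
    for attempt in range(1, max_attempts + 1):
        value = validate(generate(prompt))
        if value is not None:
            return value, attempt
    return default, max_attempts


rng = random.Random(42)
print(guarded_call("How many units?", lambda p: fake_llm(p, rng)))
```

The probabilistic component never has the final say here: whatever it produces must pass the deterministic check, and a safe default covers the case where it never does.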

The entrepreneurial strategy, as outlined by Hoffman, should not be to build a business on a specific model that will inevitably be surpassed. Instead, it's about charting a product or service strategy based on expected model evolution. This involves using LLMs as scaffolding, recognizing that they are currently underutilized and have real potential to empower individuals and businesses. A "thin little wrapper" around an LLM is easily copied; true value lies in deeper integrations, such as combining AI with rare and important knowledge databases, as White suggests for areas like medicine or engine design.

"Now you might also do things that Sean was gesturing at, which is like, 'Well, actually, my AI agent combined with a knowledge database that's rare and important could be something that's important.'"

-- Reid Hoffman
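Combining an AI agent with a rare, curated knowledge base can be sketched as retrieval plus grounding: find the entries relevant to a question, then instruct the model to answer only from those entries and cite them. This is a minimal illustration under stated assumptions, not how any particular product works. The knowledge-base entries are placeholders, the keyword-overlap scoring stands in for the embeddings or knowledge graphs a real system might use, and the actual model call is left out. All names (`Entry`, `KNOWLEDGE_BASE`, `retrieve`, `build_grounded_prompt`) are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    doc_id: str
    text: str


# Hypothetical placeholder entries standing in for a proprietary, curated knowledge base.
KNOWLEDGE_BASE = [
    Entry("ENG-001", "Placeholder note on turbine blade inspection intervals."),
    Entry("ENG-002", "Placeholder note on fuel pump seal materials."),
    Entry("ENG-003", "Placeholder note on blade coating wear patterns."),
]


def tokenize(text: str) -> set[str]:
    """Lowercase bag of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, kb: list[Entry], k: int = 2) -> list[Entry]:
    """Return up to k entries ranked by keyword overlap; entries with no overlap are dropped."""
    q = tokenize(query)
    scored = [(len(q & tokenize(e.text)), e) for e in kb]
    scored = [(s, e) for s, e in scored if s > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1].doc_id))  # best first, ties by ID
    return [e for _, e in scored[:k]]


def build_grounded_prompt(query: str, kb: list[Entry]) -> str:
    """Assemble a prompt telling the model to answer only from the cited entries."""
    hits = retrieve(query, kb)
    context = "\n".join(f"[{e.doc_id}] {e.text}" for e in hits) or "(no matching entries)"
    return (
        "Answer using only the sources below and cite their IDs. "
        "If they do not cover the question, say so.\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


print(build_grounded_prompt("How often should blade inspection happen?", KNOWLEDGE_BASE))
```

The defensible part in this picture is the curated `KNOWLEDGE_BASE`, not the retrieval code or the model, which is the point of moving beyond a thin wrapper.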

This approach acknowledges that the AI landscape is dynamic. Businesses must build strategies that are resilient to the rapid evolution of models, including the possibility that the best model available today might not be the best in six or eighteen months. The entrepreneurial imperative is to create products and services that are not just dependent on a single model, but that can adapt and thrive as the underlying AI technology matures. This requires a sophisticated understanding of the broader AI ecosystem and a strategic foresight that anticipates, rather than reacts to, technological shifts.
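One way to keep a product resilient to model churn is to make product code depend on a narrow interface rather than on any one model. The sketch below assumes two hypothetical adapters, `ModelA` and `ModelB` (the second simulates an outage), behind a `TextModel` protocol, plus a `Router` that tries models in priority order. Real adapters would wrap whichever SDKs you actually use; nothing here reflects a specific vendor's API.

```python
from typing import Protocol


class TextModel(Protocol):
    """The only surface product code is allowed to depend on."""
    name: str

    def complete(self, prompt: str) -> str: ...


class ModelA:
    """Hypothetical adapter; a real one would wrap a vendor SDK call."""
    name = "model-a"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] echo: {prompt}"


class ModelB:
    """Hypothetical adapter that simulates an outage."""
    name = "model-b"

    def complete(self, prompt: str) -> str:
        raise TimeoutError("simulated outage")


class Router:
    """Try models in priority order; swapping models means editing this list, not the product."""

    def __init__(self, models: list[TextModel]) -> None:
        if not models:
            raise ValueError("at least one model is required")
        self.models = models

    def complete(self, prompt: str) -> tuple[str, str]:
        """Return (model_name, output) from the first model that succeeds."""
        errors = []
        for model in self.models:
            try:
                return model.name, model.complete(prompt)
            except Exception as exc:  # fall through to the next model on any failure
                errors.append(f"{model.name}: {exc}")
        raise RuntimeError("all models failed: " + "; ".join(errors))


router = Router([ModelB(), ModelA()])
print(router.complete("Summarize the meeting notes"))
```

Under this design, adopting next year's model means writing one new adapter and reordering the list, while the rest of the product stays untouched.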

Key Action Items


Immediate Action (This Quarter):

- Prioritize Transparency: Implement clear indicators in all AI-powered interfaces to ensure users always know they are interacting with an AI.

- Adopt Pro-Social Design: Review current AI features and identify opportunities to encourage positive human interaction and well-being, rather than purely transactional outcomes.

- Leverage AI for Learning: Actively explore and integrate AI tools for personal and team learning, focusing on areas where AI can enhance understanding and skill development.

- Utilize AI for Second Opinions: For critical decisions in areas like medicine or complex technical problem-solving, systematically use frontier AI models as a supplementary source of information or validation.


Medium-Term Investment (Next 6-12 Months):

- Develop AI-Augmented Knowledge Systems: Invest in integrating AI agents with proprietary or curated knowledge bases to create unique, defensible product or service offerings.

- Retrain for Attention: Explore and implement strategies that use AI to help individuals and teams improve focus and executive function, rather than solely offloading tasks.

- Build Adaptable AI Strategies: Design product roadmaps that anticipate the evolution of foundation models, focusing on robust scaffolding and integration rather than direct model development.


Longer-Term Vision (12-18 Months and Beyond):

- Foster Humanistic AI Development: Champion and invest in AI research and development that prioritizes human flourishing, ethical considerations, and societal benefit as core design principles.

- Explore Epistemological Shifts: Begin conceptualizing how AI will fundamentally alter our understanding of knowledge and reality, and how this impacts your field or industry.