Mitigating Existential Risks: Aging, Cosmic Threats, Nuclear War, and AI

TL;DR

- Aging is the most probable existential risk for individuals, yet it receives less attention than other threats due to societal habituation and a perceived lack of immediate sensory danger.

- NASA's successful asteroid-deflection mission is a tangible demonstration that humanity can mitigate cosmic threats, though public awareness of the achievement remains low.

- Space expansion, particularly through Mars colonization, serves as a crucial long-term civilizational resilience strategy against catastrophic events that could render Earth uninhabitable.

- Nuclear war remains a central catastrophic risk, with potential for rapid escalation and widespread destruction, underscoring the persistent need for arms control and diplomacy.

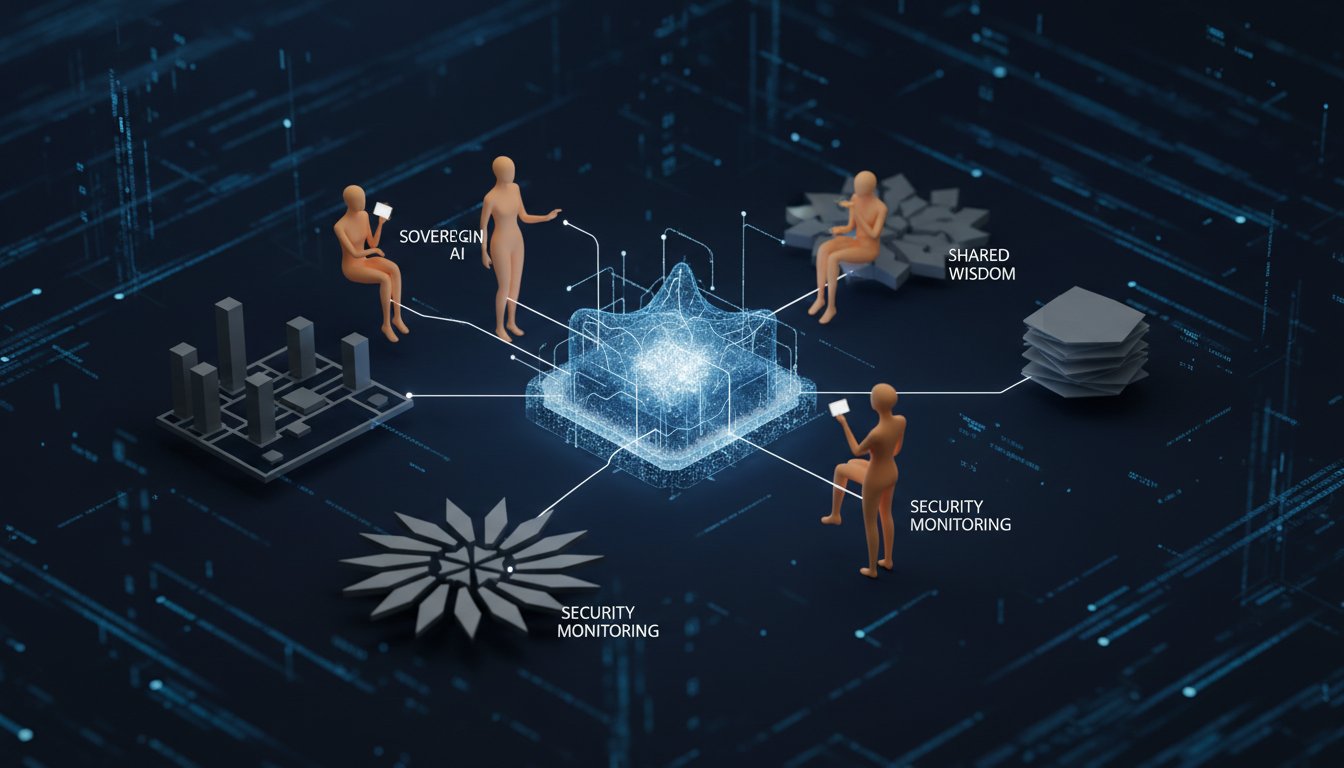

- AI risk, encompassing both uncontrolled AI and malicious misuse, presents a complex governance challenge due to inherent uncertainties and the difficulty of predicting emergent behaviors.

- Supervolcano eruptions pose a devastating threat through volcanic winters and atmospheric poisoning, with proposals for mitigation including long-term geothermal heat extraction for energy.

- The "thick-skinned weirdo" archetype, characterized by disagreeableness, open-mindedness, and resilience, is crucial for identifying and raising awareness of significant risks before they become widely accepted.

Deep Dive

Humanity faces a spectrum of global catastrophic risks, from the predictable inevitability of aging to the low-probability but high-impact threats of asteroid impacts, supervolcanoes, nuclear war, and artificial intelligence. While aging poses the most certain threat to individuals, its impact on species continuation is debated, unlike other existential risks that could lead to human extinction. The field dedicated to mitigating these "Big Things" is populated by resilient individuals, often described as "thick-skinned weirdos," who identify and address risks before they become widely accepted, akin to Cassandras.

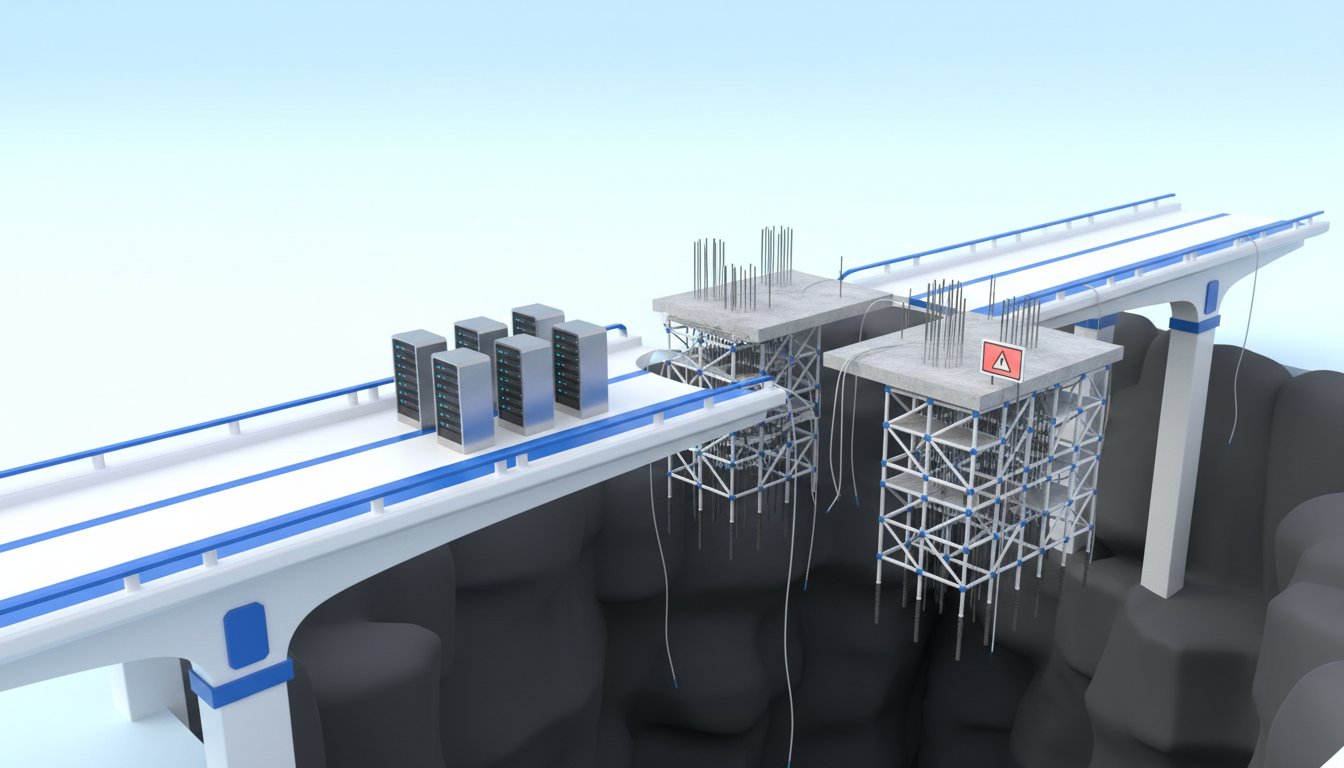

Addressing these risks requires a multifaceted approach. NASA's successful deflection of an asteroid demonstrates humanity's growing capacity to manage extraterrestrial threats, though larger, less predictable objects remain a concern. This underscores the long-term imperative for space expansion, such as establishing a self-sufficient Mars colony, as a hedge against planetary-scale disasters. As for natural threats, directly preventing supervolcano eruptions remains speculative, but managing their secondary effects through redundancy in critical infrastructure, such as submarine internet cables, is a pragmatic mitigation strategy. Clean-energy technologies, including nuclear and potentially fusion, are essential for mitigating climate change, and geothermal heat extraction might additionally moderate the risk of supervolcano eruptions.

Anthropogenic risks, such as nuclear war and AI, present distinct challenges. Nuclear war remains a potent threat due to the potential for escalation and the lack of effective defense against nuclear warheads, underscoring the critical role of diplomacy and arms control. Historical near-misses, like the actions of Stanislav Petrov and Vasili Arkhipov, highlight the importance of individual judgment in averting catastrophe. The risk posed by AI is more theoretical but equally profound. While current AI development has not yet led to the dramatic societal shifts some predicted, the potential for an intelligence superior to our own, free from biological limitations, necessitates careful governance, interpretability research, and international cooperation to prevent misuse or loss of control. The collective probability of these existential threats, or "p(doom)," remains a subject of intense debate, but a sober assessment suggests it is significantly higher than acceptable, demanding continued focus and proactive mitigation strategies.

Action Items

- Audit aging research: Identify 3-5 key areas for intervention and assess current progress toward mitigating aging as a catastrophic risk.

- Develop asteroid defense strategy: Outline 2-3 potential deflection methods and assess their feasibility for large, near-Earth objects.

- Implement AI safety protocols: Design and test 3-5 interpretability techniques to understand AI decision-making processes.

- Track nuclear risk indicators: Monitor 5-10 geopolitical events that could escalate to nuclear conflict and assess their potential impact.

- Research supervolcano mitigation: Investigate 2-3 proposals for managing geothermal heat around supervolcanoes and evaluate their technical viability.

Key Quotes

"I think that the best thing that can be done about it is nuclear arms control and diplomacy and I can't really think of a scenario where the technology goes away it's also very difficult to think of a technology that can with 100 accuracy remove the risk posed by an oncoming warhead they go incredibly fast they're hypersonic you have to be incredibly accurate and fast to have a hope of catching one of them let alone all of them and a hundred could be launching at once."

The author, Tom Ough, argues that nuclear arms control and diplomacy are the most effective ways to mitigate the risk of nuclear war. He highlights the difficulty of developing defensive technologies against hypersonic missiles, suggesting that negotiation is the primary defense against this existential threat.

"The thick skinned weirdo who is the the archetype who's most likely to call attention to some big risk before it's commonly accepted that it is a risk and so there's there's you know people who did that about asteroids and there are people who did that about ai and we might call them cassandras but certainly there's like a type of psychology where you're quite disagreeable and quite open minded and quite resilient and those typically are the first people within some some field maybe longevity as well at the moment you had to be quite thick skinned to to be part of that field I think."

Tom Ough describes a specific psychological archetype, the "thick-skinned weirdo," who is crucial in identifying and raising awareness about significant risks before they are widely recognized. He notes that individuals with traits like disagreeableness, open-mindedness, and resilience often pioneer these fields, enduring skepticism until their concerns are validated.

"I think that the best counter argument to what I've just said is that humanity is continuing despite aging existing right so it doesn't really matter much to the continuation of the species that three of us are going to die in fact it might be quite good in some ways because it creates room for it creates room for the next generation."

Tom Ough presents a counterargument to the idea that aging is an existential risk, acknowledging that humanity has persisted despite it. He notes the perspective that natural death and aging can be beneficial by making space for new generations, a viewpoint he contrasts with his own sympathy for longevity science.

"I think that the most pragmatic thing to do in respect to those is to have lots of redundancy in in our systems and so one good example of these are kind of submarine internet cables and I write in the book about how there's an awful lot of them going through the straits of Malacca which is quite close to a few active volcanoes and if a volcano erupts then there's a chance that places like that might have kind of turbidity currents under under the surface of the sea and that could mess with the cables so I would like to see us having you know more satellite internet and and more submarine cables that kind of thing so you know we can't stop these volcanoes going off but we we can sure that there's some mitigation ready."

Tom Ough suggests that redundancy in systems is the most practical approach to mitigating risks from natural disasters like volcanic eruptions. He uses submarine internet cables as an example, advocating for more satellite internet and diversified cable routes to ensure connectivity even if certain areas are affected by volcanic activity.

"I think that the ai safety movement has you know raised the alarm early and there's a lot of concern around the time that gpt 4 was unveiled and that the fear is that kind of you know within a short number of years life would be unrecognizable and that hasn't happened yet I I think for for many reasons principally because it's actually quite difficult to get far above human performance in in certain tasks because all the data is based on human performance and we we can't train ais in a very energy efficient way yet so so things haven't accelerated so far in the way that the kind of most worried safety people were forecasting."

Tom Ough observes that the AI safety movement raised concerns about rapid AI advancement early, citing the period around GPT-4's release as an example of fears that life would soon become unrecognizable. He explains that this acceleration has not yet materialized, principally because it is difficult to get far above human performance in certain tasks when the training data is based on human performance, and because AI training is not yet energy-efficient.

Resources

External Resources

Books

- "The Anti-Catastrophe League" by Tom Ough - Mentioned as the author's book about individuals and efforts to prevent humanity's extinction.

- "The Case Against Death" by Patrick Linden - Mentioned as a book by one of the podcast co-hosts.

- "Evigt ung: Min och människans dröm om odödligheten" by Peter Ottsjö - Mentioned as a book by one of the podcast co-hosts, available only in Swedish.

Articles & Papers

- "The Anti-Catastrophe League" (UnHerd) - Mentioned as the subject of Tom Ough's work and his role as Senior Editor.

People

- Tom Ough - Journalist and writer, Senior Editor at UnHerd, author of "The Anti-Catastrophe League," and co-host of the Anglofuturism podcast.

- Patrick Linden - Philosopher and author, co-host of the LEVITY podcast.

- Peter Ottsjö - Journalist and author, co-host of the LEVITY podcast.

- Nick Bostrom - Mentioned as a source for the taxonomy of existential risks.

- Stanislav Petrov - Mentioned for his role in preventing a potential nuclear exchange by judging a missile-attack warning to be a false alarm rather than reporting it as a real attack.

- Vasili Arkhipov - Mentioned as a submarine deputy commander who vetoed the launch of a nuclear torpedo during the Cuban Missile Crisis.

Organizations & Institutions

- UnHerd - Online magazine of culture and opinion where Tom Ough is Senior Editor.

- The Telegraph - Former employer of Tom Ough.

- NASA - Mentioned for its mission to deflect an asteroid.

- Pentagon - Mentioned in relation to concerns about nuclear weapons use during the invasion of Ukraine.

- IPCC - Mentioned for providing data and projections on climate change.

Websites & Online Resources

- reachlevity.com/subscribe - URL for subscribing to the LEVITY newsletter.

- reachlevity.com/t/desci - URL for LEVITY DeSci content.

Podcasts & Audio

- LEVITY - The podcast where this episode is featured.

- Anglofuturism - Podcast co-hosted by Tom Ough.

Other Resources

- Aging - Discussed as a catastrophic risk comparable to other existential threats.

- Asteroid impact - Discussed as a natural risk to humanity.

- Space expansion and Mars - Discussed as a component of long-term civilizational resilience.

- Climate change - Discussed as a significant risk, though potentially overestimated as an existential threat to humanity.

- Supervolcanoes - Discussed as a natural risk with potentially devastating consequences.

- Nuclear war - Discussed as a central catastrophic risk.

- AI risk - Discussed as a technological threat with governance challenges.

- p(doom) - Explained as the likelihood of all humanity being wiped out within a century.

- Mutual Assured Destruction (MAD) - Mentioned as a doctrine during the Cold War related to nuclear war.

- Geothermal energy - Discussed as a potential source of clean energy and a method for mitigating supervolcano risk.

- Fracking - Mentioned in relation to transferable technology and expertise for geothermal energy.

- Submarine internet cables - Discussed in the context of mitigating risks from volcanic activity.

- Satellite internet - Mentioned as a redundancy measure for communication systems.

- Nuclear energy - Discussed as a pragmatic way to address climate change.

- Fusion energy - Mentioned as an ambitious form of clean energy.

- Mechanistic interpretability - Discussed as a method for understanding the internal workings of AI.

- GPT-4 - Mentioned in relation to concerns about AI progress.