Agentic AI Security Risks: Expanding Attack Surface and Unpredictable Behavior

TL;DR

- Agentic AI systems, by increasing flexibility and dynamism, expand the attack surface, making them inherently harder to secure against malicious attacks and increasing the potential for severe consequences when misused.

- The complexity of modern AI systems, where even powerful LLMs function as black boxes with unpredictable behavior, creates a significant concern regarding security and the ability to guarantee safety for critical actions.

- Agentic AI's broad spectrum of capabilities, from static tools to dynamic runtime selection, increases the risk of vulnerabilities like prompt injection and jailbreaks, necessitating robust security measures.

- The rapid advancement of frontier AI, outpacing even expert predictions, highlights the need for continued exploration beyond current paradigms like next-token prediction to address efficiency and inherent limitations.

- Agentic AI systems, despite their power, exhibit "jagged intelligence," performing exceptionally well in some areas while making simple mistakes in others, indicating limitations in generalization and compositional complexity.

- The development of standardized, reproducible agent evaluation methodologies is crucial for community progress, as current model-level evaluations do not adequately capture the complexities of agent harnesses and task performance.

- The increasing power and privileges granted to agentic AI systems, coupled with a lack of understanding of their internal workings, create a substantial risk, underscoring the need for provable security guarantees.

Deep Dive

Dr. Dawn Song's research and leadership in responsible decentralized intelligence, particularly in agentic AI, highlight a critical shift in how we design and trust intelligent systems. The core argument is that as AI systems become more autonomous and capable, ensuring their safety, security, and ethical alignment is paramount to realizing their potential benefits. This requires moving beyond simple AI capabilities to developing systems that are not only intelligent but also inherently trustworthy, especially as they are increasingly tasked with critical actions.

The implications of this work extend across several domains. Firstly, the increasing sophistication of agentic AI, characterized by its flexibility, dynamism, and autonomy, dramatically expands the attack surface. This means that securing these systems themselves against malicious attacks becomes significantly more challenging than with current AI models, which already suffer from vulnerabilities like prompt injection and hallucinations. The complexity and opacity of underlying models like large language models (LLMs) exacerbate this, creating systems that are powerful and privileged but poorly understood, making them prone to unpredictable behavior and breakdown.

Secondly, the power of agentic AI presents a dual threat: not only are the systems themselves harder to secure, but attackers can also misuse these powerful agents to launch more sophisticated and impactful attacks on other systems and the broader internet. This raises the stakes for cybersecurity, transforming it from a field of managing existing threats to one of preventing emergent, AI-driven ones. The traditional advice to never trust user input, born from the relative simplicity of earlier systems, is now obsolete in a world where complex, distributed AI agents act on our behalf, potentially with access to sensitive data and credentials.

Finally, the very nature of these advanced AI systems challenges our ability to comprehend and manage them. The "black box" nature of LLMs, even when they exhibit impressive capabilities like solving complex math problems, is a significant concern. Their "jagged intelligence" means they can perform exceptionally well in some areas while failing on simple tasks, and their generalization capabilities remain limited, especially with increasing problem difficulty and compositional complexity. This lack of understanding, coupled with the granting of significant privileges to these agents, creates a fundamental tension between utility and safety. Dr. Song's work, therefore, emphasizes the urgent need for new approaches to develop agentic AI systems with provable security guarantees, moving beyond current paradigms to ensure that as these agents become more capable, they also become demonstrably trustworthy for critical decision-making and actions.

Action Items

- Audit agentic AI systems: Evaluate 3-5 core agent functionalities for security vulnerabilities and potential misuse by attackers.

- Design agent evaluation framework: Develop standardized, reproducible metrics for assessing agent performance and safety across diverse tasks.

- Implement agent workflows: Create custom agents to automate 3-5 repetitive or time-consuming personal research and administrative tasks.

- Measure agent generalization: Test 3-5 agent capabilities on novel problems with increased difficulty and compositional complexity to identify limitations.

Key Quotes

"actually when i started working in cybersecurity so first of all uh the field was really really small um i mean the conferences that you go to is only like maybe hundreds a couple hundred people -- and also when i started i just actually transitioned uh switched from being a physics major to computer science so so yeah so i actually did my you know undergrads in physics and i only switched to computer science in grad school and when i first switched i was trying to figure out what i want to focus on like you know the domain and i actually found security really interesting and also i like the combination of theory and practice so that's why i chose it"

Dr. Song explains that her initial entry into cybersecurity occurred when the field was nascent, characterized by small conferences and a significant personal transition from physics to computer science. This background highlights her early engagement with a developing area and her preference for interdisciplinary work combining theoretical and practical aspects.

"i think in some sense it does give me maybe like more freedom more a sense of courage is to really explore uh things that uh right i find interesting that uh i feel like can be uh impactful in the future so yeah so actually my trajectory after the macarthur fellowship has really even further broadened my research domain and i think uh i actually have been taking a quite unusual path than i think a lot of uh a lot of people"

Dr. Song reflects on how receiving the MacArthur Fellowship provided her with increased freedom and courage to pursue research interests that she believed could have future impact. She notes that this recognition led to a broadening of her research scope and an adoption of a less conventional academic path.

"i mean everybody has their own path everybody has their own preferences and people make contributions in their own ways and i wouldn't say people who are just working on their papers maybe you know in their offices are talkers i think they are i mean some of you know some great work actually came out of that you know that kind of settings as well and so yes i wouldn't say necessarily right one you know one approach is necessarily better than others but i think people they people have different aspirations people like to do different things"

Dr. Song emphasizes that individuals contribute to their fields through diverse paths and preferences, cautioning against labeling those focused on traditional academic work as mere "talkers." She asserts that valuable contributions can emerge from various settings and that different people have distinct aspirations and enjoy different types of work.

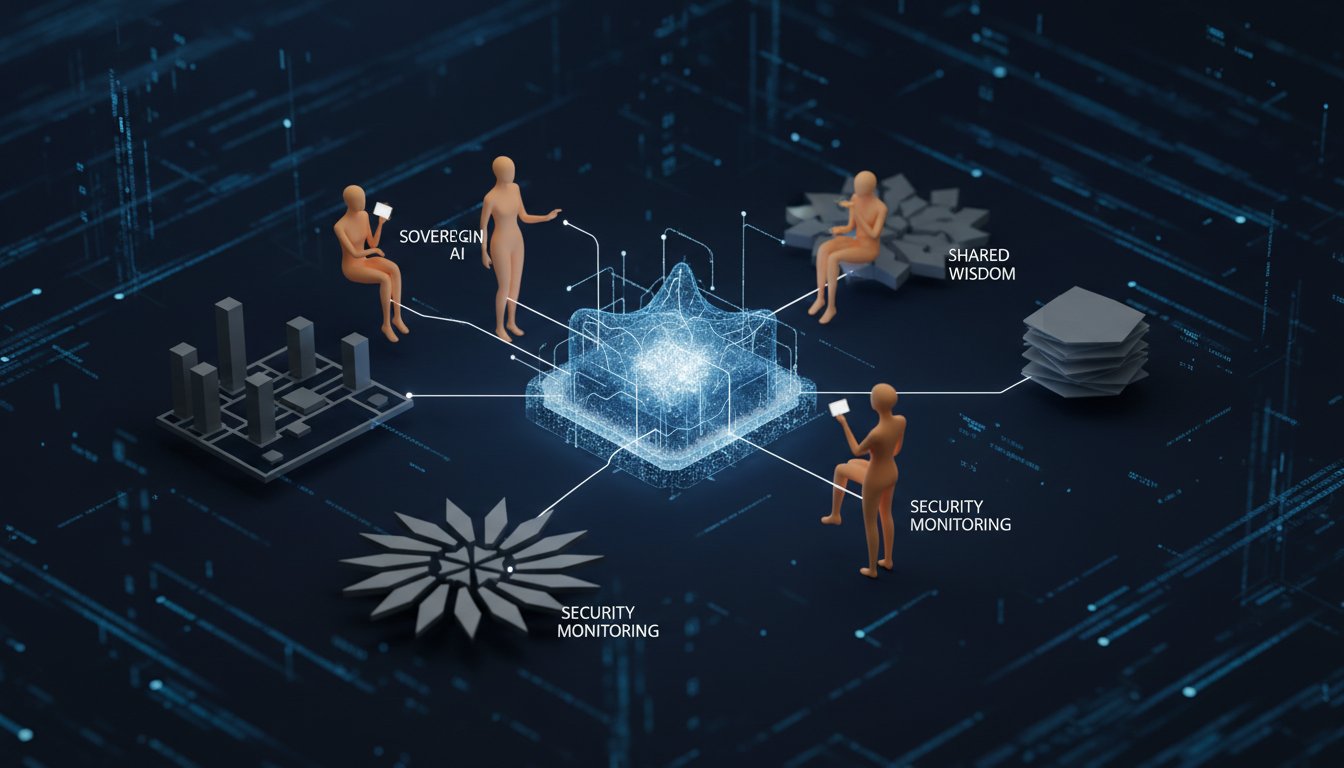

"the berkeley center rdi responsible decentralized intelligence uh works at the intersection of responsible innovation decentralization and intelligence as ai for example and i would say generative ai is actually a very good example of the kind of work that we focus on if you look at generative ai we wanted to be it's really important that it's safe and secure and responsible so we need to we want generative ai to help with responsible innovation and also right intelligence is a key part of generative ai and also we will hope that the generative ai future that we build it's not centralized it's decentralized"

Dr. Song defines the mission of the Berkeley Center for Responsible Decentralized Intelligence (RDI) as operating at the confluence of responsible innovation, decentralization, and intelligence, using generative AI as a prime example. She stresses the importance of safety, security, and responsibility in generative AI, aiming for a decentralized future rather than a centralized one.

"i mean the truth of the matter is nobody really knows but so far we are continuing to see still the fast progress of smaller capabilities and also the you know the agent development and so on so i mean of course i think we would love to see more exploration on that more diverse ideas and so on and and even the current paradigm still there are many limitations shortcomings you know not very efficient and so on and so on so so we do hope that we can continue to make further progress and identify new ideas new breakthroughs and so on"

Dr. Song acknowledges the uncertainty surrounding the future sufficiency of current AI paradigms, such as next-token prediction and reinforcement learning, for achieving advanced capabilities. She expresses a desire for more exploration and diverse ideas, noting that existing methods still have limitations and inefficiencies, and hopes for continued progress and new breakthroughs.

"and also when we talk about you know safety and security of agentic ai there are actually two main difference different aspects so one is whether the agent ai system itself is secure whether it can be you know secure against malicious attacks on the agent ai system itself so for example in the example that you mentioned you have a little coding agent that works on your you know ai files and so on you want to be careful that there's no malicious attacks attacking the coding agent so that the coding agent somehow misbehaves delete your database and then send out and also send out like sensitive data right from your files to the attacker and so on so this is one type of concern and then another type of concern is these agents as they become powerful attackers may misuse them as well to launch attacks you know to other systems to the internet to the rest of the world and so on"

Dr. Song outlines two primary aspects of safety and security for agentic AI: first, ensuring the agent AI system itself is secure against malicious attacks that could cause it to malfunction or leak data, and second, addressing the potential misuse of powerful agents by attackers to launch broader attacks on other systems or the internet. She highlights that both dimensions present significant challenges.

Resources

External Resources

Books

- "The Hitchhiker's Guide to the Galaxy" by Douglas Adams - Mentioned as an example of a fictional AI assistant.

Articles & Papers

- "OmegaDelta" - Mentioned as a benchmark developed to evaluate LLM generalization.

People

- Dr. Dawn Song - Guest, professor in computer science at UC Berkeley, co-director of the Berkeley Center on Responsible Decentralized Intelligence, MacArthur Fellow, Guggenheim Fellow, ACM Fellow, IEEE Fellow.

- Scott Hanselman - Host of Hanselminutes.

Organizations & Institutions

- UC Berkeley - Institution where Dr. Dawn Song is a professor.

- Berkeley Center for Responsible Decentralized Intelligence (RDI) - Center co-directed by Dr. Dawn Song, focusing on responsible innovation, decentralization, and intelligence.

- ACM (Association for Computing Machinery) - Partner organization for the episode.

- Google DeepMind - Sponsor of the agent evaluation competition.

Websites & Online Resources

- agentbeats.org - Website to explore agent beats code.

- rdi.berkeley.edu - Website to learn about AgentX and AgentBeats.

- agentic.ai.learning.org - Website for the agentic AI MOOC.

Other Resources

- Agentic AI - Concept discussed as the next frontier in AI, involving intelligent agents.

- Generative AI - Discussed as an example of work focused on by the Berkeley Center RDI.

- Deep Learning - Field Dr. Dawn Song focused on before it became popular.

- Next Token Prediction - Paradigm discussed as a powerful approach in AI.

- Reinforcement Learning (RL) - Approach discussed as helpful for improving model capabilities and agent capabilities.

- Transformer Architecture - Architecture discussed in relation to AI advancements.

- Large Language Models (LLMs) - Systems discussed in relation to their capabilities, limitations, and security concerns.

- Prompt Injection - An intrinsic vulnerability of LLMs.

- Jailbreak - An intrinsic vulnerability of LLMs.

- Stochastic Parrot Analogy - Analogy discussed in relation to LLMs.

- Jagged Intelligence - Term used to describe LLMs' uneven capabilities.

- Massive Open Online Course (MOOC) - Educational resource offered by Dr. Dawn Song on Agentic AI.

- Agent Evaluation - Focus of a competition organized for the MOOC.

- Agentified Agent Assessments (AAA) - A new paradigm developed for open, reproducible, and standardized agent evaluation.

- Toil - Term used in robotics to describe dull, dirty, or dangerous tasks that can be automated.