Proactive AI Governance Essential to Mitigate Sprawl Risks

TL;DR

- Uncontrolled "shadow AI" adoption by employees, driven by fear of missing out (FOMO), creates significant security risks and financial inefficiencies because usage goes unmonitored and teams buy redundant tools.

- Banning AI is ineffective; instead, organizations must provide a secure platform that enables employees to use AI tools productively while offering visibility and control over data usage.

- AI sprawl leads to substantial value destruction: an MIT report cited in the episode found that 95% of AI pilots fail to reach production, which represents billions in investment without tangible business returns.

- The rapid evolution of AI models necessitates an agile strategy, allowing organizations to easily swap providers and harness the latest capabilities at optimal costs, avoiding vendor lock-in.

- Agentic AI introduces novel security challenges: autonomous agents with broad permissions can reach multiple systems, and the ambiguity of natural-language instructions makes it difficult to track which data they access and which systems they interact with.

- Organizations must prioritize discovery to identify all AI deployments, including vendor-enabled AI and shadow AI, to apply necessary protections and prevent unknown risks from undermining security and ROI.

Deep Dive

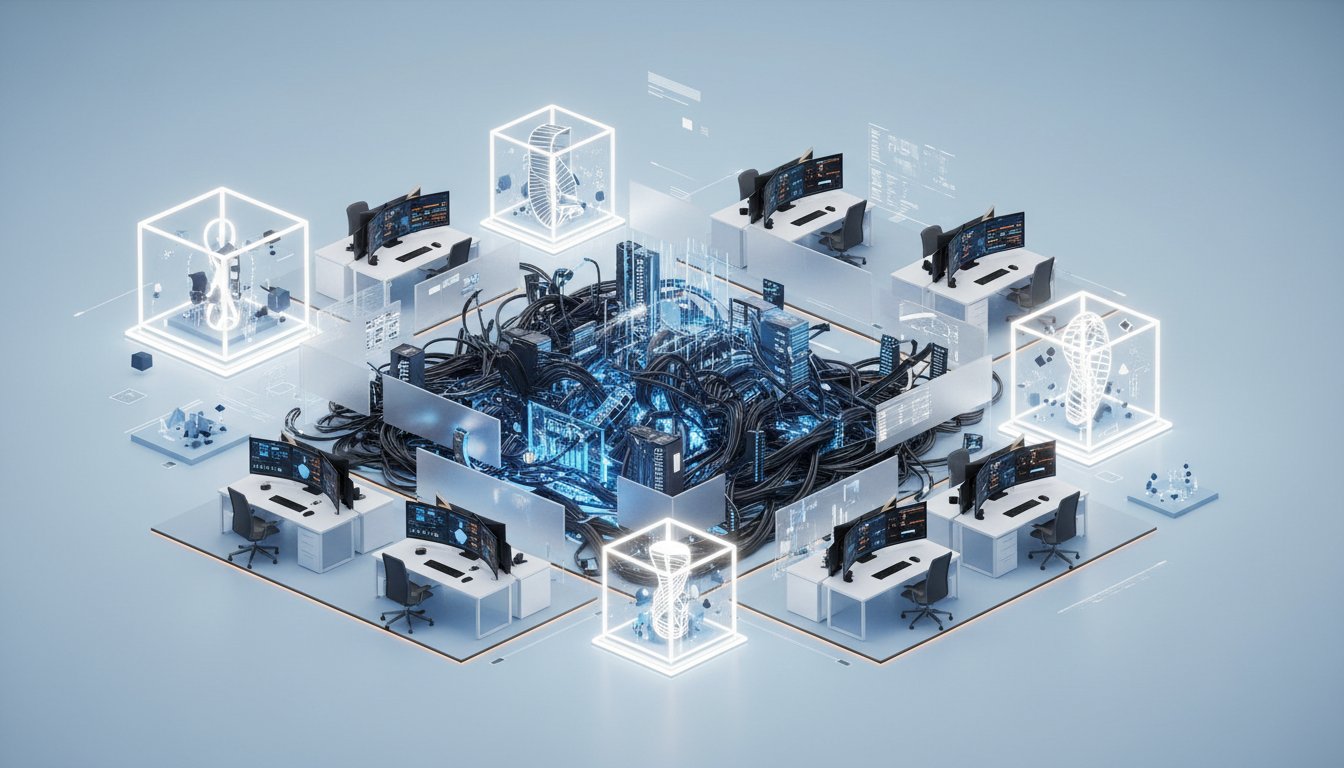

The proliferation of artificial intelligence (AI) within organizations, often described with the terms "AI sprawl" and "shadow AI," presents significant challenges to decision-makers attempting to manage its adoption, security, and return on investment. This uncontrolled expansion, driven by a fear of missing out and a desire for increased productivity, has led to a fragmented landscape of tools and models, creating substantial security risks and financial inefficiencies that undermine potential value.

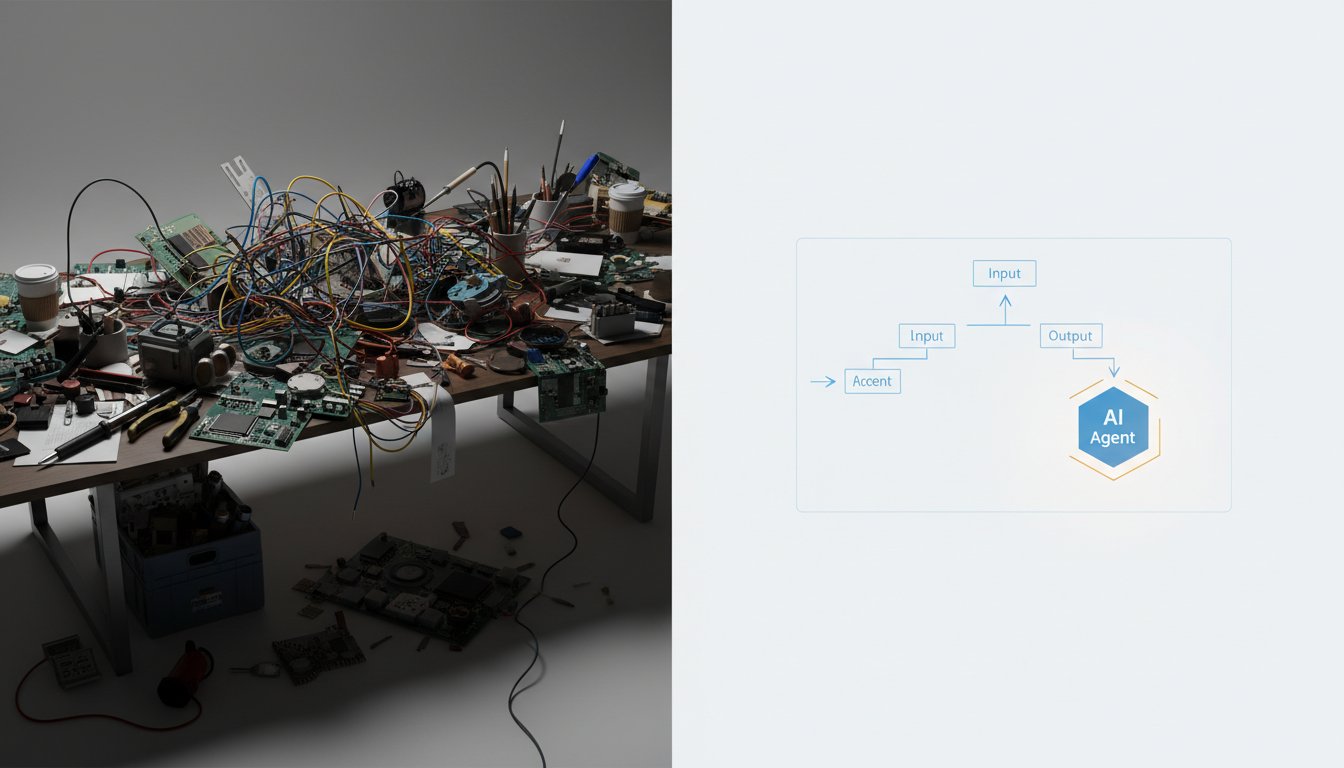

The core issue is the uncontrolled adoption of AI tools and models, which creates a complex and often insecure environment. This "AI spaghetti" arises because organizations, vendors, departments, and even individual employees have rushed to implement AI solutions, often without a unifying strategy or adequate security oversight. The result is a lack of visibility into what AI is being used, by whom, and how. For instance, employees who use unauthorized tools such as free versions of ChatGPT with company data introduce security risks because these platforms often lack confidentiality guarantees, potentially exposing sensitive information. Similarly, independently created "agents" can be granted overly broad permissions, allowing them to access more systems and data than intended, a problem that worsens when the employee who created the agent leaves the company and a security gap remains. Uncontrolled adoption also leads to financial waste, with many initial AI pilots failing to reach production, representing billions in unrecouped investment. Organizations are essentially "drowning in different invoices" from various vendors without clear insight into project performance or actual ROI.

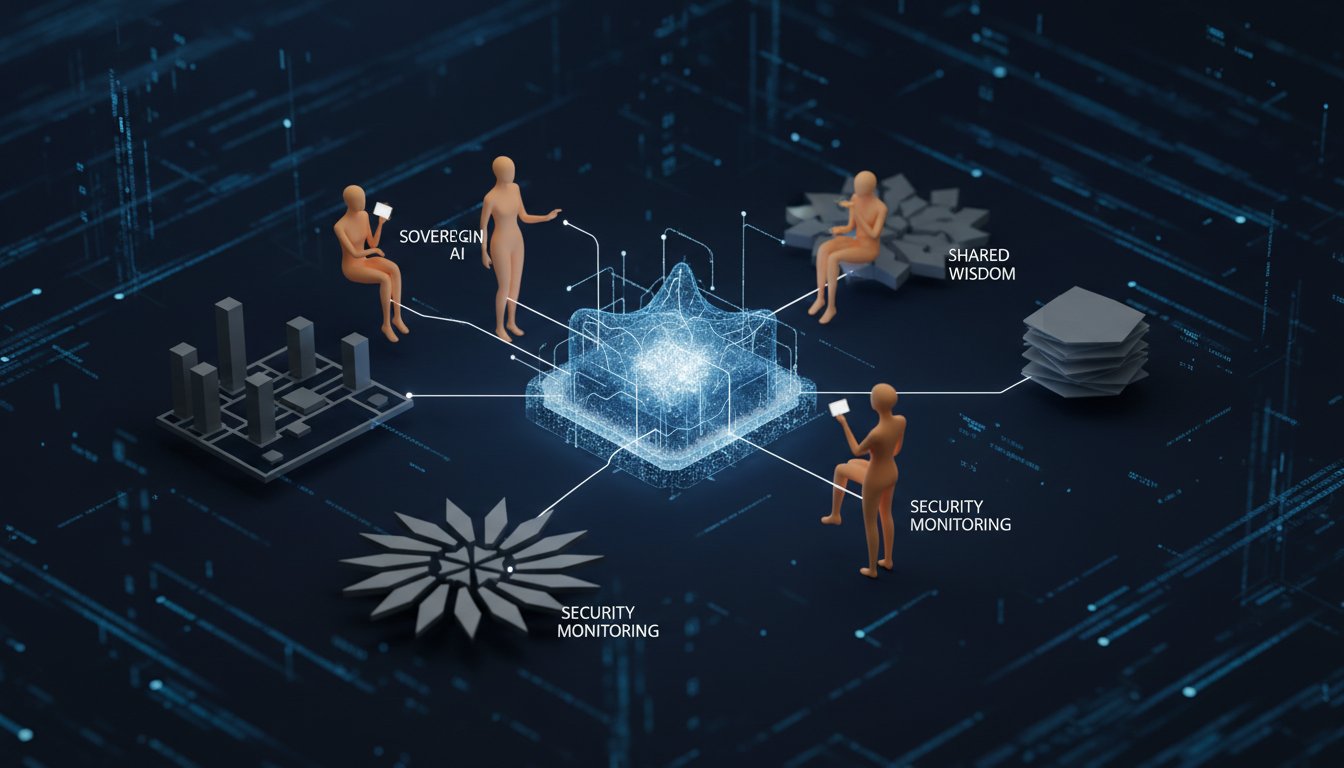

The implications of this sprawl extend to security, financial management, and strategic agility. From a security perspective, the lack of a central inventory of AI usage means organizations are vulnerable to prompt injection attacks and data breaches, as existing security stacks are not designed for the autonomous and interconnected nature of AI agents. The rise of agentic AI, where AI is given goals and autonomy to achieve them, coupled with the inherent ambiguity of natural language commands, creates new attack vectors. Furthermore, AI enables attacks at a scale and sophistication previously impossible, with swarms of agents capable of identifying and exploiting vulnerabilities rapidly.

Financially, the "duct tape AI" approach, where disparate tools are cobbled together, can create more long-term work than value. The inability to integrate and manage these tools effectively means that even productivity gains might be offset by the need to rewrite business processes, negating positive ROI.

Strategically, organizations risk vendor lock-in by becoming too dependent on specific AI providers. The rapid pace of AI innovation means models quickly become outdated or more expensive than newer alternatives. A modular approach, allowing for the agility to swap models and providers, is crucial for harnessing the latest advancements at the most efficient rates and ensuring business continuity against potential outages.
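One way to picture the modular approach is a thin routing layer that hides individual providers behind a common call signature, so that changing the preference order swaps providers without touching calling code. The sketch below is illustrative only: `ModelRouter`, `provider_a`, and `provider_b` are hypothetical names, and the providers are stand-in functions rather than real vendor SDK calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical: a provider is any callable that takes a prompt and returns text.
Provider = Callable[[str], str]


@dataclass
class ModelRouter:
    providers: Dict[str, Provider]
    order: List[str]  # preference order; edit this list to swap providers

    def complete(self, prompt: str) -> str:
        """Try providers in order and fall back to the next one on failure."""
        errors = []
        for name in self.order:
            try:
                return self.providers[name](prompt)
            except Exception as exc:  # e.g. outage, deprecated model, quota
                errors.append(f"{name}: {exc}")
        raise RuntimeError("all providers failed: " + "; ".join(errors))


def provider_a(prompt: str) -> str:
    # Stand-in for a vendor call; simulates an outage.
    raise ConnectionError("simulated outage")


def provider_b(prompt: str) -> str:
    # Stand-in for a second vendor call.
    return f"[provider_b] {prompt}"


router = ModelRouter(
    providers={"provider_a": provider_a, "provider_b": provider_b},
    order=["provider_a", "provider_b"],
)
print(router.complete("Summarize Q3 spend"))
```

Because callers depend only on `complete`, retiring a model or adding a cheaper one is a change to the registry and the `order` list, which is the kind of agility the episode argues for.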

The primary takeaway is that organizations must move beyond reactive adoption and implement proactive governance for AI. This requires investing in discovery to identify all AI usage, both sanctioned and unsanctioned, and then applying necessary controls and security measures. Without this centralized visibility and control plane, AI sprawl will continue to quietly destroy value through security risks, financial inefficiencies, and a loss of strategic flexibility, preventing organizations from realizing the true potential of AI.

Action Items

- Audit AI usage: Identify 5-10 unsanctioned AI tools or vendors currently in use across departments.

- Create AI inventory: Document all AI tools, models, and their associated data usage and permissions.

- Implement AI guardrails: Define and enforce security policies for 3-5 critical data types used with AI.

- Develop AI runbook: Outline procedures for managing AI model deprecation and vendor changes.

- Measure AI ROI: Track 3-5 key performance indicators for AI initiatives to assess value realization.
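The audit and inventory items above can be prototyped with a very small data model before any dedicated platform is in place. The following is a minimal sketch under stated assumptions: the `AIAsset` fields, the `audit` function, and the sample entries (`free-chatbot`, `launch-agent`, the permission strings) are all hypothetical and would need to be adapted to an organization's real systems.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class AIAsset:
    """One row in a hypothetical AI inventory."""
    name: str
    owner: str
    sanctioned: bool
    owner_active: bool = True
    data_types: Set[str] = field(default_factory=set)
    granted_permissions: Set[str] = field(default_factory=set)
    required_permissions: Set[str] = field(default_factory=set)


def audit(assets: List[AIAsset], restricted: Set[str]) -> List[str]:
    """Return human-readable findings for each risky inventory entry."""
    findings = []
    for asset in assets:
        if not asset.sanctioned:
            findings.append(f"{asset.name}: unsanctioned tool")
        if not asset.owner_active:
            findings.append(f"{asset.name}: owner {asset.owner} has left the company")
        exposed = sorted(asset.data_types & restricted)
        if exposed and not asset.sanctioned:
            findings.append(f"{asset.name}: restricted data in unsanctioned tool {exposed}")
        # Permissions granted beyond what the task needs.
        excess = sorted(asset.granted_permissions - asset.required_permissions)
        if excess:
            findings.append(f"{asset.name}: excess permissions {excess}")
    return findings


inventory = [
    AIAsset("free-chatbot", owner="dana", sanctioned=False,
            data_types={"customer_pii"}),
    AIAsset("launch-agent", owner="sam", sanctioned=True, owner_active=False,
            granted_permissions={"crm:read", "crm:write", "hr:read"},
            required_permissions={"crm:read"}),
]

for finding in audit(inventory, restricted={"customer_pii"}):
    print(finding)
```

The set difference between granted and required permissions is a simple way to surface the over-broad agent permissions discussed in the episode; a real deployment would pull these values from identity and access systems rather than a hand-maintained list.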

Key Quotes

"Because, yeah, some companies have approved AI tools, others don't, and sometimes you have entire departments using unsanctioned AI, kind of this shadow AI, that's casting a huge shadow over, "Are you even getting a return?" It is extremely difficult for decision-makers in organizations to not just know, "Hey, what's the right platform to use? Maybe take advantage of the best models regardless of the provider," but also how to do it in a secure way that can manage the chaos and actually lead to a positive ROI."

Jordan Wilson highlights the pervasive issue of "shadow AI," where departments or individuals use unapproved AI tools, creating a lack of visibility and making it difficult for decision-makers to track return on investment and ensure secure implementation. This situation complicates the landscape for organizations trying to leverage AI effectively and securely.

"Well, all of that sprawl has created the spaghetti mess that we're in now, where you've got all these different tools, each with their own management needs that may or may not be met, and that's huge security risks. You've got agents that are being created by employees, often being given employees' individual permissions, which are very broad versus maybe the agent was supposed to go do this one thing, but instead now it's been given all these capabilities to do a lot of other things that can create risk."

Kevin Kiley describes the resulting "spaghetti mess" from AI sprawl, where numerous tools require individual management, leading to significant security risks. He specifically points out the danger of employee-created AI agents being granted overly broad permissions, enabling them to perform actions beyond their intended scope and introduce potential vulnerabilities.

"It is often the well-intentioned employee that I mentioned to you, who, you know, they're really trying to move up, they've got a bandage to go build their piece of the business and drop some new product launch or whatever it might be. And they are not trying to do anything that would undermine security, but they're, of course, given the agent permissions, or maybe it's uploading sensitive data that in their context doesn't look that dangerous, but if a bad actor were to get to it, or your competition were to get to it, or someone else that could take advantage, that's where the risks really start to get introduced."

Kevin Kiley emphasizes that the primary concern with shadow AI often stems from well-intentioned employees seeking to increase productivity. He explains that these employees may inadvertently introduce risks by granting excessive permissions to AI agents or uploading sensitive data, which, while seemingly innocuous in their context, could be exploited by malicious actors or competitors.

"So back to your question, again, being able to provide almost like a model garden for your employees is an important part of this, where we can serve up a list of models that we consider to be trusted, or perhaps we'd already done the procurement work behind the scenes, so you don't have to worry about putting in a garden and, you know, all the different spend that's kind of gone out of control. Let's have a central view into where we're consuming, set up budgets, read and thresholds for notifications."

Kevin Kiley suggests that providing a curated "model garden" for employees is crucial for managing AI adoption. This approach involves offering a selection of trusted AI models, potentially with procurement already handled, and establishing a central platform for monitoring AI consumption, setting budgets, and configuring alerts to maintain control over spending and usage.
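The "model garden" idea, an approved list plus central budgets and notification thresholds, can be sketched in a few lines. This is an illustrative sketch only: the model names, the `Budget` and `SpendTracker` classes, and the 80% warning ratio are assumptions, not details from the episode or from any specific product.

```python
from dataclasses import dataclass
from typing import Dict

# Hypothetical allowlist of models that procurement has already approved.
APPROVED_MODELS = {"model-small", "model-large"}


@dataclass
class Budget:
    limit_usd: float
    warn_ratio: float = 0.8  # assumed threshold for an early warning


class SpendTracker:
    """Central view of AI spend per team, with simple threshold checks."""

    def __init__(self, budgets: Dict[str, Budget]) -> None:
        self.budgets = budgets
        self.spent = {team: 0.0 for team in budgets}

    def record(self, team: str, model: str, cost_usd: float) -> str:
        # Requests to models outside the garden are rejected and not billed.
        if model not in APPROVED_MODELS:
            return "blocked"
        self.spent[team] += cost_usd
        budget = self.budgets[team]
        if self.spent[team] >= budget.limit_usd:
            return "over_budget"
        if self.spent[team] >= budget.warn_ratio * budget.limit_usd:
            return "warning"
        return "ok"


tracker = SpendTracker({"marketing": Budget(limit_usd=100.0)})
print(tracker.record("marketing", "model-small", 50.0))
print(tracker.record("marketing", "model-large", 35.0))
print(tracker.record("marketing", "unvetted-model", 5.0))
```

In practice the returned status would feed a notification channel, and the allowlist would be maintained by whoever owns procurement and security review.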

"Well, the first thing I would point to is again, that there's this arms race of innovation happening right now, where month to month, sometimes week to week, you're seeing a new leader take that top inch mark for performance accuracy. We just all continuing to be blown away by how quickly this space is evolving. And it's performance, it's the fact that they may be shedding that old model that you got hooked on, or maybe the cost implications, you know, if you started on ChatGPT 4.0 last year and somehow try to stay on that, very powerful and really again, was so exciting when it first came out, but it was also quite expensive."

Kevin Kiley highlights the rapid pace of innovation in AI, where new models quickly surpass previous leaders in performance. He points out that staying agile is essential because models can become outdated, and costs can fluctuate significantly, as seen with the evolution from earlier versions of ChatGPT to newer, more cost-effective iterations.

"Well, the big problem that has been well documented in a lot of different studies, and most recently there was the MIT Gen AI Divides, I think it's called the State of AI in Business report that was really kind of an earthquake across the industry, and I suspect a lot of your audience has had a chance to see this or at least read parts of it, where they saw that 95% of these pilots never get to production. And that's staggering when you think about all the money that's being spent and the good intention behind all the tools that are being purchased, implemented, consultants, you know, it's a lot of ways, unfortunately, that thus far has just not been able to deliver real value to the business."

Kevin Kiley references a significant finding from the MIT State of AI in Business report, indicating that a staggering 95% of AI pilots fail to reach production. He notes that this represents a substantial amount of investment and effort that has not yet translated into tangible business value, underscoring a widespread challenge in AI implementation.

Resources

External Resources

Reports

- "The GenAI Divide: State of AI in Business" (MIT) - Report cited for the finding that 95% of AI pilots do not reach production.

People

- Kevin Kiley - CEO of Area, discussing AI orchestration and security platforms.

- Jordan Wilson - Host of the Everyday AI Show, discussing AI simplification and practical advice.

Organizations & Institutions

- Area - An AI orchestration and security platform company.

- MIT - Mentioned in relation to a report on AI in business.

- Hugging Face - Referenced as a platform with over 2 million models.

- OpenAI - Mentioned as a provider of AI models.

- Google - Mentioned as a provider of AI models.

- Microsoft - Mentioned as a partner and provider of AI models.

- Nvidia - Mentioned as a partner.

- Anthropic - Mentioned as a provider of AI models.

- Mistral - Mentioned as a provider of AI models.

Websites & Online Resources

- youreverydayai.com - Website for the Everyday AI Show, offering recaps, news, and a newsletter.

Other Resources

- ChatGPT - Its launch is mentioned as a significant AI moment that redefined work.

- Perplexity - Mentioned as an AI tool employees might use.

- Deepfake - Mentioned as a technology with associated risks.

- Prompt Injection - Mentioned as a security risk in AI.

- Indirect Prompt Injection - Mentioned as a security risk in AI.

- Agentic AI - Discussed in relation to AI capabilities, autonomy, and security risks.

- Shadow AI - Refers to the use of unsanctioned AI tools within organizations.

- AI Sprawl - The uncontrolled proliferation of AI tools and models within organizations.

- AI Spaghetti - An analogy used to describe the chaotic state of AI adoption.

- Duct Tape AI - Described as organizations using different models without a unifying platform.

- Model Garden - A concept for serving up a curated list of trusted AI models.

- Vendor Lock-in - The risk of becoming overly dependent on a single AI provider.

- Generative AI (Gen AI) - Discussed in relation to business ROI and strategy.