Balancing Speed and Stability: Mitigating Tech Debt from Software Development Shortcuts

TL;DR

- Business pressure to accelerate releases often leads to the adoption of "home-brewed" tooling and configuration utilities, which bypass established SDLC governance and scrutiny, increasing the risk of incidents and making root cause analysis difficult.

- AI code generation, while promising efficiency, can circumvent traditional peer review processes if not managed carefully, necessitating a re-emphasis on human oversight to prevent the introduction of subtle errors or security vulnerabilities.

- Shortcuts taken in software development, particularly in the MVP phase, directly contribute to technical debt by neglecting foundational platform design, API creation, and extensibility, leading to more complex and costly refactoring later.

- The emphasis on small, agile teams can lead to duplicated functionality and conflicting solutions across the organization, increasing tech debt due to a lack of overarching planning and clear top-down direction.

- Implementing a "golden path" with a supported set of tools and techniques, overseen by a central Center of Excellence, provides a scalable solution for large organizations to standardize processes and ensure consistent quality.

- Defining clear success criteria and failure modes during the planning phase, rather than solely focusing on lead time and deployment speed, allows teams to build with foresight and align with business objectives.

- Automation in release processes, combining control strategies with measurement, allows for risk-based release pacing, ensuring that riskier changes undergo slower, more controlled exposure while simpler changes can move faster.

Deep Dive

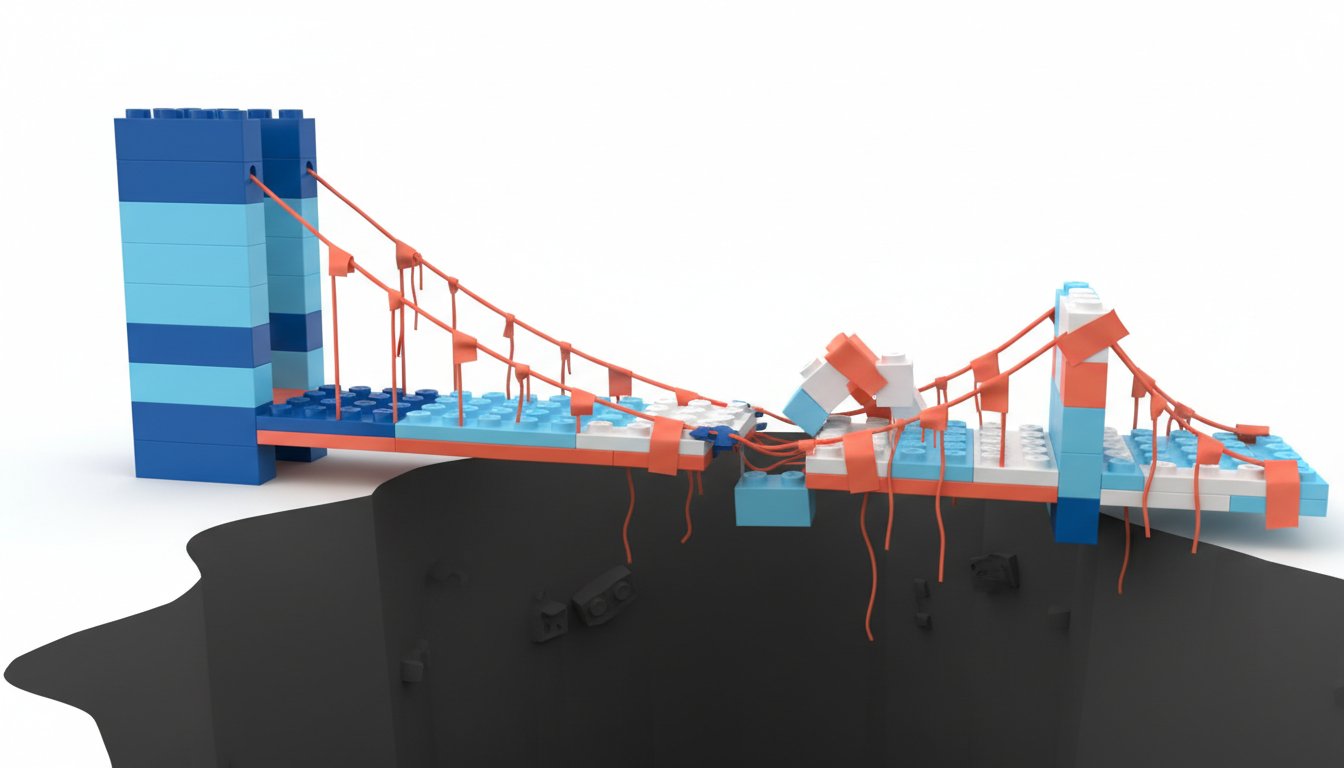

Shortcuts in software development, driven by business pressure for speed and enabled by new tools like AI code generators, often lead to technical debt and systemic instability. While engineers' natural inclination toward efficiency can be beneficial, unchecked "corner cutting" bypasses crucial safeguards in the software development lifecycle (SDLC), creating hidden risks that manifest as incidents and increased long-term costs.

The core issue lies in the tension between rapid feature delivery and maintaining system integrity. Home-grown tooling, configuration utilities that circumvent established processes, and AI-generated code that bypasses human review are prime examples of shortcuts. These shortcuts seem efficient in the short term, but because they avoid the upfront work of building robust, extensible, and secure systems, technical debt piles up. That debt raises the cost of future development and maintenance, as teams must spend more time unwinding the shortcuts or dealing with the incidents they cause. For instance, a duct-taped configuration utility might enable faster deployments but make root cause analysis during an incident far more difficult. Similarly, AI-generated code whose only reviewer is the engineer who prompted the AI has effectively skipped peer review: a critical human checkpoint is gone, increasing the risk that errors or vulnerabilities slip through.

The implications of these shortcuts extend to organizational structure and planning. The industry's shift towards small, nimble, agile teams with complete ownership, while fostering autonomy, can lead to duplicated functionality and a lack of overarching strategic direction. This "shipping your organizational chart" means solutions can become fragmented and conflicting, exacerbating tech debt. To counteract this, a renewed emphasis on thorough planning, defining success and failure metrics upfront, and establishing clear architectural principles is necessary. This doesn't mean returning to waterfall, but rather ensuring that individual features are understood within the broader system context. Companies that successfully balance speed with sustainability often do so through standardized "golden paths" supported by platform teams or by fostering a grassroots movement towards common practices that eventually gain centralized ownership. These approaches, akin to Jeff Bezos's mandate for everything to be a platform with defined inputs and outputs, enable faster, more reliable progress in the long run by making systems more interoperable, observable, and replaceable.

Ultimately, the most effective way to argue for sustainable release processes against the pressure for speed is through smart automation and a clear understanding of business value. By categorizing releases by risk level and implementing automated control and measurement strategies, organizations can ensure that low-risk changes move quickly while riskier, more critical changes undergo slower, more controlled exposure. This requires upfront investment in reusable metrics and control mechanisms, such as a centralized observability strategy. This "slow is fast" approach, backed by data and a clear explanation of how current practices protect customers and maintain uptime, provides a defensible position against leadership-driven changes that could introduce instability. With tooling, and especially AI, evolving rapidly, reasoning from first principles rather than from specific tools is key.
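Risk-based release pacing can be pictured as a mapping from release category to rollout schedule. The following is a minimal Python sketch, assuming three hypothetical risk tiers, an illustrative classifier, and made-up exposure percentages and soak times; none of these values come from the podcast or from any specific product.

```python
from dataclasses import dataclass
from enum import Enum


class Risk(Enum):
    # Hypothetical tiers; a real taxonomy would be the team's own.
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class RolloutStep:
    percent: int       # share of traffic exposed at this step
    soak_minutes: int  # time spent watching metrics before the next step


# Illustrative control strategies: riskier changes get more steps and longer soaks.
ROLLOUT_PLANS: dict[Risk, list[RolloutStep]] = {
    Risk.LOW: [RolloutStep(100, 0)],
    Risk.MEDIUM: [RolloutStep(10, 30), RolloutStep(50, 30), RolloutStep(100, 0)],
    Risk.HIGH: [
        RolloutStep(1, 120),
        RolloutStep(5, 240),
        RolloutStep(25, 480),
        RolloutStep(100, 0),
    ],
}


def classify(touches_schema: bool, touches_pii: bool, lines_changed: int) -> Risk:
    """Hypothetical classifier: schema or PII changes are always high risk."""
    if touches_schema or touches_pii:
        return Risk.HIGH
    if lines_changed > 200:
        return Risk.MEDIUM
    return Risk.LOW


def minimum_rollout_minutes(risk: Risk) -> int:
    """Lower bound on time to full exposure, ignoring pauses and rollbacks."""
    return sum(step.soak_minutes for step in ROLLOUT_PLANS[risk])
```

For example, `minimum_rollout_minutes(classify(touches_schema=True, touches_pii=False, lines_changed=5))` returns 840, while a small low-risk change goes straight to 100% exposure. Keeping the plans as data rather than code makes them easy to review and adjust as the team learns.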

Action Items

- Audit configuration management utilities: Identify 3-5 home-brewed tools and assess their potential for incident causation or security bypass.

- Implement AI-generated code review process: Require at least two human reviewers for all AI-generated code to prevent unchecked submissions.

- Create release automation strategy: Define categories for releases based on risk levels and architectural areas, establishing corresponding control and measurement practices.

- Develop feature success and failure criteria: During planning, define specific metrics for success and identify potential failure modes for new features.

- Build observability wrapper: Create an internal platform around observability standards (e.g., OpenTelemetry) to allow for flexible vendor and strategy changes.
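The observability wrapper idea can be sketched as an internal facade: services call one small interface, and the vendor or exporter behind it can be swapped in a single place. This is a minimal, hypothetical Python sketch using only the standard library; it does not call the OpenTelemetry API. In practice the backend would more likely wrap an OpenTelemetry SDK exporter, and all class and metric names here are illustrative assumptions.

```python
import json
import sys
from typing import Protocol


class MetricsBackend(Protocol):
    """Contract every backend satisfies; call sites only ever see this."""

    def record(self, name: str, value: float, attributes: dict[str, str]) -> None: ...


class InMemoryBackend:
    """Test double that keeps every data point in a list."""

    def __init__(self) -> None:
        self.points: list[tuple[str, float, dict[str, str]]] = []

    def record(self, name: str, value: float, attributes: dict[str, str]) -> None:
        self.points.append((name, value, dict(attributes)))


class StdoutJsonBackend:
    """Stand-in for a real exporter: writes one JSON line per data point."""

    def record(self, name: str, value: float, attributes: dict[str, str]) -> None:
        line = {"name": name, "value": value, "attributes": attributes}
        sys.stdout.write(json.dumps(line) + "\n")


class Telemetry:
    """Hypothetical internal facade. Swapping vendors means swapping the backend."""

    def __init__(self, backend: MetricsBackend, service: str) -> None:
        self._backend = backend
        self._service = service

    def record(self, name: str, value: float, **attributes: str) -> None:
        # Attach the attributes every signal must carry (here, just the service).
        self._backend.record(name, value, {"service": self._service, **attributes})
```

A service would construct `Telemetry(InMemoryBackend(), service="checkout")` in tests and a production backend elsewhere; the call sites, such as `telemetry.record("checkout.payment.latency_ms", 42.0, route="/pay")`, stay the same either way.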

Key Quotes

"Some of the best engineers out there are fundamentally lazy, in that they will take the path of least resistance. You know, if you are measuring something, they will try to gamify that measurement, and as long as the reports look good, as long as it's easy and they're not getting bothered, they are going to take whatever shortcut they want. So yeah, happy to get into specifics there, but it's like water, right? That's going to flow wherever it's easiest to go."

Tom Totenberg argues that engineers often take the path of least resistance: if something is being measured, they will try to game that measurement, and as long as the reports look good and nobody bothers them, they will take whatever shortcut is easiest. Totenberg compares this tendency to water flowing wherever it is easiest to go.

"A concrete example of that is stuff like configuration management. People have recognized the value of decoupling deployments, which is the technical act of putting code into production or wherever you're deploying it, right, from releasing, which is the business decision to expose new features and functionality to end users. Right, those are two separate things: deployment versus release. And so I have seen so many weird, cobbled-together, arcane, strange configuration utilities out there that do operate in runtime, that allow you to deploy darkly, but then, oh my gosh, if anything goes off the rails, it's an incident. It's so tough to navigate and figure out the underlying root cause."

Totenberg highlights configuration management as a specific area where shortcuts can lead to problems, particularly when custom, "cobbled together" tools are used to decouple deployment from release. He explains that while these tools might allow for dark deployments (deploying code without making it visible to users), they can become difficult to troubleshoot during incidents, making root cause analysis challenging.
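To make the deployment/release split concrete, here is a minimal Python sketch of a runtime flag check that also records who flipped which flag, when, and why. It assumes a hypothetical in-process `FlagStore` and a hypothetical `new-banner` flag; it is not LaunchDarkly's SDK or any vendor's API. The audit trail is the point of contrast with the cobbled-together utilities described above: during an incident, responders can list the release decisions made inside the incident window.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class FlagChange:
    flag: str
    enabled: bool
    changed_by: str
    reason: str
    at: datetime


@dataclass
class FlagStore:
    """Hypothetical flag store: code ships dark and is released by a runtime flip."""

    state: dict[str, bool] = field(default_factory=dict)
    audit_log: list[FlagChange] = field(default_factory=list)

    def is_enabled(self, flag: str) -> bool:
        # Unknown flags default to off, so newly deployed code stays dark.
        return self.state.get(flag, False)

    def set(self, flag: str, enabled: bool, changed_by: str, reason: str) -> None:
        # Record every release decision with who, why, and when.
        self.state[flag] = enabled
        self.audit_log.append(
            FlagChange(flag, enabled, changed_by, reason, datetime.now(timezone.utc))
        )

    def changes_since(self, since: datetime) -> list[FlagChange]:
        """Release decisions made at or after `since`, e.g. an incident's start."""
        return [change for change in self.audit_log if change.at >= since]


def render_banner(store: FlagStore) -> str:
    # Both code paths are deployed; the flag decides which one users see.
    if store.is_enabled("new-banner"):
        return "new banner"
    return "old banner"
```

Deploying the `new-banner` code path changes nothing for users; calling `store.set("new-banner", True, changed_by="alice", reason="begin release")` is the release, and it leaves a record behind.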

"Something that I saw at a customer was they didn't modify their peer review process. So a human, so Ryan, you could go tell Claude, 'Hey, go generate some code, work on this thing,' and then great, it's going to get a peer review. Ryan, you're a peer, you can review that. So you can tell it to create something, the AI creates the code, you review it, no other human sees that. And so being able to even circumvent that shortcut, even in this forward-thinking organization, of saying, okay, if it's AI-generated code, we need at least two humans, because that will make sure that one person isn't going to be the person who shoves something out the door with the assistance of Claude or Gemini or whatever LLM you're relying on."

Totenberg describes a concerning shortcut he observed at a customer that had not adapted its peer review process for AI code generation. An engineer could prompt an LLM to generate code and then act as the "peer" reviewer of that same code, so no other human ever saw it. Totenberg suggests that requiring at least two humans on AI-generated code closes this gap, ensuring that no single person can push unvetted code out the door with an AI assistant's help.
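A merge gate that encodes this rule might look like the following minimal Python sketch. It assumes a hypothetical `ChangeRequest` record in which the person who prompted the AI is recorded as the author and an `ai_generated` flag is set by tooling or by the author. It takes the stricter reading of the action item: AI-generated changes need two approvers, neither of whom is the prompter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeRequest:
    author: str                # who opened the change (and prompted the AI, if any)
    ai_generated: bool         # hypothetical flag set by tooling or by the author
    approvers: frozenset[str]  # humans who approved the change


def required_approvals(change: ChangeRequest) -> int:
    # Assumed policy: AI-generated changes need two independent humans.
    return 2 if change.ai_generated else 1


def may_merge(change: ChangeRequest) -> bool:
    # The author never counts toward their own approvals, so whoever prompted
    # the model cannot be the only human who looks at its output.
    independent = change.approvers - {change.author}
    return len(independent) >= required_approvals(change)
```

Under this sketch, Ryan approving code he prompted Claude to write counts for nothing; the change merges only after two other people approve it.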

"So tech debt, I think, is a result of shortcuts, right? Shortcuts can absolutely lead to tech debt, and tech debt comes in multiple forms, right? Whether this is something like wrapping code in some extra debugging observability, wrapping code in a feature flag, or tech debt can also absolutely be the concept of someone trying to get the MVP out the door without thinking about the underlying fundamental platform that maybe should support that feature."

Totenberg frames technical debt as a consequence of shortcuts. He explains that it takes several forms, from wrapping code in extra debugging, observability, or feature flags to, more fundamentally, pushing a Minimum Viable Product (MVP) out the door without considering the underlying platform that should support the feature over the long term.
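One way to keep flag-wrapping debt visible, rather than letting it quietly accumulate, is to give each temporary flag an owner and a removal date and regularly report the ones that have overstayed. This is a hypothetical Python sketch with made-up field names; the podcast does not prescribe this mechanism.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class FlagRecord:
    key: str
    owner: str
    remove_by: date  # hypothetical field: when the flag should be deleted from code


def stale_flags(flags: list[FlagRecord], today: date) -> list[FlagRecord]:
    """Flags past their removal date, oldest debt first."""
    return sorted(
        (flag for flag in flags if flag.remove_by < today),
        key=lambda flag: flag.remove_by,
    )
```

Running this report on a schedule and routing each result to its owner turns "we'll clean it up later" into a tracked work item.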

"Now, I'm not saying that we need to go back to the '90s and early 2000s and, you know, create these waterfall and massive Gantt charts or anything, but what we should be doing is taking the time upfront to be able to not just define what is this individual thing that we are building, but where does this fit into the overall picture. So at least do that, so that we're not doing things like duplicating functionality that another team may have already built. Is this something that could be reused? Great, that is time well spent. If it takes you one and a half times to build this in an extensible way, then that is better than the 2x time, from a business perspective, that it would have taken to build it twice."

Totenberg advocates for more intentional planning without reverting to waterfall methods. He emphasizes that taking time upfront to understand how an individual feature fits into the larger picture prevents duplicated effort and promotes reuse. In his example, building something extensibly at 1.5 times the effort is still cheaper, from a business perspective, than the 2x cost of building it twice.
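The arithmetic behind the 1.5x versus 2x comparison can be written out. Let $C$ be the cost of building the feature once for a single team; the last line additionally assumes, as a simplification not stated in the podcast, that each extra team reuses the extensible build at no additional cost.

```latex
\begin{aligned}
\text{two teams build it separately:}\quad & 2C \\
\text{one team builds it extensibly:}\quad & 1.5C \\
\text{savings:}\quad & 2C - 1.5C = 0.5C, \quad \frac{0.5C}{2C} = 25\% \\
n \text{ teams need it:}\quad & nC > 1.5C \iff n \ge 2 \quad (n \in \mathbb{N})
\end{aligned}
```

Under that assumption, the extensible build pays off as soon as a second team needs the functionality, and the savings grow with every additional consumer.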

"So for each of these different categories of releases, we should be able to have not just the control strategy but the measurement strategy, right? So how do you actually know, how will you confirm that this thing is going well? And there are likely some pretty good reusable metrics. This is actually one of the reasons that I was talking about a centralized observability strategy before. It's like, if there's high-level things that you care about, is the overall system actually up or not, which is, you know, we've got status pages, and we should be able to see if that microservice is responsive or not, that sort of thing, we should be able to reuse these. But they're not going to be the same signals for every single change category, right?"

Totenberg stresses the importance of both control and measurement strategies for different release categories. He explains that reusable metrics, often facilitated by a centralized observability strategy, are crucial for confirming that a release is performing well. Totenberg notes that while high-level system status is important, specific signals will vary depending on the nature of the change being released.
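Pairing each category's control strategy with a measurement strategy could look like the following: a reusable set of system-level guardrails shared by every release, plus signals specific to the kind of change. This is a minimal Python sketch; the category names, metric names, and thresholds are hypothetical placeholders, not recommendations.

```python
from dataclasses import dataclass
from typing import Callable

# A check takes a snapshot of current metrics and returns True when healthy.
Check = Callable[[dict[str, float]], bool]


@dataclass(frozen=True)
class Guardrail:
    name: str
    check: Check


# Reusable, system-level signals applied to every release category.
SHARED: list[Guardrail] = [
    Guardrail("service responsive", lambda m: m["availability"] >= 0.999),
    Guardrail("error rate", lambda m: m["error_rate"] <= 0.01),
]

# Category-specific signals: a schema change is judged on different evidence
# than a UI change.
BY_CATEGORY: dict[str, list[Guardrail]] = {
    "ui": [Guardrail("checkout conversion", lambda m: m["conversion_delta"] >= -0.02)],
    "schema": [Guardrail("write failures", lambda m: m["db_write_failures"] == 0)],
}


def evaluate(category: str, metrics: dict[str, float]) -> list[str]:
    """Names of failed guardrails; an empty list means the rollout may continue."""
    guardrails = SHARED + BY_CATEGORY.get(category, [])
    return [g.name for g in guardrails if not g.check(metrics)]
```

A rollout controller would call `evaluate` at the end of each soak period and pause or roll back whenever the returned list is non-empty.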

Resources

External Resources

Books

- "The Most Dangerous Game" - Mentioned as a parallel to the topic of dangerous shortcuts.

- "The Bezos Mandate" - Amazon's internal API mandate rather than a book; mentioned in relation to Jeff Bezos's directive for everything to be a platform with defined inputs and outputs.

People

- Bill Gates - Mentioned in relation to an apocryphal quote about giving tasks to lazy people.

- Elon Musk - Mentioned in relation to his takeover of Twitter and subsequent changes.

- Jeff Bezos - Mentioned for his directive that everything needs to be a platform.

- Boris Gorlick - Awarded a "Great Question" badge for his question about removing handlers from loggers in Python's logging module.

Organizations & Institutions

- LaunchDarkly - Mentioned as the company where Tom Totenberg is Head of Release Automation.

- Stack Overflow - Mentioned as the host and publisher of the podcast and blog.

- AWS (Amazon Web Services) - Mentioned as a product that evolved from Jeff Bezos's platform directive.

- Twitter - Mentioned in the context of Elon Musk's takeover and subsequent changes.

- New Germany (car manufacturer) - Mentioned as an example of a company where everyone could see the product line.

Websites & Online Resources

- LaunchDarkly.com - Mentioned as the company website.

- LinkedIn - Mentioned as a platform to find Ryan Donovan and LaunchDarkly.

- Twitter - Mentioned as a platform to find LaunchDarkly.

- YouTube - Mentioned as a platform to find LaunchDarkly.

Other Resources

- AI Code Gen - Mentioned as a technology where cautious and aggressive implementation strategies exist.

- AI-generated code - Discussed in the context of peer review processes.

- Value Stream Management - Mentioned as a concept where everyone in an organization can see the product line and understand the business purpose.

- OpenTelemetry (OTel) - Mentioned as an open standard framework and language around observability (metrics, errors, logs, traces).

- Microservices - Discussed as primarily an organizational feature rather than an engineering feature.

- MVP (Minimum Viable Product) - Mentioned in the context of shortcuts and tech debt.

- SDLC (Software Development Life Cycle) - Mentioned in relation to configuration management tools bypassing secure processes and the importance of planning.

- Waterfall methodology - Mentioned for its thorough planning phase, though not advocating for a return to its full structure.

- Agile methodology - Mentioned in the context of smaller, nimble teams and potential conflicts or duplicated efforts.

- Continuous Deployment/Delivery/Release - Mentioned in the context of business pressure for faster release cadences.

- Home-brewed tooling - Discussed as a shortcut that can become problematic at scale.

- Configuration Management - Mentioned as an area where cobbled-together utilities can cause incidents.

- Observability - Discussed as a key area for standardization and automation.

- Feature Flags - Mentioned both as a form of tech debt and as a way to manage releases.

- Blue/Green Deployments - Mentioned as a release strategy.

- Canary Rollouts - Mentioned as a release strategy.

- PII (Personally Identifiable Information) - Mentioned in the context of risky changes to data schemas.

- MTTR (Mean Time To Recovery) - Mentioned as a business metric for uptime.