AI Integration Challenges: Code Review, Terminal Evolution, and Network Security

This episode of Python Bytes explores the evolving landscape of software development, particularly where AI, terminal interfaces, and open-source project management meet. The core thesis is that while AI offers powerful new tools, integrating it demands a thoughtful approach, especially within established open-source communities. The conversation surfaces hidden costs in how AI-generated code affects review processes, and shows how new tools could reshape terminal-based development. Developers, project maintainers, and anyone applying AI in software engineering can use these friction points and opportunities to navigate the changes more deliberately than those who react only to the immediate benefits.

The Hidden Costs of "Smart" Code and Terminal Evolution

The rapid advancement of AI tools is a double-edged sword for open-source projects. The promise of accelerated development is alluring, but integrating AI-generated code into established workflows introduces complex second-order effects that can strain community resources and dilute the value of human contribution. The episode highlights how projects like FastAPI are addressing this proactively with clear contribution guidelines, a reminder that developers should consider the downstream impact of their contributions, especially AI-assisted ones.

Michael Kennedy introduces GreyNoise's IP Check tool, a simple utility with broad implications for an increasingly interconnected and potentially compromised internet. In a world saturated with IoT devices and user-installed software, monitoring your network's external behavior is no longer a niche cybersecurity concern but a basic part of digital hygiene. The tool builds on GreyNoise's view of the internet's "background radiation", the constant barrage of scans and probes, and flags unusual outgoing traffic coming from your network. This isn't just about protecting your own devices; it's about making sure your network isn't inadvertently participating in malicious activity, a subtle but critical consequence of modern connectivity.

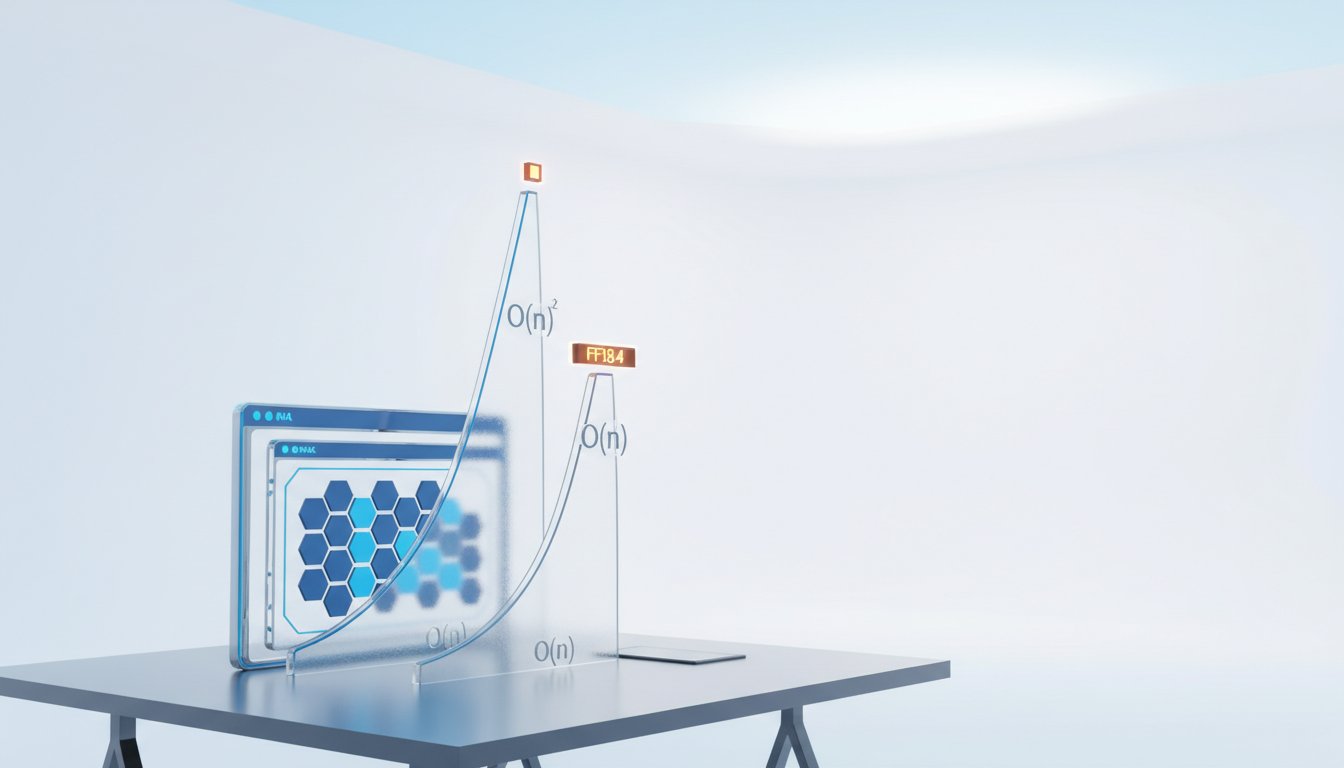
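
The episode does not describe how GreyNoise decides that an address is scanning, but the kind of behavior that would put a home network in its data can be illustrated. The sketch below is a toy heuristic, not GreyNoise's method: it assumes a hypothetical, simplified log of outbound connections (the `Flow` record) and flags any internal host that contacts many distinct external addresses on the same destination port, the fan-out pattern typical of a compromised device scanning the internet. The threshold of 50 is an arbitrary illustrative default.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class Flow(NamedTuple):
    """One outbound connection (hypothetical, simplified log format)."""
    src: str       # internal host address
    dst: str       # external destination address
    dst_port: int  # destination port


def flag_scan_like_hosts(flows: Iterable[Flow], threshold: int = 50) -> dict[str, list[int]]:
    """Map each internal host that reached at least `threshold` distinct
    external addresses on one destination port to the offending ports."""
    # (internal host, destination port) -> set of distinct destinations
    fan_out: dict[tuple[str, int], set[str]] = defaultdict(set)
    for flow in flows:
        fan_out[(flow.src, flow.dst_port)].add(flow.dst)

    flagged: dict[str, list[int]] = defaultdict(list)
    for (src, port), destinations in fan_out.items():
        if len(destinations) >= threshold:
            flagged[src].append(port)
    return {src: sorted(ports) for src, ports in flagged.items()}


if __name__ == "__main__":
    # A camera probing port 23 on 60 addresses looks like a scanner;
    # a laptop making repeated HTTPS requests to one server does not.
    flows = [Flow("192.168.1.20", f"203.0.113.{i}", 23) for i in range(60)]
    flows += [Flow("192.168.1.5", "198.51.100.7", 443)] * 10
    print(flag_scan_like_hosts(flows))  # {'192.168.1.20': [23]}
```

In practice the fan-out would be counted over a time window and the threshold tuned to the network; the point is that scanning shows up as breadth of destinations rather than volume of traffic.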

Brian Okken then pivots to tprof, a targeting profiler that addresses a common pain point in performance optimization. Traditional profilers offer a broad and often overwhelming view of an application's execution. tprof lets developers focus on specific functions, cutting through the noise of setup and boilerplate to pinpoint actual bottlenecks. This matters because, as Okken notes, much of an application's perceived slowness comes from initial setup or module loading: work that happens once and can obscure the performance of the core logic. The ability to isolate and compare specific functions, especially with tprof's delta time reporting, is a significant advantage in iterative optimization, showing developers the real impact of their changes without the distraction of unrelated overhead.

"The scale problem is theoretical. The debugging hell is immediate."

This sentiment, echoed in the discussion around AI contributions to projects like FastAPI, captures the essence of consequence mapping. While AI might promise theoretical scalability or rapid code generation, the immediate reality for maintainers is the increased burden of reviewing and validating that code. The FastAPI team's contribution guidelines, specifically the "Human Effort Denial of Service" section, illustrate this dynamic. They recognize that while AI can generate code quickly, the human effort required to review it can be substantial. Submitting AI-generated code that doesn't demonstrably save the reviewer time, or worse, requires extensive debugging, creates a net negative impact. This proactive stance is a strategic move, aiming to preserve the project's maintainability and the sanity of its core contributors by acknowledging that the "obvious" solution (using AI for speed) has hidden costs.

The conversation then shifts to toad, Will McGugan's new AI-powered terminal UI. This project tackles the challenge of making AI interactions within the terminal more intuitive and powerful. Traditional terminal interfaces, while efficient for many tasks, can be cumbersome for complex AI prompts that involve file context, code snippets, or rich markdown. toad aims to bridge this gap by providing a more sophisticated user experience, including better prompt input, fuzzy file searching, and even embedded terminal support for TUI applications. This represents a significant step in evolving the command-line interface itself, making powerful AI tools more accessible and integrated into developers' existing workflows. The implication is that as AI becomes more pervasive, the tools we use to interact with it will need to adapt, moving beyond simple text prompts to richer, more context-aware interfaces.
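
The episode does not detail how toad implements fuzzy file search, so the following is a generic sketch of how fuzzy path matching often works, not toad's code. `fuzzy_score` checks that the query's characters appear in the path in order (greedily, case-insensitively) and rewards adjacent matches; `fuzzy_rank` sorts matching paths by score, preferring shorter paths on ties. Both names and the file list are hypothetical.

```python
from typing import Iterable, Optional


def fuzzy_score(query: str, path: str) -> Optional[int]:
    """Return a match score, or None if the query's characters do not all
    appear in `path` in order. Matching is greedy and case-insensitive."""
    target = path.lower()
    score = 0
    pos = 0    # index in target where the next search starts
    prev = -2  # index of the previously matched character
    for ch in query.lower():
        idx = target.find(ch, pos)
        if idx == -1:
            return None
        score += 1
        if idx == prev + 1:
            score += 2  # bonus for adjacent matches, e.g. "main" inside "main.py"
        prev = idx
        pos = idx + 1
    return score


def fuzzy_rank(query: str, paths: Iterable[str]) -> list[str]:
    """Paths that match `query`, best score first, shorter paths on ties."""
    scored = []
    for path in paths:
        score = fuzzy_score(query, path)
        if score is not None:
            scored.append((-score, len(path), path))
    return [path for _, _, path in sorted(scored)]


if __name__ == "__main__":
    # Hypothetical project files.
    paths = ["src/app/main.py", "src/models/user.py", "tests/test_main.py", "README.md"]
    print(fuzzy_rank("main", paths))  # ['src/app/main.py', 'tests/test_main.py']
```

Greedy matching can miss a higher-scoring alignment later in the path; production matchers typically use dynamic programming to find the best alignment instead.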

"If the human effort put in a PR, e.g. writing LLM prompts, is less than the effort we would need to put to review it, please don't submit the PR."

This quote from the FastAPI contribution guidelines starkly illustrates the second-order consequences of AI integration. It frames the decision to submit AI-assisted code not just by the time saved in writing, but by the net time saved across the entire development lifecycle, including review. Projects that fail to consider this balance risk being overwhelmed by low-quality, AI-generated contributions, effectively creating a bottleneck where human review capacity is outstripped by the volume of incoming code. This highlights a critical systemic issue: optimizing for individual contribution speed without considering the collective review overhead can lead to project stagnation.
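
The bottleneck described above is plain queue arithmetic: whenever incoming PRs exceed what reviewers can process, the backlog grows every week without bound. The sketch below uses hypothetical numbers (a team able to review 20 PRs a week) to contrast arrivals just under capacity with arrivals well over it; `review_backlog` is an illustrative helper, not part of any tool.

```python
def review_backlog(weeks: int, prs_per_week: float, reviews_per_week: float) -> list[float]:
    """Open-PR backlog at the end of each week: it grows by new arrivals,
    shrinks by what reviewers can process, and never drops below zero."""
    backlog = 0.0
    history = []
    for _ in range(weeks):
        backlog = max(0.0, backlog + prs_per_week - reviews_per_week)
        history.append(backlog)
    return history


if __name__ == "__main__":
    print(review_backlog(4, prs_per_week=18, reviews_per_week=20))  # [0.0, 0.0, 0.0, 0.0]
    print(review_backlog(4, prs_per_week=30, reviews_per_week=20))  # [10.0, 20.0, 30.0, 40.0]
```

Reducing the review cost per PR, which is what the FastAPI rule asks contributors to respect, is equivalent to raising `reviews_per_week` in this model.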

The discussion of uv bytecode compilation further underscores the theme of delayed payoffs and hidden efficiencies. Michael Kennedy's adoption of the --compile-bytecode flag for uv installs, particularly in Docker deployments, is a prime example. Without it, Python compiles each module to bytecode the first time it is imported, so every freshly started container pays that cost again. The flag moves that work into the image build: the build gets slightly slower, but application startup gets significantly faster. For services that are frequently restarted or scaled, this seemingly minor optimization translates into tangible improvements in availability and responsiveness. It's a classic case where a small immediate cost (a slightly longer build) yields a lasting advantage (faster restarts), in contrast to approaches that optimize only for build speed and pay for it with slower launches and longer downtime during restarts.
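
What --compile-bytecode buys can be seen with the standard library's `compileall` module, which writes `.pyc` files into `__pycache__` ahead of time. This is a conceptual sketch, not a claim about how uv implements the flag internally, and `precompile` is a hypothetical helper. In an actual Docker build the uv route is `uv sync --compile-bytecode` or `uv pip install --compile-bytecode`, or setting `UV_COMPILE_BYTECODE=1` in the image.

```python
import compileall
import importlib.util
import pathlib
import tempfile


def precompile(site_dir: pathlib.Path) -> list[pathlib.Path]:
    """Compile every .py file under `site_dir` to .pyc ahead of time and
    return the cache paths, so the first import can skip compilation."""
    ok = compileall.compile_dir(str(site_dir), quiet=1)
    if not ok:
        raise RuntimeError(f"bytecode compilation failed under {site_dir}")
    # cache_from_source gives the __pycache__ path the import system will look for.
    return [
        pathlib.Path(importlib.util.cache_from_source(str(src)))
        for src in sorted(site_dir.rglob("*.py"))
    ]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        pkg = pathlib.Path(tmp) / "demo_pkg"
        pkg.mkdir()
        (pkg / "__init__.py").write_text("GREETING = 'hello'\n")
        for pyc in precompile(pkg):
            print(pyc.name, pyc.exists())
```

Each printed cache file should exist once `precompile` has run; its exact name includes the interpreter tag (for example `cpython-312`), so it varies by Python version.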

Key Action Items

- Implement Targeted Profiling: For performance-critical Python applications, adopt tools like tprof to isolate and measure the performance of specific functions, rather than relying on broad, noisy profiler output.

- Immediate Action: Identify one key function in a performance-sensitive area of your codebase and profile it using tprof.

- Review AI Contribution Policies: For open-source projects or internal development teams, establish clear guidelines for AI-generated code contributions, focusing on the net impact on review effort and maintainability.

- Immediate Action: Draft or review existing policies for AI-assisted code submissions, emphasizing the reviewer's effort.

- Explore Enhanced Terminal UIs: Investigate tools like toad to improve the experience of interacting with AI models and other command-line tools, especially for tasks involving code context or complex prompts.

- Immediate Action: Install and experiment with toad for a week, using it for AI interactions or complex terminal tasks.

- Optimize Application Startup: For containerized or frequently restarted applications, use uv's --compile-bytecode flag to pre-compile Python bytecode and reduce application launch times.

- Immediate Investment: Integrate --compile-bytecode into your CI/CD pipeline for all uv installations. This pays off in 1-3 months with faster deployments and reduced downtime.

- Monitor Network Activity: Utilize tools like GreyNoise's IP Check to periodically assess your network's external behavior and identify potential compromises or misconfigurations originating from connected devices.

- Immediate Action: Run a GreyNoise IP Check on your primary network connection.

- Document AI Integration Rationale: If integrating AI tools or features into your projects, clearly document the reasons, benefits, and any specific guidelines for their use, similar to FastAPI's approach.

- Longer-Term Investment: Develop a formal policy document for AI usage in development, outlining best practices and ethical considerations. This pays off in 6-12 months by preventing misuse and ensuring consistent quality.

- Prioritize Core Functionality Testing: When developing new features or widgets, ensure that the "happy path" or primary use case is robustly tested before or alongside extensive edge-case testing.

- Immediate Action: Review your current testing strategy to ensure core functionality is prioritized.
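
The last action item can be made concrete with a small `unittest` sketch. `parse_duration` is a hypothetical widget helper invented for illustration; what matters is the order in which the tests are written, with the common inputs pinned down first and malformed input covered afterwards.

```python
import unittest


def parse_duration(text: str) -> int:
    """Hypothetical widget helper: convert '1h30m', '45m' or '90s' to seconds."""
    units = {"h": 3600, "m": 60, "s": 1}
    total, number = 0, ""
    for ch in text.strip().lower():
        if ch.isdigit():
            number += ch
        elif ch in units and number:
            total += int(number) * units[ch]
            number = ""
        else:
            raise ValueError(f"invalid duration: {text!r}")
    # Reject empty input and trailing digits without a unit.
    if number or not text.strip():
        raise ValueError(f"invalid duration: {text!r}")
    return total


class TestParseDuration(unittest.TestCase):
    # Happy path first: the inputs users type most often.
    def test_common_inputs(self):
        self.assertEqual(parse_duration("1h30m"), 5400)
        self.assertEqual(parse_duration("45m"), 2700)
        self.assertEqual(parse_duration("90s"), 90)

    # Edge cases second, once the primary use case is locked in.
    def test_rejects_malformed_input(self):
        for bad in ["", "10", "h", "1x"]:
            with self.subTest(bad=bad):
                with self.assertRaises(ValueError):
                    parse_duration(bad)
```

Saved as a module, it runs with `python -m unittest <module>`; the edge-case list can grow over time without the core behavior ever going untested.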