Integrating Three Frameworks to Understand Human and Artificial Intelligence

This conversation with Tom Griffiths, author of The Laws of Thought, reframes artificial intelligence by looking past the hype to the mathematical and cognitive frameworks that shape both human and machine intelligence. The core thesis is that understanding intelligence requires three distinct but complementary mathematical lenses: rules and symbols, neural networks, and probability. The less obvious implication concerns current AI, particularly large language models. It excels in specific areas because of its training on vast data and its computational power. Yet it still struggles with systematic generalization and with learning from sparse data, areas where human cognition, shaped by different constraints, retains an advantage. This matters for anyone building, deploying, or simply trying to understand AI. It sets more realistic expectations, identifies where humans and AI can complement each other, and suggests that future AI development can learn from human inductive biases and reasoning.

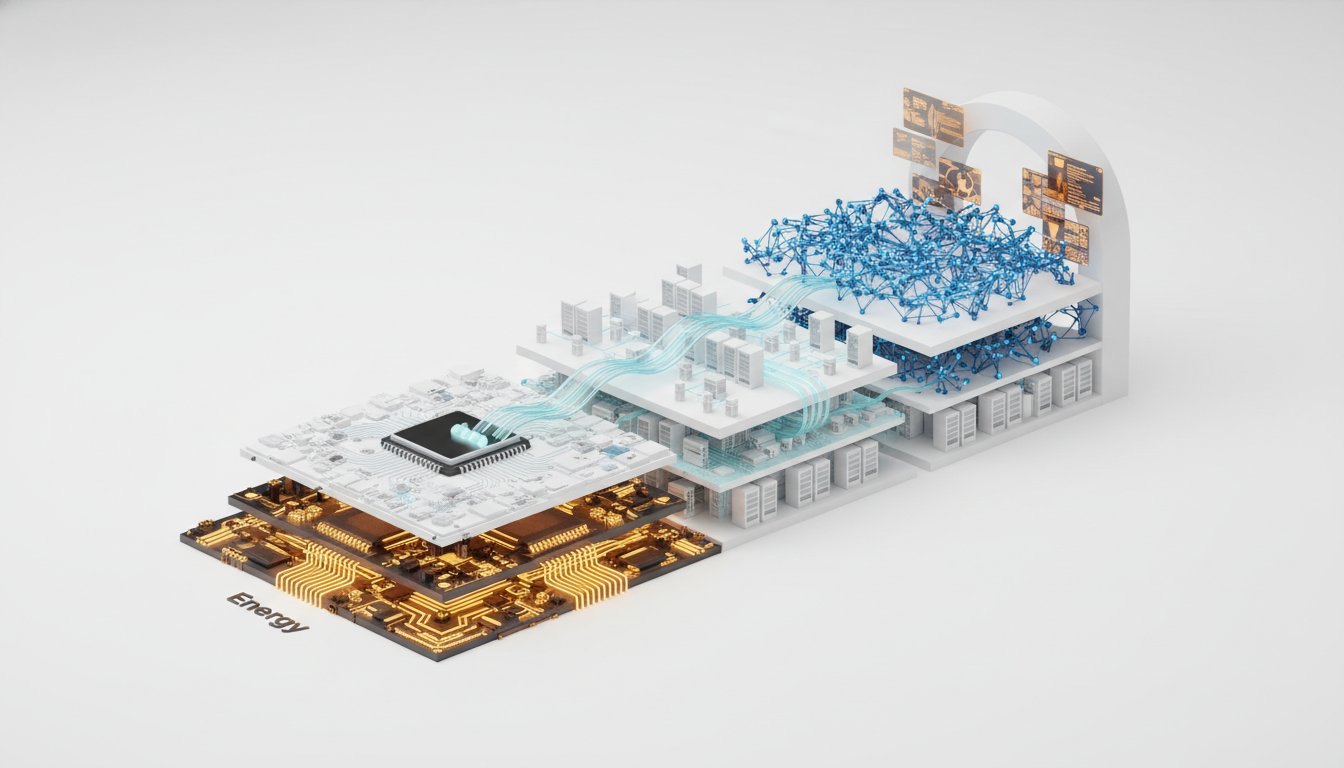

The Unseen Architecture: How Three Mathematical Frameworks Define Intelligence

The prevailing narrative around artificial intelligence often fixates on the latest model's impressive feats, such as generating text or solving complex problems. Beneath these capabilities lies a more fundamental structure: the mathematical frameworks that define intelligence itself. In his conversation with Sam Ransbotham, Tom Griffiths, author of The Laws of Thought, unpacks these frameworks (rules and symbols, neural networks, and probability). He shows how they not only underpin AI but also illuminate the nature of human cognition. The value of the conversation lies not in cataloging AI achievements but in explaining why they happen. These distinct mathematical languages offer complementary explanations of intelligence, and they help pinpoint where current AI falls short precisely because it does not share human cognitive constraints.

The Legacy of Logic: When Rules Aren't Enough

The story of understanding the mind, both human and artificial, begins with logic and symbols. This approach, pioneered by figures like George Boole, provided the first rigorous mathematical language for thought. It proved highly effective for describing deductive reasoning, problem-solving, and even the structure of language, as Noam Chomsky's work showed. This framework laid the groundwork for early computing and cognitive science. However, as Griffiths points out, the symbolic approach hit a wall when it came to explaining learning and concepts with fuzzy boundaries. How do we acquire language, or decide whether an olive is a fruit? Logic alone struggled to capture that fuzzy, changing reality.

"And so for a while it seemed like that was going pretty well, right? So it turned out that those systems of rules and symbols worked well for describing things like deductive reasoning, things like problem solving or planning, things like even the structure of languages through the work of people like Noam Chomsky. But after a while, they started to realize that maybe that wasn't going to be all that we need in order to understand how minds work."

-- Tom Griffiths

This limitation created a vacuum, leading to the exploration of new mathematical languages.
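A minimal sketch shows both the strength and the limit of the rules-and-symbols approach. It assumes a hypothetical toy knowledge base. The facts, the single rule, and the `forward_chain` helper are illustrations, not anything described in the conversation. Forward chaining applies rules repeatedly, a mechanized form of modus ponens, to derive new facts deductively.

```python
# Hypothetical toy knowledge base: deduction with explicit rules and symbols.
# Facts are (predicate, subject) pairs.
facts = {
    ("has_seeds", "olive"), ("develops_from_flower", "olive"),
    ("has_seeds", "apple"), ("develops_from_flower", "apple"),
    ("is_sweet", "apple"),
}

# A crisp, botanical-style definition of "fruit" written as one symbolic rule
# (an illustrative assumption): if all premises hold, the conclusion holds.
rules = [
    ({"has_seeds", "develops_from_flower"}, "is_fruit"),
]

def forward_chain(facts, rules):
    """Apply every rule repeatedly (modus ponens) until no new facts appear."""
    derived = set(facts)
    subjects = {s for _, s in facts}
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            for s in subjects:
                if all((p, s) in derived for p in premises) and (conclusion, s) not in derived:
                    derived.add((conclusion, s))
                    changed = True
    return derived

kb = forward_chain(facts, rules)
print(("is_fruit", "olive") in kb)
print(("is_fruit", "apple") in kb)
```

Both items come out as fruit with the same all-or-nothing certainty, although most people would call an apple a far more typical fruit than an olive. The deduction is sound, but a crisp symbol has no natural place for that graded, learned judgment.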

The Rise of Neural Networks: Learning from Data's Fog

The limitations of the rules-and-symbols approach paved the way for artificial neural networks. These systems, inspired by the structure of the brain, offered a new way to represent concepts not as discrete symbols, but as regions in an abstract space. This shift was critical for tackling the learning problem. Neural networks could learn from data, mapping relationships between these abstract representations without explicit symbolic rules. This proved instrumental in areas like image recognition and, more recently, large language models (LLMs). LLMs, Griffiths explains, are built upon this neural network architecture, allowing them to learn complex patterns from vast amounts of text.
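The idea of a concept as a learned region, rather than a rule, can be sketched with a single artificial neuron (a logistic unit) trained by gradient descent. This is a deliberate simplification, not an LLM or any specific architecture Griffiths discusses. The two features (sweetness, savoriness) and all the data points are made up for illustration.

```python
import math

# Hypothetical training data: [sweetness, savoriness] in [0, 1].
# Label 1 = people call it a fruit, 0 = they don't. All values are invented.
data = [
    ([0.9, 0.1], 1), ([0.8, 0.2], 1), ([0.7, 0.1], 1),
    ([0.1, 0.9], 0), ([0.2, 0.8], 0), ([0.1, 0.7], 0),
]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """Graded membership: a value in (0, 1) rather than a yes/no symbol."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def train(data, lr=0.5, epochs=2000):
    """Fit one logistic unit by stochastic gradient descent on cross-entropy loss."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, b, x) - y  # d(loss)/d(pre-activation) for sigmoid + cross-entropy
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

w, b = train(data)
for name, x in [("apple", [0.9, 0.1]), ("olive", [0.4, 0.5]), ("potato", [0.1, 0.9])]:
    print(name, round(predict(w, b, x), 3))
```

No rule defining "fruit" is ever written down. The boundary is learned from examples, and a borderline item such as the hypothetical olive point receives an intermediate score. Real networks also learn the features themselves; here they are hand-specified for brevity.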

However, relying on neural networks introduces its own challenges. While they excel at learning from massive datasets, they often struggle with systematic generalization and with learning from scarce data. These are precisely the areas where human cognition, shaped by different evolutionary pressures, shines. A child's ability to learn a language from relatively little input, or to grasp abstract concepts quickly, points to a gap that current neural network architectures don't fully bridge.

Probability's Power: Navigating Uncertainty and Inference

The third crucial framework is probability and statistics, the science of inductive inference. This approach provides the tools to understand how we make sense of incomplete information and draw conclusions from observed data. Griffiths emphasizes that probability theory is essential for understanding why modern AI methods, particularly LLMs, are so effective. The training process for LLMs, which involves predicting the next token in a sequence, is inherently probabilistic. This probabilistic modeling allows these systems to generate coherent and contextually relevant text.

Yet this probabilistic approach, powerful as it is, also exposes differences from human reasoning. For humans, understanding a principle allows it to be applied systematically to novel situations, and AI does not always generalize this way. Griffiths notes that LLMs can behave in ways that seem illogical to humans because their generalization is tied to the patterns in their training data, not necessarily to underlying abstract rules or causal understanding.

"And so that training is explicitly setting this up as a probabilistic model, right? What it's trying to do is to learn a probability distribution over sequences of tokens and what it's trying to do when you're interacting with it is make inferences about what sequences of tokens you might want it to generate based on the sequences of tokens that you've typed into it."

-- Tom Griffiths
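A drastically simplified sketch illustrates the two ideas in the quote: a conditional distribution over the next token, and the chain rule that multiplies those conditionals into the probability of a whole sequence. It assumes a made-up three-sentence corpus and a bigram model, in which each token is conditioned only on the token before it. An LLM, by contrast, conditions on the entire preceding context through a neural network.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus; "<s>" and "</s>" mark the start and end of a sentence.
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat ate the fish",
]

# Count how often each token follows each previous token (a bigram model).
counts = defaultdict(Counter)
for sentence in corpus:
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1

def next_token_distribution(prev):
    """P(next | prev), estimated by relative frequency; empty if prev was never seen."""
    following = counts.get(prev, Counter())
    total = sum(following.values())
    if total == 0:
        return {}
    return {tok: c / total for tok, c in following.items()}

def sequence_probability(sentence):
    """Chain rule under the bigram assumption: P(w1..wn) = product of P(w_i | w_{i-1})."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    p = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        p *= next_token_distribution(prev).get(nxt, 0.0)
    return p

print(next_token_distribution("the"))
print(sequence_probability("the cat sat on the rug"))
print(sequence_probability("the fish sat on the cat"))
```

"the cat sat on the rug" never appears in the corpus, yet it receives probability 1/36 because every transition in it was observed somewhere. "the fish sat on the cat" receives zero because "fish" was never followed by "sat". The model generalizes exactly as far as the patterns in its training data reach, a crude analogue of the limitation Griffiths describes.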

Complementarity and the Human Edge: Where Constraints Breed Intelligence

The real breakthrough, Griffiths suggests, is not in choosing one framework over another, but in understanding their complementarity. Drawing on David Marr's levels of analysis (computational, algorithmic, and implementation), he posits that logic and probability operate at the abstract computational level, defining ideal problem-solving, while neural networks provide a mechanism for implementing algorithms that approximate these solutions. This integration allows for a more holistic understanding of intelligence.
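Marr's distinction between the computational and algorithmic levels can be sketched with a toy inference problem. It assumes a hypothetical task of guessing a hidden number concept from a few examples, with four made-up hypotheses and a uniform prior; this is our illustration, not an example from the conversation. The exact Bayesian posterior plays the role of the computational-level ideal. Likelihood weighting, a simple Monte Carlo method, stands in for an algorithm that approximates it. A neural network would sit one level lower still, as one possible implementation, and is not modeled here.

```python
import random

# Hypothetical hypothesis space for a hidden concept over the numbers 1..100.
HYPOTHESES = {
    "even numbers": set(range(2, 101, 2)),
    "powers of two": {2, 4, 8, 16, 32, 64},
    "multiples of ten": set(range(10, 101, 10)),
    "any number": set(range(1, 101)),
}
PRIOR = {h: 1 / len(HYPOTHESES) for h in HYPOTHESES}  # uniform prior (an assumption)

def likelihood(h, examples):
    """Size principle: each example is assumed drawn uniformly from the concept's members."""
    members = HYPOTHESES[h]
    if not all(x in members for x in examples):
        return 0.0
    return (1 / len(members)) ** len(examples)

def exact_posterior(examples):
    """Computational level: P(h | data) is proportional to P(data | h) * P(h)."""
    scores = {h: likelihood(h, examples) * PRIOR[h] for h in HYPOTHESES}
    total = sum(scores.values())
    return {h: s / total for h, s in scores.items()}

def sampled_posterior(examples, n_samples=1000, seed=0):
    """Algorithmic level: approximate the same posterior by likelihood weighting,
    drawing hypotheses from the prior and weighting each draw by its likelihood."""
    rng = random.Random(seed)
    names = list(HYPOTHESES)
    draws = rng.choices(names, weights=[PRIOR[h] for h in names], k=n_samples)
    weights = {h: 0.0 for h in names}
    for h in draws:
        weights[h] += likelihood(h, examples)
    total = sum(weights.values())
    return {h: w / total for h, w in weights.items()}

examples = [2, 8, 16]
print(exact_posterior(examples))
print(sampled_posterior(examples))
```

Three examples are enough to make "powers of two" dominate. A small hypothesis that fits the data explains it far better than a broad one, which is one way a probabilistic account captures learning from sparse data. A sampling algorithm only evaluates the hypotheses it draws, which is why such approximations matter when the hypothesis space is too large to enumerate.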

Crucially, human intelligence is shaped by constraints that AI systems do not share: limited lifespans, finite cognitive resources, and inefficient communication. Far from being mere limitations, these constraints have driven the evolution of human cognitive abilities like judgment, curation, and metacognition, the ability to think about our own thinking. This is where humans retain a distinct advantage. AI can process vast amounts of data and compute at incredible speeds, but it lacks the inherent inductive biases and the deep understanding of context and consequence that humans possess.

"And so that set of constraints, I would say that's what makes human intelligence what it is, right? It's sort of we've evolved minds in response to those constraints. And if you look at what's going on for AI systems, really none of those things are true."

-- Tom Griffiths

This difference implies that we should not expect AI to simply become "better humans." Instead, the future lies in complementarity, where human and artificial intelligence work together, leveraging their respective strengths. The "meta-cognitive labor" of guiding AI, curating its outputs, and setting strategic direction becomes increasingly valuable.

Key Action Items:

- Reframe AI Expectations: Understand that AI intelligence is fundamentally different from human intelligence, shaped by data and computational constraints rather than life experience and evolutionary pressures. Adjust your expectations for generalization and problem-solving accordingly. (Immediate)

- Embrace Complementarity: Identify tasks where AI excels (e.g., data processing, pattern recognition) and where human strengths are paramount (e.g., judgment, strategic thinking, ethical reasoning). Design workflows that leverage both. (Immediate)

- Invest in Metacognitive Skills: Focus on developing your ability to guide AI systems, formulate effective prompts, critically evaluate AI outputs, and understand the underlying principles of AI operation. This "meta-cognitive labor" will be a key differentiator. (Ongoing)

- Learn the Three Frameworks: Gain a foundational understanding of rules and symbols, neural networks, and probability to better comprehend AI capabilities and limitations. This knowledge will inform more effective AI deployment and development. (Over the next quarter)

- Prioritize Systematic Generalization: When developing or evaluating AI, look for systems that demonstrate systematic generalization beyond their training data, a key area where human intelligence currently outperforms AI. (This pays off in 12-18 months)

- Develop Nuanced Problem-Solving: Recognize that AI's proficiency in specific tasks (like math problems) does not automatically translate to broad intelligence. Avoid making sweeping assumptions about AI capabilities based on narrow successes. (Immediate)

- Foster Human-AI Collaboration: Explore how AI can augment human decision-making rather than replace it. Focus on creating systems that work in harmony with human cognitive strengths, leading to more robust and reliable outcomes. (This pays off in 6-12 months)