On-Policy Learning, End-to-End Reasoning, and Data Efficiency Drive AI Progress

The future of AI hinges not on incremental improvements but on a fundamental shift in how models learn and adapt: moving beyond imitation and raw scaling toward the messy, iterative process of genuine understanding. This conversation with Yi Tay, a key figure in Google DeepMind's reasoning and AGI efforts, surfaces three less obvious implications of that shift: the advantage of "on-policy" learning, the strategic value of delayed gratification in AI development, and the need to look past superficial benchmarks toward problems where cause and effect are deeply intertwined. The common thread is that real progress comes from learning from one's own mistakes, not from copying others. For anyone building or deploying frontier AI, the conversation makes the case for the harder but ultimately more rewarding path of deep learning and reasoning.

The Unseen Costs of "Off-Policy" AI and the Power of "On-Policy" Learning

Much of AI development leans on imitation, a form of "off-policy" learning in which models learn by observing and replicating successful trajectories produced by someone else. In large language models (LLMs), this corresponds to supervised fine-tuning (SFT), where a model is trained to reproduce the expert outputs in an existing dataset. This is efficient for initial learning, but it has a blind spot: the model never experiences the consequences of its own mistakes, so it can fail to innovate or adapt when it meets situations the data did not cover. Yi Tay contrasts this with "on-policy" learning, in which models learn from their own generated outputs and experiences, much as humans learn by making mistakes.

"Humans learn by making mistakes, not by copying."

This distinction matters. Off-policy learning, like following instructions without understanding why they work, can produce a superficial grasp of a task. On-policy learning fosters a deeper, more robust intelligence by making the model grapple with the consequences of its own actions and refine its approach through its own feedback loops. This is not merely a technical detail; it is a philosophical divergence in how we approach AI development. The same holds for people: imitation has its place, but mastery comes from experimentation and self-correction. Tay suggests that humans, like on-policy models, refine their "world model" through trial and error and direct interaction. This approach may be slower in the short term, but it builds a more resilient and adaptable intelligence, capable of navigating complex, unpredictable environments. The challenge lies in knowing when to transition from imitation to genuine exploration, a balance Tay considers essential for both human and artificial learning.
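To make the distinction concrete, here is a minimal toy sketch, assuming a three-action "policy" stored as a dictionary of logits. It is not how Gemini or any DeepMind system is trained; the action names, the demonstrator that always picks "a", and the reward that only pays for "c" are all hypothetical. The off-policy update (cross-entropy on a demonstrator's choices, as in SFT) can only copy what it is shown, while the on-policy update (a REINFORCE-style policy gradient on the model's own samples) can discover an action the demonstrator never took.

```python
import math
import random

ACTIONS = ["a", "b", "c"]


def softmax(logits):
    """Convert a dict of logits into a dict of probabilities."""
    peak = max(logits.values())
    exps = {k: math.exp(v - peak) for k, v in logits.items()}
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}


def sft_step(logits, expert_action, lr=0.5):
    """Off-policy (imitation) update: the training signal comes from a
    demonstrator, never from the model's own behaviour."""
    probs = softmax(logits)
    for k in logits:
        # d/d(logit_k) of log p(expert_action) = 1[k == expert_action] - p_k
        target = 1.0 if k == expert_action else 0.0
        logits[k] += lr * (target - probs[k])


def on_policy_step(logits, reward_fn, rng, lr=0.5):
    """On-policy (REINFORCE-style) update: sample an action from the current
    policy, score it, and move probability toward rewarded actions."""
    probs = softmax(logits)
    action = rng.choices(list(probs), weights=list(probs.values()))[0]
    reward = reward_fn(action)
    for k in logits:
        # reward * d/d(logit_k) log p(action); a zero reward means no update
        indicator = 1.0 if k == action else 0.0
        logits[k] += lr * reward * (indicator - probs[k])


def only_c_succeeds(action):
    """Hypothetical task: only action "c" earns a reward."""
    return 1.0 if action == "c" else 0.0


def run_demo(steps=300, seed=0):
    """Train an imitator and an on-policy learner side by side."""
    rng = random.Random(seed)
    imitator = {a: 0.0 for a in ACTIONS}
    explorer = {a: 0.0 for a in ACTIONS}
    for _ in range(steps):
        sft_step(imitator, expert_action="a")  # demonstrator always picks "a"
        on_policy_step(explorer, only_c_succeeds, rng)
    return softmax(imitator), softmax(explorer)


imitation_probs, on_policy_probs = run_demo()
print("imitation:", imitation_probs)
print("on-policy:", on_policy_probs)
```

The imitator concentrates on "a" because that is all it was shown; the on-policy learner drifts toward "c" because it is the only action whose consequences paid off. The on-policy learner only improves when it happens to sample "c", which is one reason exploration matters and why, in LLM practice, reinforcement learning is usually applied on top of an SFT-initialized model.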

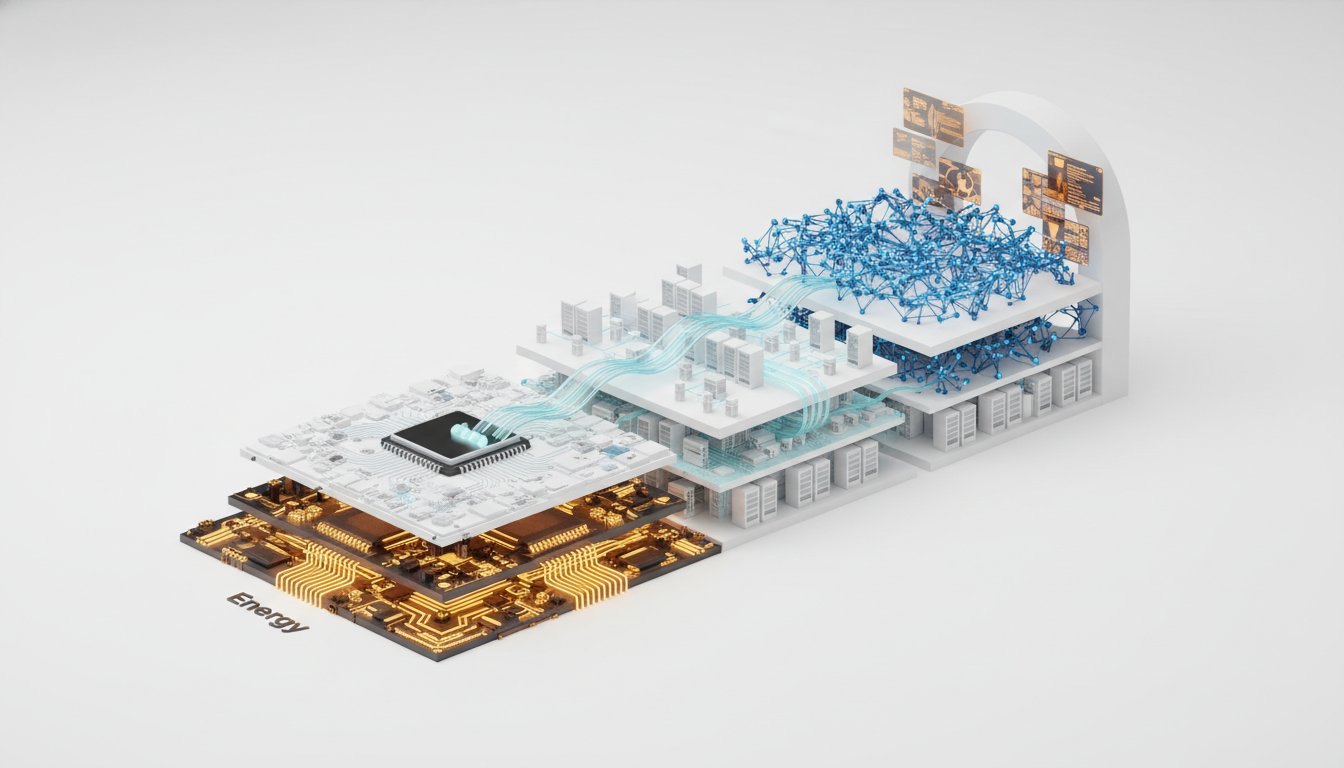

The IMO Gold Medal Gambit: A Bold Bet on End-to-End Reasoning

The International Mathematical Olympiad (IMO) gold medal effort is a testament to the power of a bold, non-consensus bet on end-to-end reasoning. For years, the approach relied on specialized systems built around formal and symbolic reasoning, such as AlphaProof. The team, led by figures including Thang Luong and Quoc Le, then made a significant pivot: it abandoned these specialized tools and bet entirely on Gemini, an end-to-end LLM, to solve IMO problems. This was a radical departure, driven by a simple question: if these models cannot achieve top-tier reasoning on a task as demanding as the IMO, can they truly reach Artificial General Intelligence (AGI)?

The training itself was a compressed, high-stakes endeavor, with the core model checkpoint prepared in roughly a week. The competition was not a static benchmark but a live event in Australia, with problems supplied by professors in real time. The tension was palpable because gold is awarded on a percentile basis: the team would not know whether it had reached gold until the human participants' scores were tallied. This live, unpredictable setting highlights a critical capability for advanced AI: performing in dynamic, unscripted scenarios.
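The arithmetic behind that wait can be sketched as follows. Under IMO rules, medals go to at most half of the contestants in a roughly 1:2:3 gold:silver:bronze ratio, so gold goes to about the top twelfth of the field. The function below is a simplification under that assumption: it ranks hypothetical human scores and treats the score at the one-twelfth mark as the gold cutoff, whereas the official cutoffs are decided once all human papers have been graded.

```python
import math


def gold_cutoff(human_scores, gold_fraction=1 / 12):
    """Lowest score that still falls within the top `gold_fraction` of the field.

    Simplified assumption: real IMO cutoffs are chosen after grading so that
    medal counts come as close as possible to the 1:2:3 ratio, and ties can
    shift them.
    """
    if not human_scores:
        raise ValueError("need at least one human score")
    ranked = sorted(human_scores, reverse=True)
    n_gold = max(1, math.floor(len(ranked) * gold_fraction))
    return ranked[n_gold - 1]


def is_gold(model_score, human_scores):
    """A model 'earns' gold if it matches or beats the cutoff set by humans."""
    return model_score >= gold_cutoff(human_scores)


# Hypothetical field of 24 contestants scoring 1..24 (IMO papers are out of 42).
field = list(range(1, 25))
print(gold_cutoff(field))  # top 2 of 24 -> cutoff is 23
print(is_gold(23, field), is_gold(22, field))
```

The sketch makes the same point as the text: the model's score alone does not determine its medal, because the same score can be gold or not depending on how the human contestants performed that year.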

"If one model can’t do it, can we get to AGI?"

The decision to discard AlphaProof and rely solely on Gemini represented a profound trust in the emergent reasoning capabilities of LLMs. It signaled a shift from building AI through modular, specialized components to developing a single, powerful model capable of tackling diverse, complex tasks. This approach, while requiring immense faith in the underlying architecture and training methodology, offers the promise of a more unified and generalizable form of intelligence. The success of this gambit, achieving IMO Gold, validates the strategy of pushing the boundaries of end-to-end LLM reasoning, suggesting that the path to AGI might indeed lie in refining a single, highly capable model rather than assembling a collection of specialized tools.

The Data Efficiency Frontier: Why Humans Still Hold the Key

A persistent question in AI research is the stark difference in data efficiency between humans and current models. Humans can learn from orders of magnitude less data, a discrepancy that Tay frames as a fundamental puzzle: "Is it the architecture, the learning algorithm, backprop, off-policyness, or something else?" This points to a critical area where current AI paradigms may be fundamentally misaligned with natural learning processes.
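One common way to put a number on data efficiency, offered here as an illustration rather than as Tay's own metric, is to ask how many training examples each learner needs before it reaches a target score. The sketch below assumes learning curves given as (num_examples, score) pairs; the curves in the usage lines are made up purely to exercise the code.

```python
from typing import Optional, Sequence, Tuple

Curve = Sequence[Tuple[int, float]]  # (number of training examples, score)


def examples_to_threshold(curve: Curve, target: float) -> Optional[int]:
    """Smallest training-set size at which the learner reaches `target`,
    or None if it never does within the measured range."""
    for n, score in sorted(curve):
        if score >= target:
            return n
    return None


def data_efficiency_gap(efficient: Curve, other: Curve, target: float) -> Optional[float]:
    """How many times more examples `other` needs than `efficient` to hit `target`."""
    a = examples_to_threshold(efficient, target)
    b = examples_to_threshold(other, target)
    if a is None or b is None or a == 0:
        return None  # undefined if either never reaches the target (or a is zero-shot)
    return b / a


# Made-up curves, for illustration only.
learner_a = [(10, 0.55), (100, 0.90), (1_000, 0.95)]
learner_b = [(1_000, 0.40), (10_000, 0.70), (100_000, 0.91)]
print(data_efficiency_gap(learner_a, learner_b, target=0.9))  # -> 1000.0
```

The answer depends on the target: a learner can be far more data-efficient at one threshold and no better at another, so a ratio like this is only meaningful when reported together with the threshold it was measured at.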

The discussion around "world models" offers a potential avenue for improvement. Tay outlines three schools of thought:

1. Genie/Spatial Intelligence: Video-based world models that capture spatial reasoning.

2. JEPA + Code World Models: Modeling internal execution states, particularly in code.

3. Resolution of Possible Worlds: Curve-fitting to find the world model that best explains the data.
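The third school is the easiest to turn into a toy. Under one loose reading (an assumption made here, not a description of any specific research system), each "possible world" is a candidate model that predicts observations, and learning means keeping the candidate that best explains the data. The sketch below scores hypothetical candidate functions by squared error against a handful of observations:

```python
from typing import Callable, Dict, List, Tuple

World = Callable[[float], float]
Observations = List[Tuple[float, float]]


def squared_error(world: World, data: Observations) -> float:
    """Total squared gap between what a candidate world predicts and what was observed."""
    return sum((y - world(x)) ** 2 for x, y in data)


def best_world(candidates: Dict[str, World], data: Observations) -> str:
    """Name of the candidate world that best fits the observations."""
    return min(candidates, key=lambda name: squared_error(candidates[name], data))


# Hypothetical candidate worlds, and observations generated by y = x^2.
candidates = {
    "constant": lambda x: 3.0,
    "linear": lambda x: 2.0 * x,
    "quadratic": lambda x: x ** 2,
}
observations = [(0.0, 0.0), (1.0, 1.0), (2.0, 4.0), (3.0, 9.0)]
print(best_world(candidates, observations))  # -> quadratic
```

Pure best-fit selection always favors the most flexible candidate, so practical versions penalize model complexity or score candidates on held-out data.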

The implication is that by developing richer internal models of the world, AI could learn more efficiently and depend less on massive datasets. The challenge lies in identifying the true bottleneck: is it compute, memory, the learning algorithm itself, or the underlying architecture, such as the transformer? Tay suggests that while scaling compute and data has yielded impressive results, the real frontier for human-level data efficiency may lie in new learning paradigms that let models extract more meaning from each data point. This is where the "sweet lesson" of AI (that ideas, not just scale, drive progress) becomes paramount. Learning from fewer examples and generalizing from limited experience is not just an efficiency gain; it is a critical step toward more robust and adaptable AI.

AI Coding: From "Lazy" Assistance to Indispensable Partner

The utility of AI in coding has crossed a significant threshold, evolving from a convenience for the "lazy" to an indispensable partner for experienced engineers. Tay recounts his own journey, moving from using AI to automate tedious tasks like generating plots from spreadsheets to relying on it for complex debugging. The pivotal moment came when he began pasting bug reports directly into AI models like Gemini, often without even inspecting the fix, trusting the model to resolve issues in his ML training jobs.

"At the start, I was a bit like, I did check it, look at the thing. And then at some point, I'm like, 'Maybe the model knows better than me.'"

This marks a profound change: AI is no longer just executing instructions; it is actively participating in problem-solving, often outpacing the human user's immediate understanding or speed. The downstream implications are significant. While it empowers experienced engineers, it also raises questions about the future of junior roles: if AI can handle routine debugging and even complex problem-solving, how will new AI practitioners be trained and developed? Tay suggests that rather than replacing individuals, AI acts as a force multiplier, boosting everyone's capabilities and freeing human talent for higher-level strategic thinking and innovation. The key takeaway is that AI coding assistance has moved beyond simple task automation to become a genuine collaborator, capable of tackling problems that were once the sole domain of human expertise.
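Tay's habit of pasting a bug report and trusting the fix sits alongside the advice to keep oversight for critical validation. One lightweight way to reconcile the two, sketched here as a suggestion rather than as Tay's workflow, is to accept a model-proposed fix only if an existing test command still passes, and roll it back otherwise. `apply_fix`, `revert_fix`, and the test commands are hypothetical placeholders; how the fix is obtained from a model is left out.

```python
import subprocess
import sys
from typing import Callable, Sequence


def accept_if_tests_pass(
    apply_fix: Callable[[], object],
    revert_fix: Callable[[], object],
    test_cmd: Sequence[str],
) -> bool:
    """Apply a proposed fix, run the test command, and keep the fix only on success."""
    apply_fix()
    result = subprocess.run(list(test_cmd), capture_output=True, text=True)
    if result.returncode == 0:
        return True
    revert_fix()  # tests failed: undo the change and leave it for human review
    return False


# Hypothetical demo: a "codebase" represented by a list of applied patches.
applied: list = []
passing = [sys.executable, "-c", "raise SystemExit(0)"]
failing = [sys.executable, "-c", "raise SystemExit(1)"]

kept = accept_if_tests_pass(lambda: applied.append("fix-1"), applied.pop, passing)
dropped = accept_if_tests_pass(lambda: applied.append("fix-2"), applied.pop, failing)
print(kept, dropped, applied)  # -> True False ['fix-1']
```

A passing test suite is only as good as the tests behind it, so a guard like this complements human review rather than replacing it.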

Actionable Takeaways: Navigating the AI Frontier

- Embrace On-Policy Learning: Prioritize training methodologies that allow models to learn from their own generated outputs and experiences, rather than solely relying on imitation. This fosters deeper understanding and adaptability. (Immediate Action)

- Invest in End-to-End Reasoning: Focus on developing and leveraging single, powerful models capable of complex reasoning, rather than relying solely on specialized systems. The IMO result suggests this may be the path to AGI-level capabilities. (Long-term Investment)

- Prioritize Data Efficiency: Actively research and implement techniques that enable models to learn effectively from less data. This is crucial for closing the gap with human learning capabilities. (Ongoing Research & Development)

- Integrate AI Coding as a Collaborator: Treat AI coding tools not just as assistants but as partners in the development process. Trust their capabilities for debugging and problem-solving, but maintain oversight for critical validation. (Immediate Integration)

- Focus on "Difficult" Problems: Invest in tackling AI challenges where cause and effect are deeply intertwined or where immediate solutions have hidden downstream costs. This is where true competitive advantage lies. (Strategic Focus)

- Develop Robust World Models: Explore and refine world model approaches that allow AI to build more accurate and internally consistent representations of the world, leading to more efficient and effective learning. (Mid-term Investment)

- Validate Through Real-World Dynamics: Move beyond static benchmarks to evaluate AI performance in dynamic, unpredictable environments that mimic real-world complexity. (Strategic Validation)