AI Hardware Race Shifts Economics, Drives Usefulness and Industry Disruption

TL;DR

- The delay in Nvidia's Blackwell GPUs opened an 18-month gap in the ongoing AI infrastructure race; reasoning capabilities bridged that gap, preventing both a stall in AI progress and a market downturn.

- Google's temporary advantage in AI pre-training stems from its TPU v6/v7 chips, which are more advanced than the delayed Blackwell, allowing it to be the lowest-cost producer of tokens and strategically pressure competitors.

- The successful deployment of Nvidia's Blackwell GPUs, especially the GB300, will shift the cost dynamics, potentially ending Google's low-cost token production advantage and profoundly altering the economic landscape of AI.

- AI's ability to automate tasks with verifiable outcomes, like accounting or sales, is a critical driver of usefulness, enabling new applications such as personal assistants and revolutionizing customer support and sales functions.

- The SaaS industry's reluctance to accept the lower gross margins of AI products, in order to protect its existing high margins, is a strategic mistake that mirrors brick-and-mortar retailers' failure to embrace e-commerce and could lead to the obsolescence of incumbent SaaS companies.

- Data centers in space offer a superior, lower-cost solution for AI compute due to abundant solar energy and free cooling, presenting a fundamental advantage over terrestrial data centers and potentially reshaping infrastructure economics.

- The convergence of Tesla, SpaceX, and xAI creates synergistic advantages, with xAI's intelligence modules powering Tesla's Optimus, SpaceX providing space-based data centers, and all benefiting from shared customer bases for AI applications.

Deep Dive

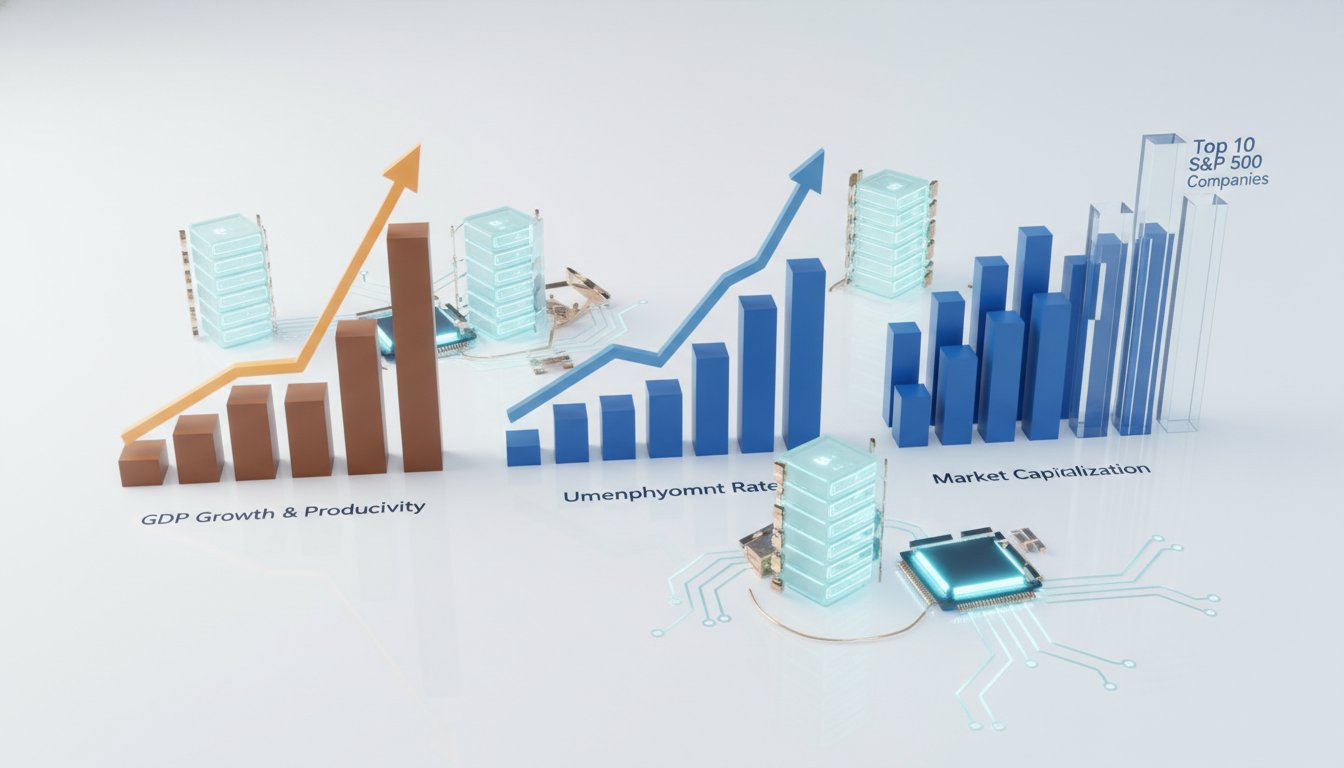

Gavin Baker argues that the economic landscape of AI is fundamentally shifting, driven by the escalating competition between major tech players and the rapid advancement of hardware. This dynamic is creating profound implications for AI development, market positioning, and the very economics of producing AI tokens, suggesting a future where cost efficiency will become a primary differentiator.

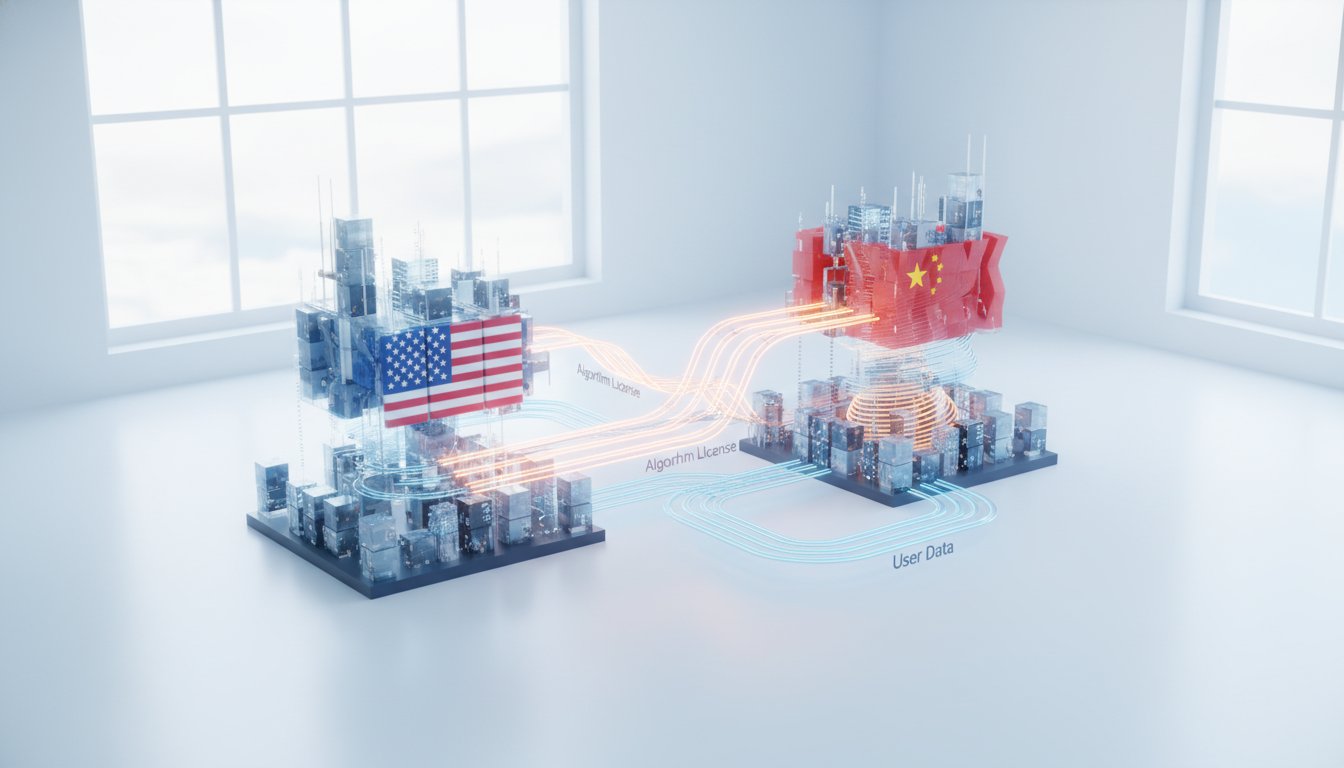

The core of this shift lies in the GPU versus TPU battle, primarily between Nvidia and Google. Nvidia's Blackwell GPUs were initially delayed by the extreme complexity of their cooling and power requirements, but they are poised to redefine AI training and inference. Google's TPUs have given it a temporary advantage in pre-training and made it the lowest-cost producer of tokens; Blackwell's architecture, especially in the GB300, promises to make cost-effective token production broadly available. This challenges Google's strategy of economically squeezing competitors and signals a potential rebalancing of power, as Blackwell-powered data centers are expected to become the new benchmark for efficient AI operations. The first successful deployment of Blackwell may come from Elon Musk's xAI, thanks to its rapid data center build-out capabilities. That deployment will let AI models process significantly more context, moving beyond mere intelligence to tangible usefulness: handling complex tasks like vacation planning and reshaping industries like customer support and media.

The implications of this hardware race extend to the broader AI ecosystem and investment landscape. The increasing cost and complexity of AI infrastructure, coupled with the potential for data centers in space to offer superior power and cooling, suggest that raw compute availability and efficiency will become paramount. This hardware-centric approach is also driving a resurgence in semiconductor venture capital, as new companies are required to innovate across the entire hardware stack to keep pace with the annual cadence of chip advancements. Furthermore, the SaaS industry faces a critical juncture, as its traditional high-margin model is incompatible with the low-margin, high-compute demands of AI. Companies that fail to adapt by embracing AI-native cost structures risk obsolescence, mirroring the mistakes of brick-and-mortar retailers in the face of e-commerce. Ultimately, the relentless pursuit of compute and efficiency, coupled with the "whatever AI needs, it gets" phenomenon, points towards a future where AI's economic returns will continue to be positive, driven by breakthroughs in usefulness and potentially, though less predictably, artificial superintelligence.

Action Items

- Audit AI model usage: For 3-5 core AI tools, track token costs and identify opportunities to optimize for lower-cost tiers or alternative models.

- Create AI adoption runbook: Define 5 key sections for evaluating and integrating new AI models, focusing on cost-effectiveness and performance benchmarks.

- Measure AI-driven productivity gains: For 3-5 internal processes, quantify time savings or output increases attributed to AI tools.

- Evaluate AI infrastructure costs: Analyze spending on AI compute and identify 2-3 areas where cost reduction strategies can be implemented.

- Track AI model performance drift: For 3-5 critical AI models, establish a monitoring system to detect and address performance degradation over time.
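
The first and last action items can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the tool names, token counts, per-million-token prices, and the 0.05 drift tolerance are all hypothetical placeholders, to be replaced with figures from your own provider invoices and evaluation runs.

```python
from dataclasses import dataclass


@dataclass
class UsageRecord:
    tool: str            # hypothetical internal tool name
    input_tokens: int
    output_tokens: int
    input_price: float   # assumed USD per 1M input tokens
    output_price: float  # assumed USD per 1M output tokens


def cost_usd(rec: UsageRecord) -> float:
    """Cost of one usage record, given per-million-token prices."""
    return (rec.input_tokens * rec.input_price
            + rec.output_tokens * rec.output_price) / 1_000_000


def audit(records: list[UsageRecord]) -> dict[str, float]:
    """Total spend per tool, ordered from most to least expensive."""
    totals: dict[str, float] = {}
    for rec in records:
        totals[rec.tool] = totals.get(rec.tool, 0.0) + cost_usd(rec)
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


def has_drifted(baseline: float, recent: list[float],
                tolerance: float = 0.05) -> bool:
    """True when the mean recent score is more than `tolerance` below baseline."""
    if not recent:
        return False
    return (baseline - sum(recent) / len(recent)) > tolerance


if __name__ == "__main__":
    # Placeholder data for illustration only.
    records = [
        UsageRecord("support-bot", 2_000_000, 500_000, 1.0, 4.0),
        UsageRecord("code-review", 1_000_000, 1_000_000, 3.0, 15.0),
        UsageRecord("support-bot", 1_000_000, 250_000, 1.0, 4.0),
    ]
    for tool, total in audit(records).items():
        print(f"{tool}: ${total:.2f}")
    print("drift detected:", has_drifted(0.90, [0.84, 0.82, 0.83]))
```

Sorting by spend surfaces the tools where moving to a lower-cost tier or an alternative model would matter most, and the drift check compares a rolling average of evaluation scores against a fixed baseline.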

Key Quotes

"I mean, I think the first thing is you have to use it yourself and I would just say I'm amazed at how many famous and august investors are reaching really definitive conclusions about AI well no based on the free tier. The free tier is like you're dealing with a 10-year-old, right? And you're making conclusions about the 10-year-old's capabilities as an adult. And you could just pay, and I do think actually you do need to pay for the highest tier, whether it's Gemini Ultra, SuperGrok, whatever it is, you have to pay the $20 per month tiers, whereas those are like a fully-fledged 30, 35-year-old."

Gavin Baker argues that superficial engagement with AI, limited to free tiers, leads to inaccurate conclusions about its capabilities. Baker emphasizes that to truly understand AI, one must use the highest-tier paid versions, which give a more complete picture of the technology's potential; judging AI by its free tier is like judging an adult's capabilities by observing a 10-year-old.

"The reason we've had all this progress, maybe we could show like the ARC-AGI slide where you had zero to eight over four years, zero to eight intelligence, and then you went from eight to 95 in three months when the first reasoning model came out from OpenAI. We have these two new scaling laws of post-training, which is just reinforcement learning with verified rewards. Verified is such an important concept in AI."

Gavin Baker highlights that recent AI progress is driven by two key post-training scaling laws: reinforcement learning with verified rewards and test-time compute. Baker explains that "verified" is a crucial concept in AI, implying that any task with a clear right or wrong outcome can be automated, a principle he attributes to Andrej Karpathy.

"I analogize it to imagine if to get a new iPhone, you had to change all the outlets in your house to 220 volt, put in a Tesla Powerwall, put in a generator, put in solar panels. That's the power. You know, put in a whole home humidification system and then reinforce the floor because the floor can't handle this. It was a huge product transition."

Gavin Baker uses this analogy to illustrate the complexity and scale of the transition from Nvidia's Hopper to Blackwell chips. Baker explains that the power and cooling requirements for Blackwell are so significantly increased that they necessitate substantial infrastructure upgrades, comparable to overhauling a home's electrical and environmental systems for a new device.

"And this is really important because what Google has been doing as the low-cost producer is they have been sucking the economic oxygen out of the AI ecosystem, which is an extremely rational strategy for them. And for anyone who is the low-cost producer, let's make life really hard for our competitors."

Gavin Baker points out that Google's strategy as a low-cost producer of AI tokens is a rational move to gain market advantage. Baker suggests that by offering lower prices, Google aims to make it difficult for competitors to secure funding and remain viable in the AI ecosystem.

"The reason that's important is that, you know, I forget the number, but I mean, there are thousands of parts in a Blackwell rack and there's thousands of parts in a TPU rack. And in a Blackwell rack, maybe Nvidia makes two or 300 of those parts. Same thing in an AMD rack. And they need all of those other parts to accelerate with them. So they couldn't go to this one-year cadence if the difficulty was not keeping up with them."

Gavin Baker explains the critical role of the broader semiconductor ecosystem in enabling rapid hardware advancements. Baker notes that companies like Nvidia and AMD rely on numerous third-party component manufacturers to keep pace with their aggressive product development cycles, underscoring the collaborative nature of the industry.

"And that is the fundamental reason that eventually every brick-and-mortar retailer was really slow to invest in e-commerce. And now here we are and, you know, Amazon has higher margins in their North American retail business than a lot of retailers that are mass market retailers. So margins can change."

Gavin Baker draws a parallel between brick-and-mortar retailers' reluctance to invest in e-commerce and the current hesitation of SaaS companies to embrace AI. Baker argues that just as retailers initially prioritized existing margin structures over the emerging e-commerce model, SaaS companies may be making a similar mistake by resisting the lower margins associated with AI.

Resources

External Resources

Books

- "Market Wizards" by Jack Schwager - Referenced as part of learning about investing.

- "One Up On Wall Street" by Peter Lynch - Referenced as foundational reading for investing.

- "The Intelligent Investor" by Benjamin Graham - Referenced as foundational reading for investing.

- "Why Stocks Go Up and Down" - Referenced as a book for learning accounting.

- "What Technology Wants" by Kevin Kelly - Referenced in the context of technology's growth and advancement.

Articles & Papers

- "The Economist" - Mentioned as a publication read for current events.

- "Newsweek" - Mentioned as a publication read for current events.

- "Time" - Mentioned as a publication read for current events.

- "US News & World Report" - Mentioned as a publication read for current events.

- "The Motley Fool" - Referenced for early discussions on return on invested capital.

- "Wall Street Journal" - Referenced for reading news about stocks.

People

- Gavin Baker - Guest on the podcast, managing partner and CIO of Atreides Management.

- Patrick O'Shaughnessy - Host of the podcast "Invest Like the Best."

- Andrej Karpathy - Referenced for his writings on AI.

- Jensen Huang - CEO of Nvidia, referenced for statements on data center construction speed.

- Elon Musk - Referenced for statements on data center construction speed and the convergence of Tesla, SpaceX, and xAI.

- Michael Steinhardt - Quoted on the balance between conviction and humility in investing.

- Peter Lynch - Author of "One Up On Wall Street," referenced for investing books.

- Warren Buffett - Referenced for his letters to shareholders and investing books.

- Jack Schwager - Author of "Market Wizards," referenced for investing books.

- Benjamin Graham - Author of "The Intelligent Investor," referenced for investing books.

- Kevin Kelly - Author of "What Technology Wants," referenced in the context of technology's growth.

- Erik Brynjolfsson - Quoted on foundation models as rapidly depreciating assets.

- Jeff Bezos - Quoted on the concept of a flywheel.

- Mark Zuckerberg - Referenced for a prediction about AI performance in 2025.

- Yann LeCun - Mentioned in the context of Meta's AI efforts.

- Dario Amodei - CEO of Anthropic, referenced for a deal with Nvidia.

- Patrick Gelsinger - Former CEO of Intel, referenced for his strategy.

- Lip-Bu Tan - Current CEO of Intel, referenced for his role in leveraging Intel's fabs.

- Sam Altman - CEO of OpenAI, referenced for a past investment in OpenAI.

- Sam Darnold - NFL quarterback, mentioned as an example of talent needing a different system.

- Baker Mayfield - NFL quarterback, mentioned as an example of talent needing a different system.

- Daniel Jones - NFL quarterback, mentioned as an example of talent needing a different system.

Organizations & Institutions

- Atreides Management - Company where Gavin Baker is managing partner and CIO.

- Nvidia - Semiconductor company discussed extensively in relation to GPUs and AI.

- Google - Technology company discussed in relation to TPUs and AI.

- OpenAI - AI research lab, discussed in relation to its models and costs.

- Anthropic - AI research lab, discussed in relation to its partnership with Google and Amazon, and a deal with Nvidia.

- Meta - Technology company discussed in relation to its AI predictions and efforts.

- Microsoft - Technology company discussed in relation to AI and cloud transition.

- Amazon - Technology company discussed in relation to AI models and cloud.

- Apple - Technology company discussed in relation to its AI strategy and chip design.

- Tesla - Company discussed in relation to its AI integration with Optimus and SpaceX.

- SpaceX - Company discussed in relation to data centers in space and its convergence with Tesla and xAI.

- xAI - AI company founded by Elon Musk, discussed in relation to its models and data center plans.

- Ramp - Company providing spend management solutions, mentioned as a sponsor.

- Ridgeline - Company providing operating systems for investment managers, mentioned as a sponsor.

- AlphaSense - Market intelligence platform, mentioned as a sponsor.

- Tegus - Mentioned alongside AlphaSense as a tool for research.

- The Podcast Consultant - Provided editing and post-production for the episode.

- Positive Sum - Mentioned as the company of the podcast host, Patrick O'Shaughnessy.

- Broadcom - Company involved in the back-end of semiconductor design for Google's TPUs.

- Taiwan Semiconductor Manufacturing Company (TSMC) - Semiconductor foundry.

- Intel - Semiconductor company discussed in relation to its fabs and strategy.

- MediaTek - Taiwanese semiconductor company, mentioned in relation to Google's supply chain strategy.

- Amazon Web Services (AWS) - Cloud computing service, mentioned in relation to training models.

- DARPA - Defense Advanced Research Projects Agency, mentioned for programs incentivizing technological solutions.

- Department of Defense (DoD) - Mentioned for programs incentivizing technological solutions.

- Huawei - Chinese technology company, mentioned in relation to its chips.

- DeepSeek - Chinese AI research organization, mentioned for its technical paper.

- Caterpillar - Company increasing manufacturing capacity for power generation.

- Donaldson, Lufkin & Jenrette (DLJ) - Investment bank where Gavin Baker had an internship.

- Dartmouth Mountaineering Club - Club Gavin Baker was part of in college.

- The Motley Fool - Financial media company, referenced for early discussions on return on invested capital.

- Vista - Mentioned in the context of private equity applying AI.

- ICONIQ - Mentioned for charts on AI productivity.

- a16z (Andreessen Horowitz) - Venture capital firm, mentioned for its "model busters" concept.

- C.H. Robinson - Logistics company, mentioned for AI-driven productivity gains.

- Cisco - Mentioned in the context of research reports.

- General Electric (GE) - Mentioned in the context of research reports.

- Adobe - Software company discussed in relation to its transition to SaaS.

- Microsoft Copilot - AI assistant for coding, mentioned as a business for Microsoft.

- Salesforce - CRM software company, mentioned in the context of AI adoption.

- ServiceNow - Workflow automation company, mentioned in the context of AI adoption.

- HubSpot - CRM and marketing software company, mentioned in the context of AI adoption.

- GitLab - DevOps platform, mentioned in the context of AI adoption.

- Atlassian - Software company, mentioned in the context of AI adoption.

- Nokia - Mobile phone company, referenced in a quote about burning platforms.

- Honeywell Quantum Solutions - Company involved in quantum computing.

- IBM - Technology company, mentioned as a leader in quantum computing.

- Starlink - SpaceX's satellite internet constellation, mentioned for direct-to-cell capability.

Tools & Software

- Gemini 3 - Google's AI model, discussed in relation to scaling laws.

- Gemini Ultra - Google's AI model, discussed in relation to paying for higher tiers.

- SuperGrok - xAI's paid Grok subscription tier, discussed in relation to paying for higher tiers.

- TPU (Tensor Processing Unit) - Google's custom AI accelerator.

- GPU (Graphics Processing Unit) - Nvidia's accelerator, discussed as a competitor to TPUs.

- Blackwell - Nvidia's next-generation chip.

- Hopper - Nvidia's previous generation chip.

- GB200 - Nvidia chip.

- GB300 - Nvidia chip.

- MI450 - AMD chip.

- MI355 - AMD chip.

- Trainium - Amazon's AI accelerator.

- Inferentia - Amazon's AI accelerator.

- Graviton - Amazon's CPU.

- Nitro - Amazon's "superNIC."

- PyTorch - Deep learning framework.

- JAX - Deep learning framework.

- Grok - xAI's AI model, used in an example of data center in space user experience.

- Optimus - Tesla's humanoid robot.

- Copilot - Microsoft's AI coding assistant.

Websites & Online Resources

- ramp.com/invest - Website for Ramp, mentioned as a sponsor.

- ridgelineapps.com - Website for Ridgeline, mentioned as a sponsor.

- Alpha-Sense.com/Invest - Website for AlphaSense, mentioned as a sponsor.

- thepodcastconsultant.com - Website for The Podcast Consultant.

- joincolossus.com - Website for Colossus, mentioned for podcast transcripts and publication.

- psum.vc - Website for Positive Sum.

- x.com (formerly Twitter) - Social media platform, referenced for AI discussions and news.

- openrouter.ai - Platform for accessing various AI models, referenced for token processing data.

Other Resources

- Scaling Laws for Pre-Training - Concept in AI development.

- Reinforcement Learning with Verified Rewards - AI training methodology.

- Test-Time Compute - AI computation during inference.

- Data Centers in Space - Concept for future data center infrastructure.

- Rare Earths - Minerals critical for technology, discussed in a geopolitical context.

- Return on Invested Capital (ROIC) - Financial metric.

- Edge AI - AI processed on local devices rather than the cloud.

- Artificial Superintelligence (ASI) - Hypothetical AI with intelligence surpassing humans.

- KV Cache Offload - Technique for managing context windows in AI models.

- SaaS (Software as a Service) - Business model.

- AI Native Entrepreneurs - New generation of entrepreneurs focused on AI.

- Semiconductor Venture Capital - Investment in semiconductor startups.

- Technium - Concept from Kevin Kelly's book, representing the collective intelligence of technology.

- Nuclear Power (SMRs - Small Modular Reactors) - Energy source discussed as a potential solution for power constraints.

- Quantum Computing - Advanced computing technology.

- Quantum Supremacy - Milestone in quantum computing.

- Fusion Power - Energy source.
