Building Your Personalized AI Operating System for Extreme Productivity

This conversation examines the often-overlooked consequences of integrating advanced AI assistants like Claude Bot into daily life and business operations. Beyond the immediate productivity gains, the real value lies in building a personalized "Life Operating System" (Life OS) that automates complex tasks, manages diverse responsibilities, and even anticipates needs. The underlying shift is from reactive task management to proactive, AI-augmented orchestration. Tinkerers and early adopters who embrace it now gain an advantage by building personalized AI infrastructure that is likely to become increasingly essential. This episode is for anyone who wants to move beyond basic AI prompts and treat AI as a scalable, adaptable workforce.

The Unseen Architecture: Building Your Personal AI Operating System

The discourse around AI productivity often focuses on immediate gains: faster writing, quicker code generation, or automated summaries. This conversation with Kits takes a more systemic view. It’s not just about using AI; it’s about architecting a personalized AI infrastructure, a "Life OS," that can manage and orchestrate various aspects of life and business. This means moving beyond single-purpose bots to a multi-persona, interconnected system that learns, adapts, and executes across different platforms and responsibilities.

Kits illustrates this by detailing his setup, which assigns distinct AI personas to specific functions. For instance, "Gilfoil" acts as a professional engineer equipped with technical skills, "Dr. Cox" handles health-related data, and "Kevin" manages accounting. This segmentation isn't just for novelty; it’s a deliberate choice to prevent context bleed, where details from one domain leak into conversations about another, and to let each agent operate with focused expertise. The immediate benefit is a cleaner, more organized interaction with AI; the downstream consequence is a more robust system that can handle complex, multi-faceted tasks without confusion.

"This is kind of gimmicky but it's a nice separation because if you talk to only one bot all the time about everything sometimes you cannot separate things out so you can just create multiple give them fun personalities from tv shows movies whatever."

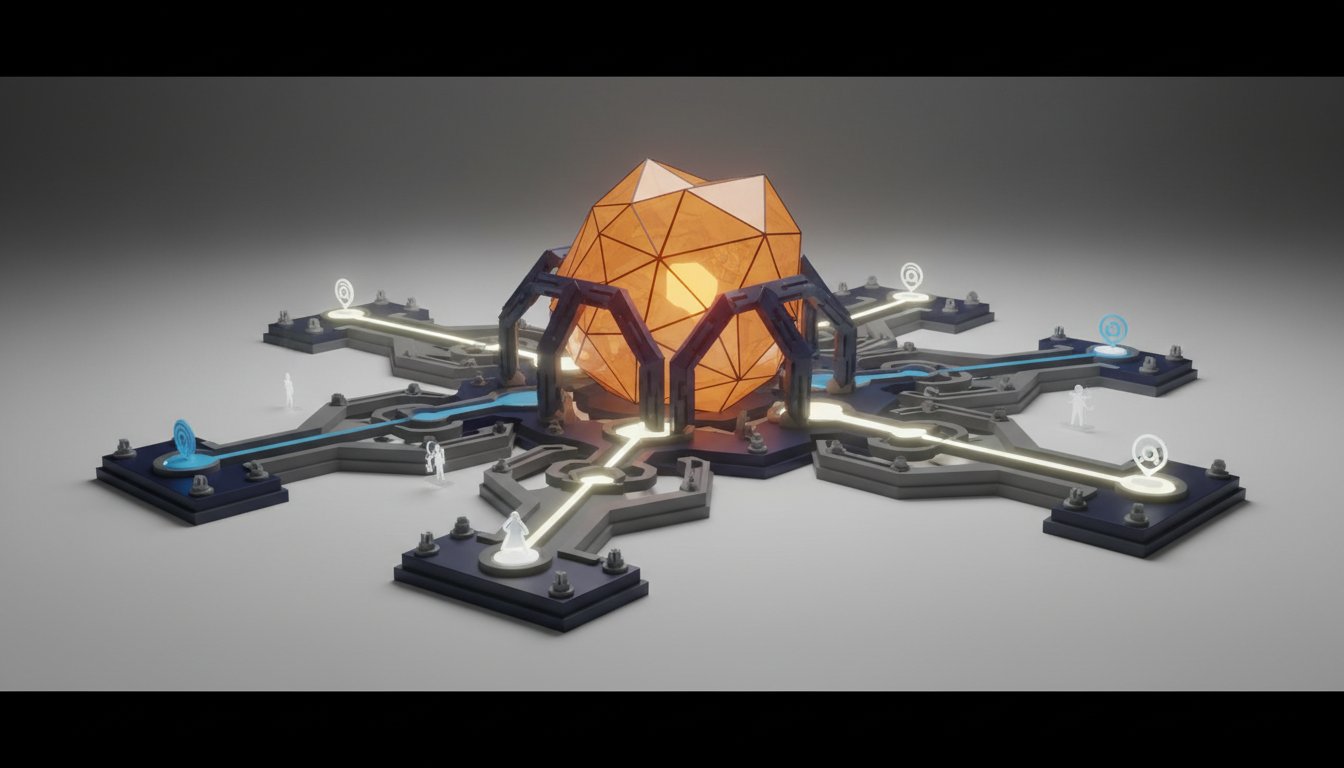
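As a rough illustration of the persona separation described above, the sketch below gives each persona its own system prompt and its own conversation history, so nothing said to one agent appears in another's context. The persona names come from the episode; the `Persona` class, the prompts, and the `ask` helper are assumptions made for this example, and the actual model call is left out.

```python
from dataclasses import dataclass, field


@dataclass
class Persona:
    """One specialized agent with its own instructions and its own memory."""
    name: str
    system_prompt: str
    history: list[dict[str, str]] = field(default_factory=list)

    def build_request(self, user_message: str) -> list[dict[str, str]]:
        """Record the message and return the full context for a model call."""
        self.history.append({"role": "user", "content": user_message})
        return [{"role": "system", "content": self.system_prompt}, *self.history]


# Persona names are from the episode; the prompts are illustrative guesses.
PERSONAS = {
    "gilfoil": Persona("Gilfoil", "You are a senior engineer. Handle code and infrastructure."),
    "dr_cox": Persona("Dr. Cox", "You track health data and summarize trends."),
    "kevin": Persona("Kevin", "You handle bookkeeping and accounting questions."),
}


def ask(persona_key: str, message: str) -> list[dict[str, str]]:
    """Build the model request for one persona; other personas never see it."""
    return PERSONAS[persona_key].build_request(message)
```

Calling `ask("kevin", "Categorize this invoice")` adds the message only to Kevin's history, which is the property that keeps accounting details out of engineering or health conversations.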

This layered approach directly addresses the limitations of single-interface AI interactions. Conventional wisdom might suggest a single, all-knowing AI assistant is ideal. However, Kits’s model suggests that specialized agents, managed through a central gateway, are easier to maintain and perform better on their own tasks. The "gateway" concept is crucial here; it acts as the central nervous system, allowing various interfaces like Telegram, iMessage, and Discord to interact with a unified AI core. This architecture enables sophisticated automation, such as scraping customer emails, opening support threads, and handing work off to sub-agents, all managed from a single point of control. The competitive advantage comes from scale: one person can manage far more complexity than was previously possible.
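The gateway idea can be sketched as a single dispatcher that every channel adapter feeds into. This is a minimal sketch under stated assumptions, not a description of Kits's actual system: the `Gateway` class, the `@agent` prefix convention, and the two example agents are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class InboundMessage:
    channel: str   # e.g. "telegram", "imessage", "discord"
    sender: str
    text: str


Handler = Callable[[InboundMessage], str]


class Gateway:
    """Single entry point: every channel adapter hands its messages to dispatch()."""

    def __init__(self, default_agent: str) -> None:
        self._agents: dict[str, Handler] = {}
        self._default = default_agent

    def register(self, name: str, handler: Handler) -> None:
        self._agents[name] = handler

    def dispatch(self, msg: InboundMessage) -> str:
        # Hypothetical convention: "@agent rest of message" selects an agent.
        name, text = self._default, msg.text
        if msg.text.startswith("@"):
            prefix, _, rest = msg.text[1:].partition(" ")
            if prefix in self._agents:
                name, text = prefix, rest
        handler = self._agents[name]
        return handler(InboundMessage(msg.channel, msg.sender, text))


gateway = Gateway(default_agent="assistant")
gateway.register("assistant", lambda m: f"[assistant via {m.channel}] {m.text}")
gateway.register("support", lambda m: f"[support via {m.channel}] opened thread for: {m.text}")
```

Because the channel is just a field on the message, adding a new interface means writing one adapter that constructs an `InboundMessage`; the agents themselves do not change.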

The conversation also highlights the critical role of self-learning and direct system access. Unlike standard AI tools that operate within a UI, Kits's setup allows his AI to interact directly with his network and computer. This is where the true "wow" factor lies: the AI can find printers, cast dashboards to TVs, and interact with home assistants. This level of integration moves beyond simple command execution to genuine problem-solving and environmental interaction.

"This has literally access to your anything that you can do on your computer and on your network this can do it too."

The implication for business is stark: teams that build and leverage these AI infrastructures will operate at a fundamentally different level of efficiency and capability. While others struggle with manual processes or basic AI prompts, these teams will have AI agents executing complex workflows, managing customer interactions, and even performing coding tasks. The payoff is delayed but large: the initial effort of setting up a personalized system creates a durable competitive moat that is difficult for others to replicate quickly. Relying solely on off-the-shelf AI tools will increasingly fall short as the complexity of business operations grows.

The Hidden Cost of "Easy" AI: Security and Model Choice

A significant portion of the discussion revolves around security and the implications of model choice, particularly when connecting sensitive data like email. Kits takes a cautious approach: beginners should not connect their email at first, and the assistant should be self-hosted on a personal machine rather than on a VPS, ideally inside a Docker container so that it cannot reach everything on the host. A public server with exposed ports widens the attack surface. This is a critical downstream consequence of rapid AI adoption: the allure of immediate access can blind users to significant security risks.
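One way to picture "dockerize it so it doesn't have access to everything" is a `docker run` invocation that binds only to the local machine and exposes a single directory. The sketch below only builds and prints such a command. The image name, workspace path, and port are hypothetical placeholders, and the flag selection is one reasonable hardening baseline rather than the setup used in the episode.

```python
import shlex


def docker_run_command(image: str, workspace: str, port: int) -> list[str]:
    """Build a `docker run` invocation that limits what the assistant can reach."""
    return [
        "docker", "run", "--rm", "--detach",
        "--read-only",                            # container filesystem is immutable
        "--cap-drop", "ALL",                      # drop all Linux capabilities
        "--security-opt", "no-new-privileges",    # block privilege escalation
        "--publish", f"127.0.0.1:{port}:{port}",  # reachable from this machine only
        "--volume", f"{workspace}:/workspace",    # the only host directory exposed
        image,
    ]


# Hypothetical image name and paths, for illustration only.
cmd = docker_run_command("my-assistant:latest", "/home/me/assistant-data", 8080)
print(shlex.join(cmd))
```

Publishing on `127.0.0.1` rather than all interfaces is what keeps the port off the network. A read-only container may also need a writable temporary directory, depending on what the assistant software expects.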

"First of all if you're just starting don't even connect your email... while we're talking about security do not host it on a virtual private server whatever if you can host it on your own machine and dockerize it so it doesn't have access to everything start there."

The choice of AI model is presented not just as a performance decision but as a security imperative. Using cheaper, less capable models with sensitive data is a recipe for disaster, because they are more susceptible to prompt injection attacks, in which instructions hidden in an email or web page hijack the assistant. Such attacks could lead to data breaches, system wipes, or even malware installation. The "smartest models," such as Opus, or Codex for coding, are recommended for critical tasks. This highlights a systemic feedback loop: the more access and data an AI has, the more critical the underlying model's robustness and security become. The immediate savings from a cheaper model are quickly overshadowed by the potentially catastrophic cost of a security compromise. Patience and a willingness to invest in higher-quality tools create a long-term advantage by preventing costly and potentially irreparable damage.
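The rule of thumb above can be written down as a small routing policy: anything that reads untrusted input or can take actions goes to the most capable model. This is a sketch under assumptions. The `Task` fields are invented for the example, and the two model names are placeholders rather than real model identifiers.

```python
from dataclasses import dataclass

# Placeholder tier names, not real model identifiers.
STRONG_MODEL = "strongest-available-model"
CHEAP_MODEL = "cheap-fast-model"


@dataclass(frozen=True)
class Task:
    description: str
    reads_untrusted_input: bool   # email bodies, web pages, customer messages
    can_take_actions: bool        # shell, file writes, sending messages


def choose_model(task: Task) -> str:
    """Route anything exposed to prompt injection to the most capable model."""
    if task.reads_untrusted_input or task.can_take_actions:
        return STRONG_MODEL
    return CHEAP_MODEL
```

A policy like this complements isolation rather than replacing it: a stronger model lowers the odds of a successful injection, while the container limits the damage if one succeeds.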

The Interface of the Future: Beyond the Screen

The conversation ventures into the future of human-AI interaction, moving beyond keyboards and screens. The exploration of AI rings and programmable e-ink devices signals a shift towards ambient, context-aware AI. The Pebble ring, with its API and microphone, represents a potential new superpower: a discreet, always-available interface for interacting with one's Life OS. When paired with location data from smartwatches and sensors, AI can gain far richer context, moving beyond generic commands to personalized, situationally aware assistance.

"This basically has an API and it just has a microphone you say whatever a voice note a memo whatever and they store it in their phone app through bluetooth and then you have an API to send that wherever you want which is going to be mental because you can use it to from controlling your lights to talking with your bot about customers and whatever like it's going to be the new superpower for like creating magic from your wrist."

This vision directly challenges the current limitations of voice assistants. By integrating AI with real-time environmental data, the "smart home" transforms from a collection of connected devices into a responsive environment. The immediate advantage for early adopters of these interfaces is the ability to orchestrate complex actions with minimal effort, blending physical and digital life. The long-term payoff is a level of personal efficiency and control that current technologies cannot match. Getting there means embracing technologies that might seem niche or experimental now, on the understanding that they are the building blocks of future productivity paradigms.
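The voice-note flow in the quote above (record a note, then send it "wherever you want") amounts to a small router sitting behind the device's API. The sketch below assumes the note has already been transcribed to text. The handlers and the keyword matching are hypothetical stand-ins, since the episode does not describe the ring's actual API.

```python
from typing import Callable


# Hypothetical handlers; real ones would call a home-automation API or the bot gateway.
def control_lights(command: str) -> str:
    return f"lights: {command}"


def forward_to_bot(note: str) -> str:
    return f"bot: {note}"


# Keyword-to-handler table, checked in order.
ROUTES: list[tuple[str, Callable[[str], str]]] = [
    ("lights", control_lights),
]


def route_voice_note(transcript: str) -> str:
    """Send a transcribed note to the first handler whose keyword it mentions."""
    lowered = transcript.lower()
    for keyword, handler in ROUTES:
        if keyword in lowered:
            return handler(transcript)
    # Anything unrecognized goes to the assistant as a general note.
    return forward_to_bot(transcript)
```

Keyword matching is the simplest possible choice here; in a fuller setup the assistant itself could decide where each note belongs.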

The Tinkerers' Advantage: Owning Your AI Future

A recurring theme is the divide between consumer AI and the "tinkerers" who are building their own AI infrastructures. Kits champions the latter, advocating for self-hosting and owning one's data and AI assistants. This approach offers a crucial advantage: control. By running AI locally, users are insulated from cloud outages, model deprecation, and the potential for AI capabilities to be "nerfed" by providers.

The "Tinker Club" is presented as a community for individuals who want to build and integrate these complex systems. This highlights the effort involved--acquiring powerful hardware, learning new APIs, and experimenting with various tools. The immediate discomfort of this learning curve and investment is precisely what creates the lasting advantage. Most consumers will opt for simpler, managed AI solutions, leaving the door open for those willing to put in the work to develop highly customized and powerful AI ecosystems. This is where true competitive separation occurs; it’s not about having the most AI, but about having the most effective AI, tailored precisely to one's needs and under one's control.

Key Action Items

Immediate Action (This Week):

- Experiment with Persona Creation: Set up 2-3 distinct AI personas with specific roles and personalities using your preferred AI tool (e.g., Claude, ChatGPT).

- Explore Interface Options: Test interacting with your AI through different channels like Telegram or Discord to understand their unique benefits and drawbacks. Avoid WhatsApp initially due to setup complexity.

- Review Security Practices: If you have connected sensitive data (like email) to your AI, immediately review your security settings, model choices, and hosting environment. Consider disconnecting if unsure.

Short-Term Investment (Next 1-3 Months):

- Develop a "Gateway" Concept: Begin conceptualizing how a central AI gateway could manage multiple specialized agents for your personal or business tasks.

- Investigate Self-Hosting: Research the feasibility and requirements for self-hosting an AI model on your local machine or a dedicated server. Prioritize Dockerization for easier management and isolation.

- Identify Core Automation Opportunities: Pinpoint 1-2 repetitive, complex tasks in your workflow that could be significantly improved by a multi-agent AI system.

Long-Term Investment (6-18 Months):

- Build a Basic Life OS: Start integrating and connecting your specialized AI agents through a central gateway, focusing on tasks that require context across different domains (e.g., customer support, personal finance).

- Explore Advanced Interfaces: Investigate emerging AI interfaces like AI rings or programmable displays to enhance contextual awareness and streamline interaction with your AI.

- Develop Custom AI Skills: Dedicate time to teaching your AI new skills or workflows that are highly specific to your unique needs, leveraging its ability to interact with your network and local systems. This is where significant personal and professional differentiation will occur.