GPU-Accelerated Storage Enables In-Place AI Data Preparation

TL;DR

- Bringing GPUs directly into traditional storage systems enables "in-place" data processing, eliminating costly data copying and reducing attack surfaces by operating on data where it resides.

- An AI Data Platform continuously prepares enterprise data for AI by finding, gathering, transforming, and embedding it, ensuring data is "AI-ready" without manual intervention.

- Decoupling AI compute from data storage allows independent scaling of each component, optimizing costs and enabling faster innovation in compute without disrupting stable data paths.

- Running AI agents directly within storage systems enhances data governance by letting agents classify data and detect policy violations in real time, helping prevent unauthorized access.

- Transforming storage systems with GPUs to manage and prepare data for AI logically extends their existing capabilities beyond encryption and keyword search to semantic understanding.

- The AI Data Platform reference design empowers storage vendors to innovate by integrating GPU acceleration, allowing them to offer advanced AI-ready capabilities to their customers.

Deep Dive

Enterprise adoption of AI agents faces significant hurdles, primarily in accessing and preparing data for AI systems. While agent technology has advanced, moving from proof of concept to production means overcoming legacy infrastructure that was not built for AI, along with the inherent difficulty of making vast amounts of unstructured enterprise data "AI-ready." Producing AI-ready data requires complex pipelines for gathering, transforming, and indexing data, and high data velocity (the combination of data growth and constant change) turns that work into a continuous and costly operation. Furthermore, current methods of preparing data often involve copying it, which increases the attack surface and creates security risks through data drift and permission inconsistencies.

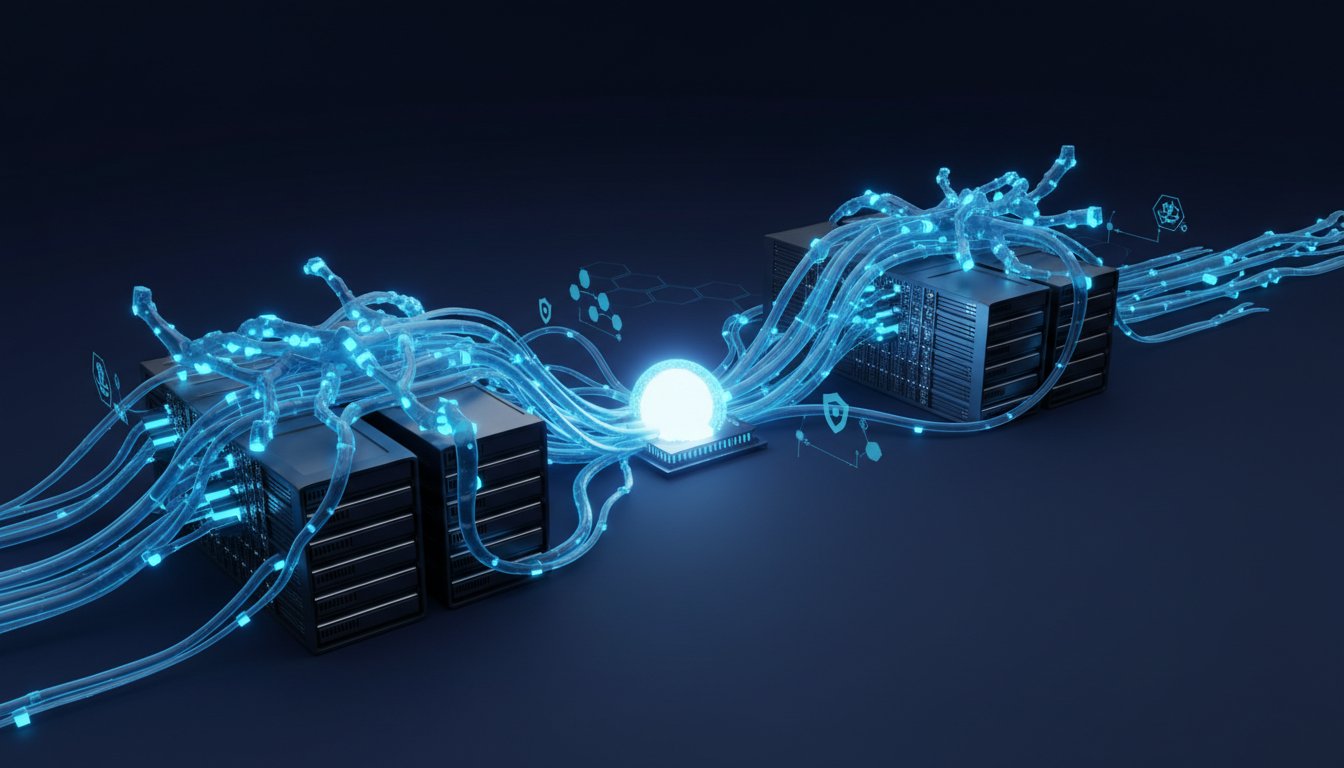

The core implication of these challenges is that traditional approaches to data management are ill-equipped for the demands of AI, leading to inefficiencies, security vulnerabilities, and slower adoption. The continuous need to reprocess data and the risks of data duplication call for a paradigm shift. This is where NVIDIA's AI Data Platform, a reference design that enables GPU acceleration directly within storage systems, offers a transformative solution. By bringing the GPU to the data, this approach eliminates the need for extensive data copying and movement, inherently improving security and efficiency. The "data gravity" principle, which acknowledges that data is difficult and expensive to move, makes in-place processing the more logical and cost-effective strategy.
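To make the "data gravity" argument concrete, the arithmetic below estimates how long it takes just to copy a data set over a network link. It is a back-of-the-envelope sketch: the function name `transfer_days`, the 1 PB data set, the link speeds, and the `efficiency` factor are illustrative assumptions, not figures from the podcast. At an ideal 10 Gb/s, one decimal petabyte takes about 9.3 days to move, before any reprocessing begins.

```python
# Illustrative "data gravity" arithmetic: time needed just to copy a data set.
# The sizes, link speeds, and efficiency factor are hypothetical examples.

SECONDS_PER_DAY = 86_400
PETABYTE = 1e15  # decimal petabyte, in bytes


def transfer_days(size_bytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Days to move size_bytes over a link of link_gbps gigabits per second.

    efficiency (0 < efficiency <= 1) is an assumed factor for protocol
    overhead and contention; 1.0 means the ideal line rate.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = size_bytes * 8
    bits_per_second = link_gbps * 1e9 * efficiency
    return bits / bits_per_second / SECONDS_PER_DAY


if __name__ == "__main__":
    for gbps in (10, 100):
        print(f"1 PB over {gbps} Gb/s: {transfer_days(PETABYTE, gbps):.2f} days")
```

Every additional copy of a changing data set pays this cost again, which is why the text favors moving compute to the data instead.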

The AI Data Platform represents a natural evolution of storage infrastructure, extending existing capabilities like encryption and keyword search to more advanced AI operations such as embedding, indexing, and semantic search. Partners are integrating this reference design by running AI agents directly within storage systems, enabling background operations that continuously prepare and secure data. This allows for more efficient data governance, such as automatically identifying and flagging sensitive documents that might otherwise be misclassified. It also optimizes system administration through agents that monitor telemetry and recommend performance improvements. The broader consequence is the decoupling of compute and storage scaling and lifecycles, allowing organizations to innovate rapidly on the compute side (adding GPUs) without necessarily overhauling their data infrastructure, thereby streamlining AI adoption and maximizing the value of data assets.
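The governance use case, an agent that flags sensitive documents that might otherwise be misclassified, can be sketched as a background scan. This is a minimal illustration under stated assumptions: the `Document` type, the "public"/"restricted" labels, and the two regex patterns are hypothetical, and simple pattern matching is swapped in as a stand-in for the semantic, model-based understanding the platform is described as providing.

```python
import re
from dataclasses import dataclass

# Hypothetical rules standing in for semantic classification by a model.
SENSITIVE_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # US Social Security number shape
    "confidential_marker": re.compile(r"\bconfidential\b", re.IGNORECASE),
}


@dataclass
class Document:
    path: str
    classification: str  # hypothetical labels: "public" or "restricted"
    text: str


def find_misclassified(docs):
    """Yield (path, sorted matched rule names) for public docs that look sensitive."""
    for doc in docs:
        if doc.classification != "public":
            continue  # already protected; nothing to flag
        hits = sorted(
            name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(doc.text)
        )
        if hits:
            yield doc.path, hits


if __name__ == "__main__":
    corpus = [
        Document("q3/plan.pdf", "public", "CONFIDENTIAL: draft roadmap"),
        Document("faq.txt", "public", "How to reset a password"),
        Document("hr/ids.csv", "restricted", "123-45-6789"),
    ]
    for path, rules in find_misclassified(corpus):
        print(f"flag {path}: {rules}")
```

Running inside the storage system, a scan like this can read documents where they reside instead of exporting them to a separate scanning service.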

Action Items

- Build AI-ready data pipeline: Collect, extract, chunk, enrich, embed, and index unstructured data for retrieval augmented generation (RAG) at enterprise scale.

- Implement GPU-accelerated storage: Deploy GPUs within traditional storage systems to process data in place, reducing data movement and increasing security.

- Audit data copies: Track and manage copies of data sets across the enterprise to reduce security risks from data drift and from permission changes that never reach the copies.

- Develop runbook template: Define the required sections (such as setup, common failures, rollback, and monitoring) to prevent knowledge silos in AI data platform operations.

- Measure data velocity impact: Quantify the rates of data growth and change so that AI-ready data transformations can be kept continuously up to date.
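The data velocity measurement can be approximated by comparing two snapshots of a file inventory. This sketch assumes a hypothetical snapshot format mapping each path to `(size_in_bytes, content_hash)` and uses the document's definition of data velocity as the rate of growth plus the rate of change; counting changed files by their current size is one possible convention, not a standard metric.

```python
from typing import Dict, Tuple

# Hypothetical snapshot format: path -> (size_in_bytes, content_hash)
Snapshot = Dict[str, Tuple[int, str]]


def data_velocity(old: Snapshot, new: Snapshot, days: float) -> dict:
    """Estimate data velocity between two snapshots taken `days` apart.

    growth_per_day:   net change in total stored bytes per day
    change_per_day:   current bytes of pre-existing files whose content changed, per day
    velocity_per_day: growth_per_day + change_per_day
    """
    if days <= 0:
        raise ValueError("days must be positive")
    old_total = sum(size for size, _ in old.values())
    new_total = sum(size for size, _ in new.values())
    # Files present in both snapshots whose hash differs must be re-prepared.
    changed_bytes = sum(
        new[path][0] for path in old.keys() & new.keys() if old[path][1] != new[path][1]
    )
    growth = (new_total - old_total) / days
    change = changed_bytes / days
    return {
        "growth_per_day": growth,
        "change_per_day": change,
        "velocity_per_day": growth + change,
    }
```

Tracking both terms separately shows whether the re-preparation workload is driven mainly by new data or by edits to existing data.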

Key Quotes

"but it's still challenging for enterprises to put agents into production so it sounds like the technology is there or you know there enough because it's always advancing right what are the challenges then um that enterprises are facing to actually deploy and gain adoption of agents that's a great question so almost every enterprise and every ceo and cio cto they are increasing their spending on ai they want to deploy ai's to production but it's difficult to move from a poc state to production so the enterprise adoption is happening but it's still challenging there are still challenges can you dive into what some of the challenges are yes so many of the challenges revolve around access to data"

Jacob Liberman explains that while AI agent technology has advanced significantly, enterprises struggle to move from proof-of-concept (POC) stages to full production deployment. He identifies a primary hurdle: secure and efficient access to accurate, up-to-date data, which is fundamental to any AI application, including agents.

"regardless of how you're using ai whether it's an agent whether you're training a model whether you're fine tuning a model or even if you're retrieving data to feed it to an llm's context all ai still relies on secure access to accurate recent data great so you know you mentioned the human factor and that's uh separate episode separate podcast in itself right because that i mean for all of us change you know and this is we have to bring lawyers and hr folks for that one right exactly when it comes to the the systems and you know the legacy infrastructure and that kind of thing is it a situation where like you kind of have to burn it down and start from scratch or can you kind of you know update and transform existing data to be ai ready"

Liberman emphasizes that all AI applications, from agents to model training, fundamentally depend on secure access to current, correct data. The host then notes that enterprise AI adoption also involves human factors, a topic for a separate episode, and asks whether legacy infrastructure must be torn down and rebuilt or whether existing data can be updated and transformed to be AI-ready.

"there is this term that's emerging called ai ready data ai ready data has gone through a number of transformations to make it efficiently and securely useful by ai systems like retrieval augmented generation or data flywheels or ai agents and making enterprise data ai ready is challenging it's challenging because the vast majority of enterprise data is unstructured it has no structure to it it's it's things like powerpoint presentations and pdfs and audio files and videos and all kinds of things that you can't just shove into a database and query with a structured query language"

Jacob Liberman introduces the concept of "AI-ready data": data that has been transformed so that AI systems can use it efficiently and securely. He highlights that making enterprise data AI-ready is difficult primarily because most enterprise data is unstructured, stored in formats like presentations, PDFs, audio, and video that cannot simply be loaded into a database and queried with a structured query language.

"the most typical use case for ai inference is retrieval augmented generation and to make your unstructured data ready for rag you have to collect all the data extract all the text the semantic knowledge within the data chunk it up into homogeneous sizes enrich it with metadata then embed it which means transform it into numeric representations that can be efficiently stored and searched and at the very end you index it into a vector database for retrieval and you're doing this at enterprise scale"

Liberman details the process of preparing unstructured data for Retrieval Augmented Generation (RAG), the most typical AI inference use case: collecting the data, extracting its text and semantic knowledge, chunking it into uniform sizes, enriching it with metadata, embedding it as numeric representations, and finally indexing it into a vector database for retrieval, all at enterprise scale.
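The pipeline Liberman describes can be sketched end to end in a few dozen lines. This is a toy, not a production design: it assumes text has already been extracted, chunks by word count, uses a hashed bag-of-words vector as a hypothetical stand-in for a real embedding model, and keeps vectors in an in-memory list in place of a vector database. All names (`chunk_words`, `embed`, `VectorIndex`, `prepare_document`) are illustrative.

```python
import hashlib
import math
import re
from dataclasses import dataclass, field

DIM = 256  # size of the toy embedding vectors (assumption)


def chunk_words(text: str, size: int = 50) -> list:
    """Split text into chunks of `size` words; the last chunk may be shorter."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> list:
    """Toy stand-in for an embedding model: a hashed bag of words, L2-normalized."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # hashlib (unlike built-in hash()) gives the same bucket on every run
        bucket = int(hashlib.sha256(token.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


@dataclass
class VectorIndex:
    """In-memory stand-in for a vector database: a list of (vector, metadata) pairs."""
    entries: list = field(default_factory=list)

    def add(self, vector: list, metadata: dict) -> None:
        self.entries.append((vector, metadata))

    def search(self, query: str, k: int = 3) -> list:
        """Return the k (score, metadata) pairs with the highest cosine similarity."""
        q = embed(query)
        # Vectors are unit length, so the dot product equals cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, v)), meta) for v, meta in self.entries]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


def prepare_document(index: VectorIndex, source: str, text: str, chunk_size: int = 50) -> int:
    """Chunk, enrich with metadata, embed, and index one already-extracted document.

    Returns the number of chunks indexed.
    """
    chunks = chunk_words(text, chunk_size)
    for n, chunk in enumerate(chunks):
        metadata = {"source": source, "chunk": n, "text": chunk}  # enrichment step
        index.add(embed(chunk), metadata)
    return len(chunks)
```

Usage: create a `VectorIndex`, call `prepare_document` for each extracted document, then call `search` with a question. Because the toy embedding only matches shared words, its rankings say nothing about the quality of a real embedding model.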

"the big challenge to enterprises is from a governance standpoint they often don't know which data has changed and if you don't know which data has changed you have to reindex all of it so uh that reminds me you know when i'm at home and let's say i put a dish into the dishwasher and it's dirty and i don't know that the dishes have already been washed and then you know my wife tracy says to me hey which dish did you put in there and i can't remember so we end up having to rewash everything and that's what enterprises are doing right now to make their data ready they're rewashing all their dishes every time they have one dirty dish"

Liberman uses a dishwasher analogy to explain a significant data governance challenge. Enterprises often lack visibility into which data has changed, so to keep their data AI-ready they re-process and re-index all of it, which is as inefficient as rewashing a full load of dishes when only one is dirty.
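The dishwasher problem has a common remedy: remember a fingerprint of each document as it was last indexed, and reprocess only what differs. The sketch below uses SHA-256 content hashes; `plan_reindex` and the dictionary shapes are hypothetical. Hashing still reads every file, so a storage system that already tracks writes (for example, through modification times or a change log) could presumably skip that step, depending on what it exposes.

```python
import hashlib
from typing import Dict, Set, Tuple


def content_hash(data: bytes) -> str:
    """Fingerprint of a document's contents."""
    return hashlib.sha256(data).hexdigest()


def plan_reindex(
    previous: Dict[str, str], current_files: Dict[str, bytes]
) -> Tuple[Set[str], Set[str]]:
    """Compare hashes recorded at the last indexing run with current contents.

    previous:      path -> hash stored when the document was last indexed
    current_files: path -> current document bytes
    Returns (to_reindex, to_delete): paths that are new or changed, and paths
    that no longer exist, whose chunks should be removed from the index.
    """
    current = {path: content_hash(data) for path, data in current_files.items()}
    to_reindex = {path for path, h in current.items() if previous.get(path) != h}
    to_delete = set(previous) - set(current)
    return to_reindex, to_delete
```

Only the "dirty dishes" in `to_reindex` go back through the pipeline; unchanged documents keep their existing embeddings.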

"when you put your data into a pipeline to make it ai ready that generally involves copying the data you don't transform the source of truth documents you make a copy and you chunk that extract text embed that and every time you make a copy you're increasing the attack surface on your data and it becomes even more threatening if you have to move that data out of your storage because as soon as you're copying the data somewhere else it becomes disconnected from the source of truth so that means if the contents change like we just said we call that data drift the content changes might not be reflected in your ai representations but what's even worse is what if the permissions change what if noah should no longer have access to this document right his permissions are removed but he can still access all the copies and we're seeing that in a typical enterprise as chatbots and agents proliferate we're seeing multiple copies of the same data set 7 13 copies appearing all around the data center disconnected from the source of truth"

Liberman points out a critical security issue in traditional AI data pipelines: data copying. Each copy made to prepare data for AI increases the attack surface, and once it leaves storage it is disconnected from the source of truth. Content changes that never reach the copies cause data drift, and permission changes that never reach them leave users who lost access still able to read the copies. As chatbots and agents proliferate, he sees multiple disconnected copies of the same data set appearing across the data center.
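An audit of data copies can check for exactly the Noah scenario: principals who can still read a copy after losing access to the source. The `Copy` record, the representation of permissions as sets of principal names, and `stale_grants` are hypothetical; a real audit would pull this information from the storage system's and each copy's own permission metadata.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class Copy:
    location: str             # where the derived copy lives (hypothetical)
    source_id: str            # ID of the source-of-truth document
    allowed: FrozenSet[str]   # principals who can read this copy


def stale_grants(
    source_acls: Dict[str, FrozenSet[str]], copies: List[Copy]
) -> Dict[str, FrozenSet[str]]:
    """Map each copy location to principals who can read the copy but not its source.

    If the source no longer exists, every grant on the copy is reported as stale.
    """
    report = {}
    for copy in copies:
        source_allowed = source_acls.get(copy.source_id, frozenset())
        extra = copy.allowed - source_allowed
        if extra:
            report[copy.location] = frozenset(extra)
    return report
```

For example, after Noah is removed from `doc-1`, a vector-store copy that still lists him is reported, while a copy with matching permissions is not.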

Resources

External Resources

Reference Designs

- "AI Data Platform" by NVIDIA - Mentioned as a reference design for GPU-accelerated storage built for AI.

People

- Jacob Liberman - Director of Enterprise Product Management at NVIDIA, discussed the AI Data Platform and its implications for enterprise storage and AI adoption.

- Noah Kravitz - Host of the NVIDIA AI Podcast.

Organizations & Institutions

- NVIDIA - Developer of the AI Data Platform and host of the NVIDIA AI Podcast.

Websites & Online Resources

- ai-podcast.nvidia.com - Website to browse the entire AI Podcast catalog.

- nvidia.com - Mentioned as a source for information on the AI Data Platform and partner implementations.

Other Resources

- AI Data Platform - A GPU-accelerated storage platform designed to make enterprise data AI-ready in place.

- Agentic AI - A technology discussed in relation to human and AI agent collaboration in the workplace.

- AI Factory - Infrastructure for data center-scale AI training and inference.

- Data Gravity - The concept that data is large, growing, and difficult to move, influencing the preference to bring compute to the data.

- Data Velocity - The rate at which data grows plus the rate at which existing data changes.

- Retrieval Augmented Generation (RAG) - A common use case for AI inference that requires data to be AI-ready.