AI Adoption Hindered by Infrastructure, Trust, and Data Gaps

TL;DR

- Enterprises face an infrastructure deficit, lacking sufficient power, compute capacity (GPUs), and network bandwidth to meet AI's growing demands, necessitating strategic data center placement and national AI infrastructure development for economic and security reasons.

- A significant trust deficit hinders AI adoption, as non-deterministic models produce unpredictable outputs, requiring proactive safety and security measures, including runtime enforcement guardrails and algorithmic jailbreaking, to ensure predictable and secure AI behavior.

- The data gap is critical, as publicly available training data is exhausted, shifting focus to machine-generated data from agentic workflows, which requires new infrastructure to correlate with human data for unlocking AI's full potential.

- The transition from AI chatbots to autonomous agents that perform tasks represents a fundamental shift from individual productivity to workflow automation, exponentially increasing the demand for data center capacity and computational resources.

- Overcoming AI adoption obstacles--infrastructure, trust, and data--is essential for companies to differentiate themselves, as the ability to harness data effectively and ensure system security will unlock AI's true potential.

- The perception of AI causing mass job loss is overhyped; instead, AI will reconfigure every job, while its underhyped potential lies in generating original insights beyond existing human knowledge to solve unprecedented problems.

Deep Dive

AI adoption is a critical priority for over 90% of enterprise leaders, yet fewer than 10% have successfully implemented AI across their organizations. This gap is not due to a lack of awareness, but rather three fundamental obstacles: insufficient infrastructure, a pervasive trust deficit, and a data gap. Overcoming these challenges is essential for enterprises to move beyond basic chatbots to autonomous agents that can automate workflows, driving significant value and preventing organizations from struggling for relevance in an AI-driven future.

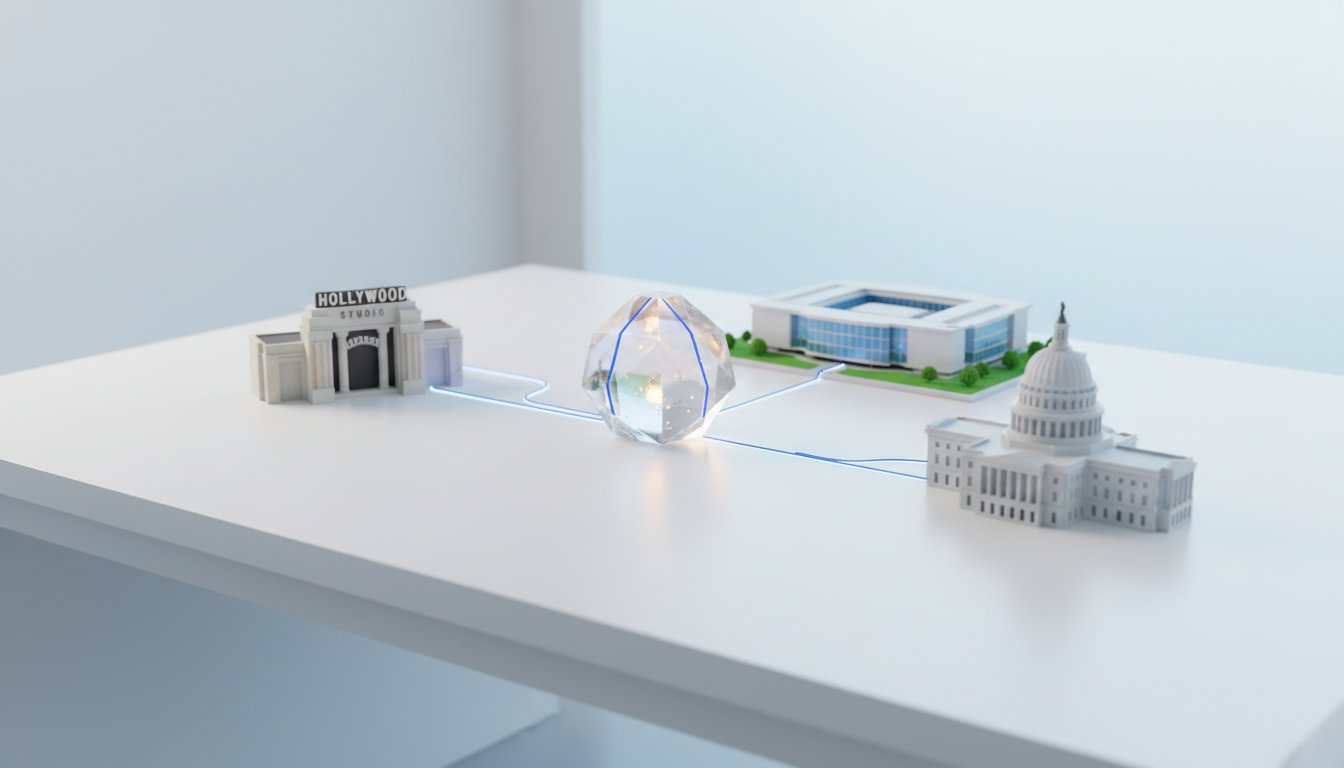

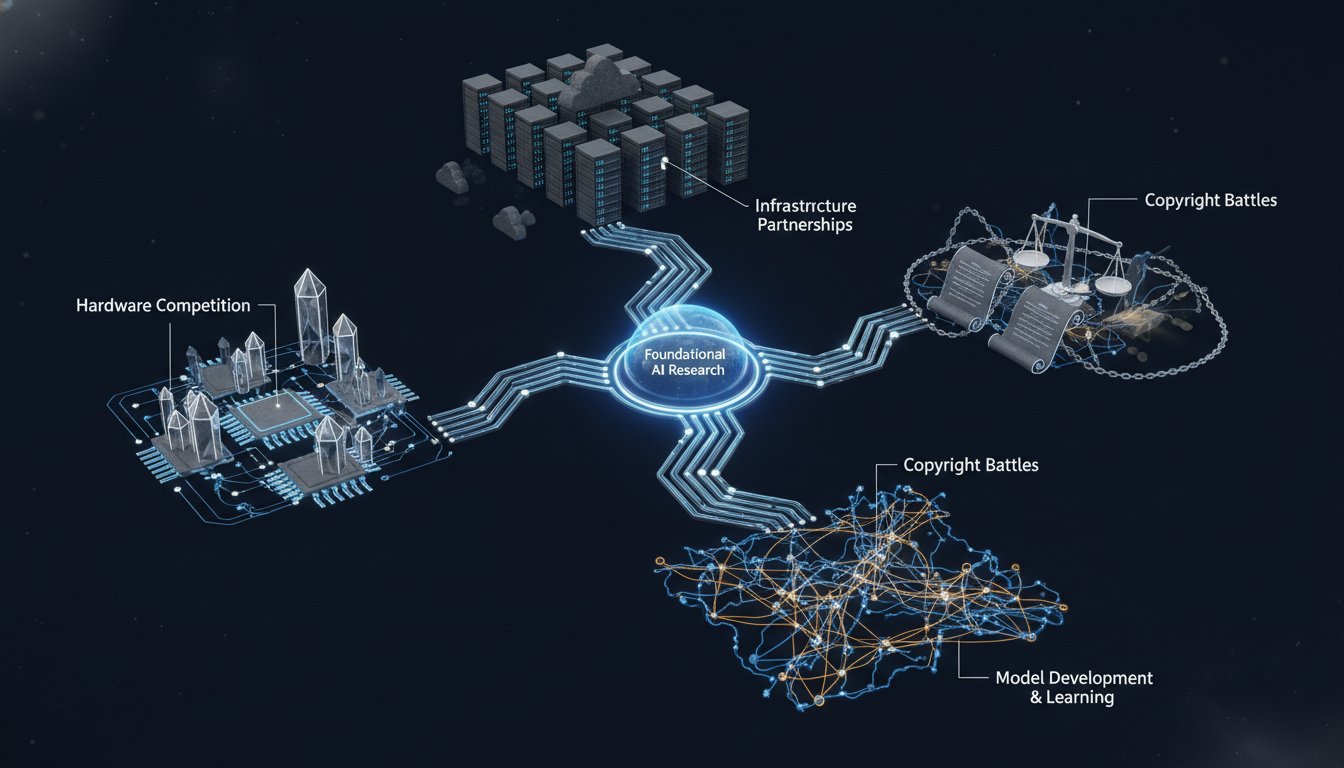

The first major impediment to AI adoption is a global shortage of infrastructure. This shortage encompasses not only the power needed to fuel data centers but also the compute capacity, particularly GPUs, and the network bandwidth required for AI operations. This constraint is so severe that data centers are being located where power is available, rather than bringing power to where data centers are needed. This infrastructure deficit has significant implications for national security and economic prosperity, as a country's ability to generate AI tokens, a key mechanism for AI processing, will be directly tied to its economic and security standing. While some perceive the massive investments in AI infrastructure, such as the multi-billion dollar projects by major tech companies, as a potential bubble, the sustained demand signals, exemplified by the continued use and revenue generation of AI services even after significant price increases, suggest a long-term, sustained demand. The increasing duration of autonomous execution for AI agents, extending from minutes to potentially hours or even days for complex tasks like coding, further underscores the growing need for robust infrastructure.

Secondly, a significant trust deficit hinders AI adoption. Many individuals and enterprises are hesitant to fully embrace AI systems due to concerns about data misuse, security vulnerabilities, and the inherent non-deterministic nature of AI models, which can produce unpredictable outputs. This unpredictability, while potentially beneficial for creative tasks, is problematic for applications requiring consistent and reliable performance, such as security software. Enterprises must ensure that AI systems are developed with robust safety and security measures, including visibility into data inputs, validation of model behavior, and the implementation of runtime enforcement guardrails. These guardrails are crucial for preventing AI models from generating harmful or unintended outputs, thereby building confidence in their use, especially within enterprise environments.

The third obstacle is the data gap. Many organizations mistakenly believe their proprietary data is an impenetrable moat, failing to recognize that the challenge lies not in possessing data, but in effectively harnessing and organizing it for AI. The publicly available data on the internet, which has historically trained AI models, is nearing exhaustion. The future growth of data will largely be machine-generated data from AI agents performing tasks, rather than human-generated data. The ability to correlate this machine data with human data is where significant value and differentiation will emerge. Overcoming these three constraints--infrastructure, trust, and data--is key to unlocking the full potential of AI, enabling companies to move from individual productivity gains to comprehensive workflow automation.

Ultimately, the successful adoption of AI hinges on addressing these core obstacles. The metrics for success in overcoming the trust deficit and data gap include algorithmically determining when AI models hallucinate inappropriately for specific use cases, and the ability to correlate machine-generated data with human data to unlock novel insights. While some companies may experience a bubble effect due to inflated valuations, the underlying trend of AI integration into every business workflow is a fundamental platform shift. The next frontier of AI will likely involve physical manifestations like robotics, presenting new challenges in safety and security, but the underhyped potential of AI lies in its capacity to generate original insights, enabling solutions to previously unimaginable problems, rather than simply automating existing tasks or replacing human jobs entirely.

Action Items

- Audit AI infrastructure: Assess power, compute, and network bandwidth needs for current and projected AI workloads.

- Implement AI trust guardrails: Develop algorithmic methods to pressure test AI models for safety and security vulnerabilities.

- Measure AI hallucination variance: Establish benchmarks to differentiate between acceptable poetic hallucination and critical security bugs.

- Analyze machine-generated data: Develop pipelines to correlate machine-generated time-series data with human data for AI training.

- Track AI agent execution duration: Monitor and document the increasing duration of autonomous AI agent task completion.

Key Quotes

"Most studies show that AI adoption is a priority to more than 90 of enterprise leaders, yet the very same studies show that less than 10% of enterprises have adopted AI across their entire organization. Like, why? If everyone knows adopting to AI has to be one of your biggest priorities in 2025, 2026, and beyond, why are so few organizations actually able to implement it from top to bottom?"

The speaker, Jeetu Patel, highlights a significant disconnect between stated AI adoption priorities and actual implementation rates among enterprises. Patel questions the reasons behind this gap, suggesting that despite widespread recognition of AI's importance, many organizations struggle to achieve full-scale adoption. This observation sets the stage for exploring the obstacles hindering widespread AI integration.

"We're now moving to the second phase of AI, which is moving from these chatbots to agents that can get tasks and jobs done almost fully autonomously. So, it's not it's no longer just about I ask you a question, I get back an answer; it's now about making sure that you can have full-fledged workflows within companies get automated. We're moving from a world of individual productivity to workflow automation."

Patel describes a critical evolution in AI capabilities, transitioning from simple question-answering chatbots to more sophisticated agents capable of autonomous task completion. He emphasizes that this shift signifies a move towards automating entire business workflows, thereby transforming AI's role from enhancing individual productivity to driving comprehensive workflow automation. This transition is presented as a key development in the current AI landscape.

"The first big impediment is we simply don't have enough infrastructure in the world to power the needs for AI to satisfy the needs of AI. What does that mean? You don't have enough power in the world, enough electricity to be able to fuel these data centers that are going to be needed for AI. That's number one. You don't have enough compute capacity in the world, meaning the GPUs that companies like NVIDIA and AMD make. We just don't have enough compute capacity in the world, and then you don't have enough network bandwidth in the world."

Patel identifies insufficient global infrastructure as the primary obstacle to AI adoption. He elaborates that this includes a lack of adequate power and electricity to support the necessary data centers, a shortage of essential compute capacity (specifically GPUs), and insufficient network bandwidth. Patel argues that these infrastructure limitations are fundamental constraints that must be addressed for AI to scale effectively.

"The second big constraint is a trust deficit. People just don't trust these systems. And if I use AI, is it going to use my data in the wrong way? Is it going to misuse anything that I tell it to do? And so you have to make sure that you figure out a way that these safety and security concerns that people have with AI are addressed."

Patel points to a significant trust deficit as the second major barrier to AI adoption. He explains that users, particularly in enterprise settings, harbor concerns about data privacy and the potential misuse of their information by AI systems. Patel stresses the necessity of addressing these safety and security concerns to build the confidence required for broader AI system utilization.

"The third area is a data gap. And what do I mean by a data gap? Most companies, Jordan, think that their data is their moat. They are going to have their data, which is their unique differentiator that only they have, being able to be used to go out and unlock the full potential for AI for their company. The reality is is most people don't know how to harness that data effectively and organize it in the right way so that they can take advantage of the full potential of AI."

Patel identifies a data gap as the third critical obstacle, explaining that while many companies view their data as a unique competitive advantage for AI, they often lack the expertise to effectively harness and organize it. He suggests that the inability to properly leverage proprietary data prevents organizations from fully realizing AI's potential. Patel frames this as a challenge in data utilization rather than data availability.

"What's overhyped in AI is all of us are going to lose our jobs and we're just going to be staring at the ocean and not have enough to do because AI is going to do everything. I think that's nonsense. We're going to have human-created creativity is nowhere near coming to an end. We are actually going to have so much value to add to society, and so I don't believe AI is going to take every single job away."

Patel dismisses the notion that AI will lead to widespread job loss and a future of idleness. He argues that human creativity will remain vital and that AI will augment, rather than replace, human contributions, leading to increased societal value. Patel asserts that while jobs will be reconfigured, the idea of AI eliminating all employment is unfounded.

"However, every job will get reconfigured with AI. That's important. Now, what's underhyped about AI? Is that we're going to have, and you're starting to see this already in some meaningful ways, there are going to be original insights. You know, up until now, AI's been used as an aggregation mechanism. It's going to give me the right answer based on the things that I've trained it on. But what about if AI could generate original insights that don't exist in the human corpus of knowledge?"

Patel posits that while AI will reconfigure jobs, its most underhyped potential lies in its ability to generate original insights, moving beyond its current role as an aggregation mechanism. He suggests that AI could uncover novel knowledge not present in existing human data. Patel believes this capacity for generating original insights will unlock unprecedented problem-solving capabilities.

Resources

External Resources

Articles & Papers

- Harm Bench - Mentioned as a benchmark for assessing model behavior and identifying jailbreaking vulnerabilities.

People

- Jeetu Patel - President of Cisco, discussed as an expert on AI adoption and its obstacles.

- Jordan Wilson - Host of the Everyday AI Podcast, interviewer.

Organizations & Institutions

- Cisco - Discussed for its role in providing enterprise AI solutions and developing products for infrastructure, trust, and data in AI.

- OpenAI - Mentioned in relation to ChatGPT, its pricing models, and its role in the generative AI phase.

- Nvidia - Referenced for its production of GPUs, indicating demand for compute capacity.

- AMD - Referenced for its production of GPUs, indicating demand for compute capacity.

- Anthropic - Mentioned for launching a new coding tool with a 30-hour autonomous execution duration.

- Microsoft - Mentioned as a partner of the Everyday AI Podcast and for its investment in AI infrastructure.

- Adobe - Mentioned as a partner of the Everyday AI Podcast.

Websites & Online Resources

- your everyday ai com - Referenced as the website for the Everyday AI Podcast's free daily newsletter and partner inquiries.

Other Resources

- Generative AI - Discussed as the current phase of AI, moving from chatbots to agents that can perform tasks.

- Agentic workflows - Discussed as a key development in the second phase of AI, where agents can perform tasks autonomously.

- Machine-generated data - Discussed as a significant and growing source of data for training AI models, distinct from human-generated data.

- Synthetic data - Mentioned as artificial data used to train AI models.

- Non-deterministic models - Described as the inherent nature of AI models, meaning they can produce different outputs for the same input.

- AI Defense - A product developed by Cisco to assess data going into models, validate model behavior, and enforce runtime guardrails.

- Physical AI / Robotics / Humanoids - Identified as the potential next phase of AI development.